Cycle Encoding of a StyleGAN Encoder for Improved Reconstruction and Editability

Jul 19, 2022

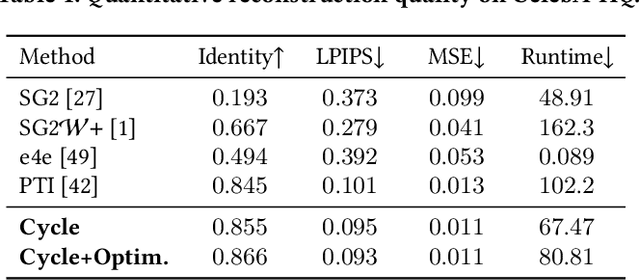

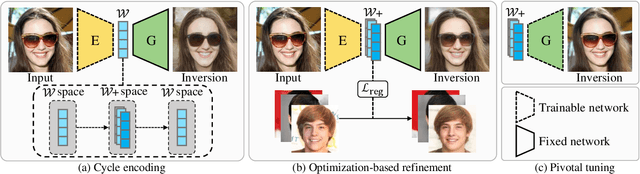

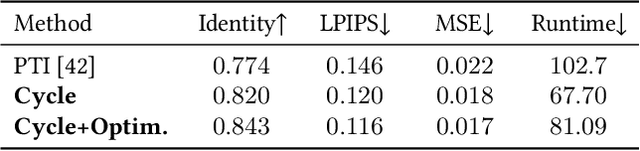

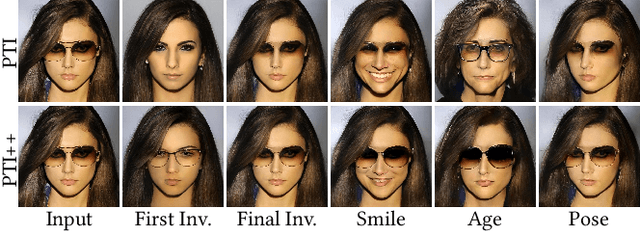

GAN inversion aims to invert an input image into the latent space of a pre-trained GAN. Despite recent advances in GAN inversion, it remains challenging to mitigate the tradeoff between distortion and editability, i.e., between reconstructing the input image accurately and editing the inverted image with only a small drop in visual quality. The recently proposed pivotal tuning model makes significant progress on both reconstruction and editability by using a two-step approach: it first inverts the input image into a latent code, called the pivot code, and then alters the generator so that the pivot code reconstructs the input image accurately. Here, we show that both reconstruction and editability can be improved by a proper design of the pivot code. We present a simple yet effective method, named cycle encoding, for obtaining a high-quality pivot code. The key idea of our method is to progressively train an encoder in varying spaces according to a cycle scheme: W -> W+ -> W. This training methodology preserves the properties of both the W and W+ spaces, i.e., the high editability of W and the low distortion of W+. To further decrease the distortion, we also propose to refine the pivot code with an optimization-based method, in which a regularization term is introduced to reduce the degradation in editability. Qualitative and quantitative comparisons with several state-of-the-art methods demonstrate the superiority of our approach.
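
The cycle scheme can be pictured with a small sketch. This is only an illustration under stated assumptions, not the authors' implementation. It assumes the encoder predicts one style vector per generator layer, that the W phase is modeled by tying all layers to a single shared vector (here their per-dimension mean), and that the phases switch at fixed step counts. The names `space_for_step`, `constrain`, `w_steps`, and `wplus_steps` are hypothetical.

```python
from typing import List

# A latent code: one style vector per generator layer (assumed layout).
Code = List[List[float]]


def space_for_step(step: int, w_steps: int, wplus_steps: int) -> str:
    """Return the latent space used at a training step in a W -> W+ -> W cycle.

    The phase lengths are hypothetical hyperparameters, not values from the paper.
    """
    if step < w_steps:
        return "W"
    if step < w_steps + wplus_steps:
        return "W+"
    return "W"  # final phase returns to W


def constrain(code: Code, space: str) -> Code:
    """Restrict a per-layer code to the given space.

    In W+ every layer keeps its own vector. In W all layers share one
    vector; tying them to the per-dimension mean is an assumption made
    for this sketch.
    """
    if space == "W+":
        return [row[:] for row in code]
    n_layers = len(code)
    shared = [sum(column) / n_layers for column in zip(*code)]
    return [shared[:] for _ in code]
```

With `w_steps=10` and `wplus_steps=5`, steps 0 to 9 would train in W, steps 10 to 14 in W+, and all later steps in W again. The sketch deliberately leaves out the losses and the pivot-code refinement step, since the abstract does not specify their form.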
