Cryptanalytic Extraction of Neural Network Models

Paper and Code

Mar 10, 2020

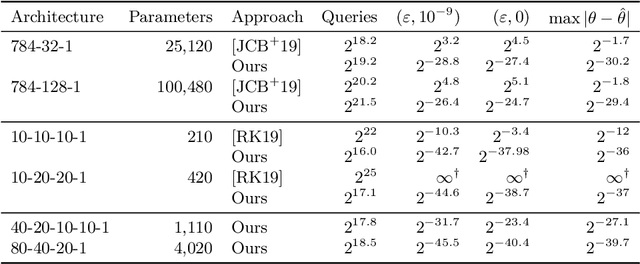

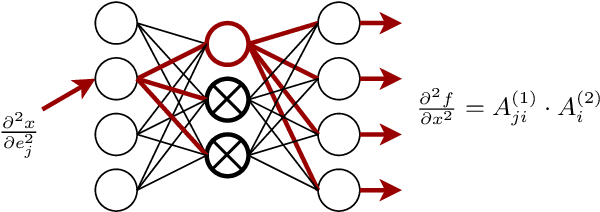

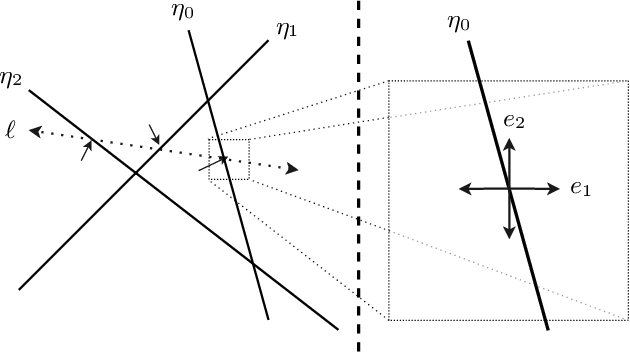

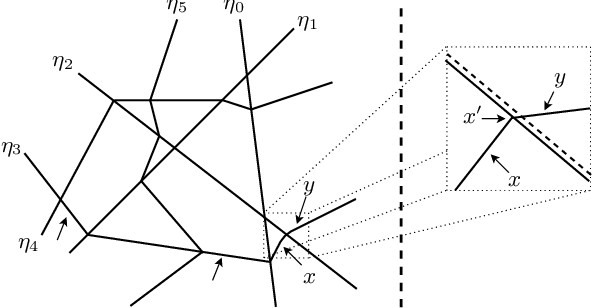

We argue that the machine learning problem of model extraction is actually a cryptanalytic problem in disguise, and should be studied as such. Given oracle access to a neural network, we introduce a differential attack that can efficiently steal the parameters of the remote model up to floating point precision. Our attack relies on the fact that ReLU neural networks are piecewise linear functions, and that queries at the critical points reveal information about the model parameters. We evaluate our attack on multiple neural network models and extract models that are 2^20 times more precise and require 100x fewer queries than prior work. For example, we extract a 100,000 parameter neural network trained on the MNIST digit recognition task with 2^21.5 queries in under an hour, such that the extracted model agrees with the oracle on all inputs up to a worst-case error of 2^-25, or a model with 4,000 parameters in 2^18.5 queries with worst-case error of 2^-40.4.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge