Cross-Modal Manifold Learning for Cross-modal Retrieval


Dec 19, 2016

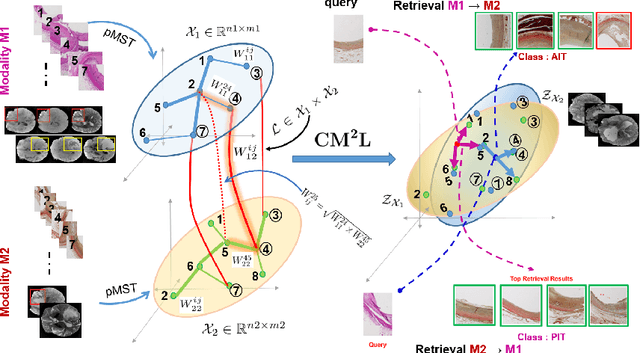

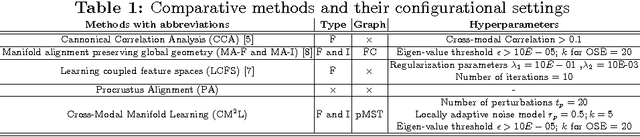

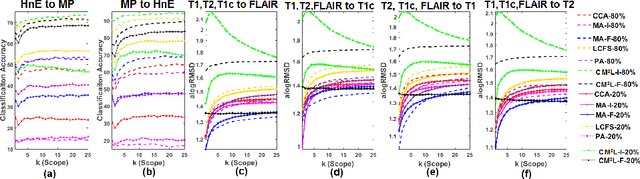

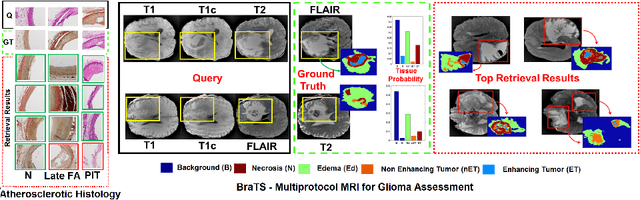

This paper presents a new scalable algorithm for cross-modal, similarity-preserving retrieval in a learnt manifold space. Unlike existing approaches that compromise between preserving global and local geometries, the proposed technique respects both simultaneously during manifold alignment. The global topologies are maintained by recovering the underlying mapping functions in the joint manifold space using partially corresponding instances. The intra- and inter-modality affinity matrices are then computed to reinforce the original data skeleton using a perturbed minimum spanning tree (pMST) and to maximize the affinity among similar cross-modal instances, respectively. The performance of the proposed algorithm is evaluated on two multimodal image datasets (coronary atherosclerosis histology and brain MRI) for two applications: classification and regression. Our exhaustive validations and results demonstrate the superiority of our technique over comparative methods and its feasibility for improving computer-assisted diagnosis systems, where disease-specific complementary information must be aggregated and interpreted across modalities to form the final decision.
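To make the pMST idea in the abstract more concrete, here is a minimal sketch of one common way to build a perturbed-MST affinity within a single modality. It is not the authors' implementation. It assumes the perturbation is isotropic Gaussian noise added to the feature vectors, and that the affinity between two samples is the fraction of perturbed trees in which they share an edge. The function names and the parameters `n_trees`, `noise_scale`, and `seed` are hypothetical and chosen only for illustration.

```python
import math
import random


def minimum_spanning_tree(points):
    """Return MST edges (i, j), i < j, over Euclidean distances (Prim's algorithm)."""
    n = len(points)
    if n < 2:
        return set()
    in_tree = [False] * n
    best_dist = [math.inf] * n  # cheapest known connection to the growing tree
    best_from = [-1] * n        # tree node that provides that connection
    best_dist[0] = 0.0          # start the tree at node 0
    edges = set()
    for _ in range(n):
        # Pick the node outside the tree that is closest to it.
        u = min((i for i in range(n) if not in_tree[i]), key=lambda i: best_dist[i])
        in_tree[u] = True
        if best_from[u] >= 0:
            edges.add((min(u, best_from[u]), max(u, best_from[u])))
        # Update the cheapest connections of the remaining nodes.
        for v in range(n):
            if not in_tree[v]:
                d = math.dist(points[u], points[v])
                if d < best_dist[v]:
                    best_dist[v] = d
                    best_from[v] = u
    return edges


def pmst_affinity(points, n_trees=20, noise_scale=0.05, seed=0):
    """Symmetric affinity: fraction of perturbed MSTs containing each edge.

    Assumption: Gaussian jitter with standard deviation `noise_scale` is
    applied independently to every coordinate before each tree is built.
    """
    rng = random.Random(seed)
    n = len(points)
    counts = [[0] * n for _ in range(n)]
    for _ in range(n_trees):
        noisy = [[x + rng.gauss(0.0, noise_scale) for x in p] for p in points]
        for i, j in minimum_spanning_tree(noisy):
            counts[i][j] += 1
            counts[j][i] += 1
    return [[c / n_trees for c in row] for row in counts]
```

Calling `pmst_affinity(points)` on a list of feature vectors returns an n-by-n matrix with zeros on the diagonal. Edges that survive most perturbations get values near 1, which is one way to read "reinforcing the data skeleton": stable neighbourhood links are kept, while links that depend on small sampling noise are down-weighted.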
