Coreset selection can accelerate quantum machine learning models with provable generalization


Sep 19, 2023

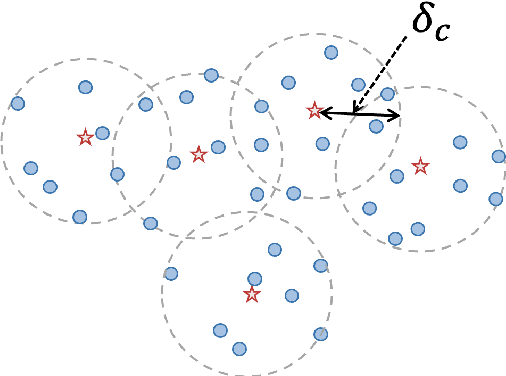

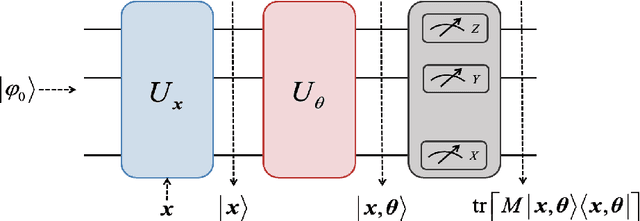

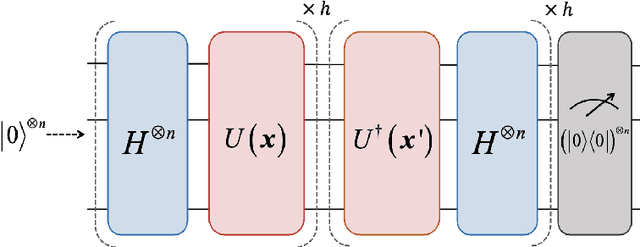

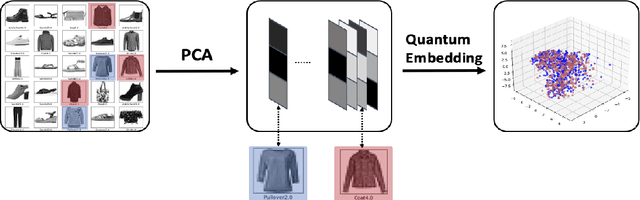

Quantum neural networks (QNNs) and quantum kernels are prominent quantum machine learning models that aim to use the nascent capabilities of near-term quantum computers to overcome challenges in classical machine learning. However, poor training efficiency limits both QNNs and quantum kernels when they are applied to large datasets. To address this, we present a unified approach, coreset selection, which speeds up the training of QNNs and quantum kernels by distilling a judicious subset from the original training dataset. Furthermore, we analyze the generalization error bounds of QNNs and quantum kernels trained on such coresets and show that their performance is comparable to that of models trained on the complete original dataset. Through systematic numerical simulations, we illustrate the potential of coreset selection to accelerate tasks including synthetic data classification, identification of quantum correlations, and quantum compiling. Our work offers a practical way to improve diverse quantum machine learning models with a theoretical guarantee while reducing the training cost.
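To make the idea of distilling a subset from the training data concrete, here is a minimal sketch of one common coreset construction, the greedy k-center (farthest-point) heuristic. The abstract does not say which selection rule is used, so the choice of k-center, the Euclidean distance, and the names `k_center_greedy` and `coreset_weights` are all assumptions made for illustration. The sketch covers only the classical selection step; training a QNN or quantum kernel on the selected points is not shown.

```python
import math
import random


def k_center_greedy(points, k, first=0):
    """Return k indices chosen by the greedy farthest-point heuristic.

    Assumption for illustration: Euclidean distance on classical feature
    vectors. Starts from index `first` and repeatedly adds the point that
    is farthest from all centers picked so far.
    """
    if not 0 < k <= len(points):
        raise ValueError("k must be between 1 and len(points)")
    selected = [first]
    # Distance from each point to its nearest selected center so far.
    nearest = [math.dist(p, points[first]) for p in points]
    while len(selected) < k:
        # The point farthest from every current center becomes the next center.
        nxt = max(range(len(points)), key=nearest.__getitem__)
        selected.append(nxt)
        nearest = [min(d, math.dist(p, points[nxt]))
                   for d, p in zip(nearest, points)]
    return selected


def coreset_weights(points, selected):
    """Count how many original points are closest to each selected center."""
    counts = [0] * len(selected)
    for p in points:
        j = min(range(len(selected)),
                key=lambda i: math.dist(p, points[selected[i]]))
        counts[j] += 1
    return counts


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical toy data: two Gaussian blobs of 50 points each.
    data = [(rng.gauss(cx, 0.1), rng.gauss(cy, 0.1))
            for cx, cy in [(0, 0), (3, 3)] for _ in range(50)]
    idx = k_center_greedy(data, k=4)
    print("coreset indices:", idx)
    print("points represented by each:", coreset_weights(data, idx))
```

The counts returned by `coreset_weights` could serve as per-sample weights in a training loss, so that each selected point stands in for the original points nearest to it. Whether and how such weights are used is a design choice outside what the abstract states.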
