Convergence Guarantees for Gaussian Process Approximations Under Several Observation Models

Paper and Code

Jan 29, 2020

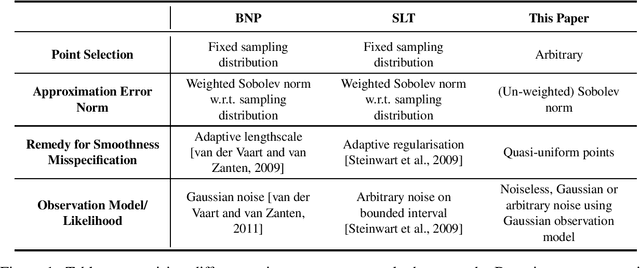

Gaussian processes are ubiquitous in statistical analysis, machine learning and applied mathematics. They provide a flexible modelling framework for approximating functions, whilst simultaneously quantifying our uncertainty about this task in a computationally tractable manner. An important question is whether these approximations will be accurate, and if so how accurate, given our various modelling choices and the difficulty of the problem. This is of practical relevance, since the answer informs our choice of model and sampling distribution for a given application. Our paper provides novel approximation guarantees for Gaussian process models based on covariance functions with finite smoothness, such as the Mat\'ern and Wendland covariance functions. They are derived from a sampling inequality which facilitates a systematic approach to obtaining upper bounds on Sobolev norms in terms of properties of the design used to collect data. This approach allows us to refine some existing results which apply in the misspecified smoothness setting and which allow for adaptive selection of hyperparameters. However, the main novelty in this paper is that our results cover a wide range of observation models including interpolation, approximation with deterministic corruption and regression with Gaussian noise.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge