Context-aware and Scale-insensitive Temporal Repetition Counting


May 18, 2020

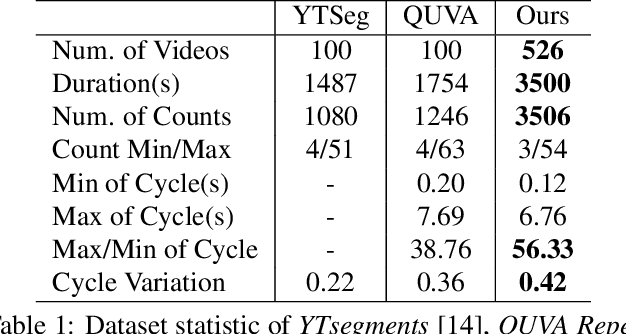

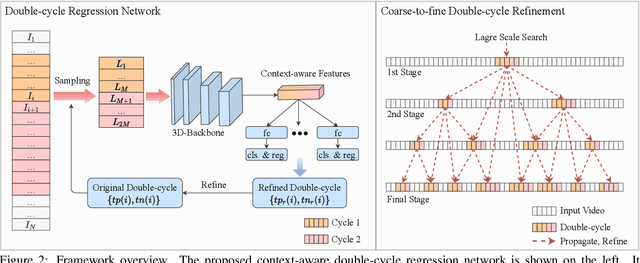

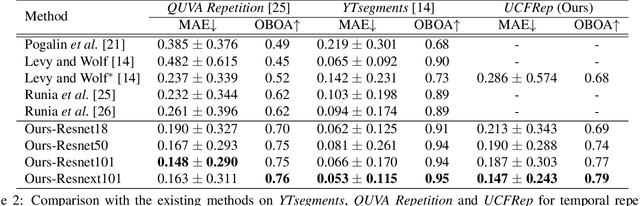

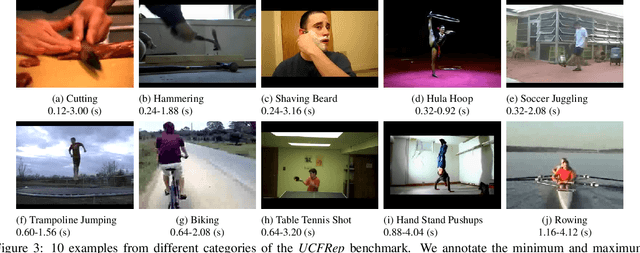

Temporal repetition counting aims to estimate the number of cycles of a given repetitive action. Existing deep learning methods assume that repetitive actions are performed at a fixed time scale, an assumption that does not hold for the complex repetitive actions found in real life. In this paper, we tailor a context-aware and scale-insensitive framework to tackle the challenges in repetition counting caused by unknown and diverse cycle lengths. Our approach combines two key insights: (1) Cycle lengths of different actions are unpredictable and therefore require a large-scale search, but once a coarse cycle length is determined, the variation between repetitions can be handled by regression. (2) Determining the cycle length cannot rely on a short fragment of video alone; it requires contextual understanding. The first insight is implemented by a coarse-to-fine cycle refinement method. It avoids the heavy computation of exhaustively searching all cycle lengths in the video and instead propagates the coarse prediction for further refinement in a hierarchical manner. For the second insight, we propose a bidirectional cycle length estimation method for context-aware prediction. It is a regression network that takes two consecutive coarse cycles as input and predicts the locations of the previous and next repetitive cycles. To benefit training and evaluation in the temporal repetition counting area, we construct a new benchmark, the largest to date, which contains 526 videos with diverse repetitive actions. Extensive experiments show that the proposed network, trained on a single dataset, outperforms state-of-the-art methods on several benchmarks, indicating that the proposed framework is general enough to capture repetition patterns across domains.
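
The coarse-to-fine idea in the abstract can be illustrated with a toy sketch. This is not the paper's method: the regression network is replaced here by a plain self-similarity score on a 1D signal, and the names `similarity`, `coarse_to_fine_period`, and `count_repetitions`, along with the `coarse_step` parameter, are hypothetical. It also assumes the search range `[min_period, max_period]` excludes multiples of the true period and that the score peaks smoothly near it.

```python
import numpy as np


def similarity(signal, period):
    """Pearson correlation between the signal and itself shifted by `period`."""
    a = signal[:-period] - signal[:-period].mean()
    b = signal[period:] - signal[period:].mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom > 0 else 0.0


def coarse_to_fine_period(signal, min_period, max_period, coarse_step=8):
    """Search a coarse grid of cycle lengths, then refine around the best one.

    Assumption (not from the paper): the score is roughly unimodal near the
    true period, so halving the step around the current best is enough.
    """
    def best_of(candidates):
        return max(candidates, key=lambda p: similarity(signal, p))

    # Coarse pass: only every `coarse_step`-th cycle length is scored.
    best = best_of(range(min_period, max_period + 1, coarse_step))

    # Refinement passes: look one step to each side, halving the step each time.
    step = coarse_step
    while step > 1:
        step //= 2
        neighbours = [p for p in (best - step, best, best + step)
                      if min_period <= p <= max_period]
        best = best_of(neighbours)
    return best


def count_repetitions(signal, period):
    """Estimate the number of cycles as signal length divided by cycle length."""
    return int(round(len(signal) / period))


# Toy input: 500 samples of a sine wave with a 25-sample cycle.
signal = np.sin(2 * np.pi * np.arange(500) / 25)
period = coarse_to_fine_period(signal, min_period=10, max_period=45)
print(period, count_repetitions(signal, period))
```

With these settings the coarse pass scores only 5 candidate lengths and the refinement adds at most 3 per level, compared with 36 for an exhaustive scan of the same range. That saving is what the hierarchical propagation in the abstract is after, although the paper refines with a learned regressor rather than a fixed score.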
