Content-Agnostic Moderation for Stance-Neutral Recommendation

Paper and Code

May 29, 2024

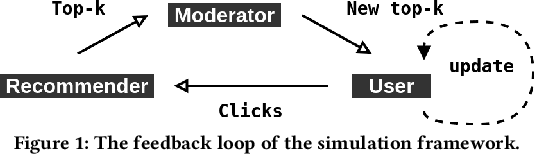

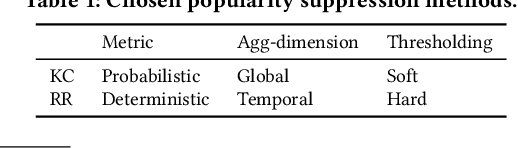

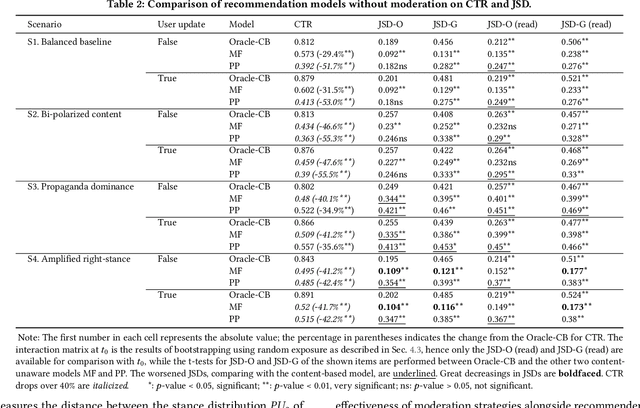

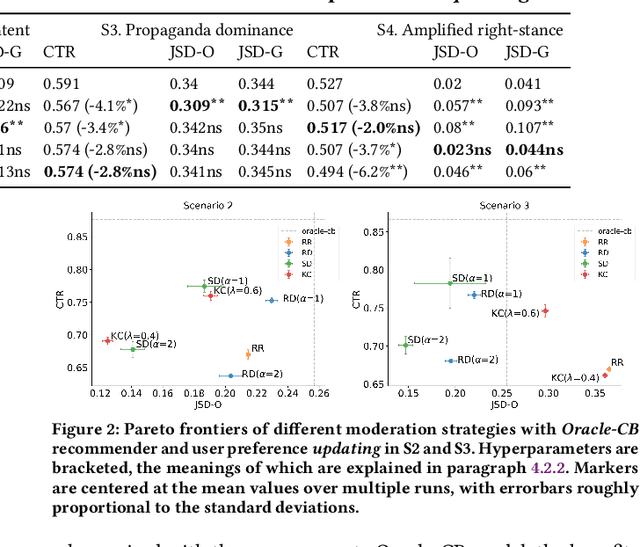

Personalized recommendation systems often drive users towards more extreme content, exacerbating opinion polarization. While (content-aware) moderation has been proposed to mitigate these effects, such approaches risk curtailing the freedom of speech and of information. To address this concern, we propose and explore the feasibility of *content-agnostic* moderation as an alternative approach for reducing polarization. Content-agnostic moderation does not rely on the actual content being moderated, arguably making it less prone to forms of censorship. We establish theoretically that content-agnostic moderation cannot be guaranteed to work in a fully generic setting. However, we show that it can often be effectively achieved in practice under plausible assumptions. We introduce two novel content-agnostic moderation methods that modify the recommendations from the content recommender to disperse user-item co-clusters without relying on content features. To evaluate the potential of content-agnostic moderation in controlled experiments, we built a simulation environment to analyze the closed-loop behavior of a system with a given set of users, recommendation system, and moderation approach. Through comprehensive experiments in this environment, we show that our proposed moderation methods significantly enhance stance neutrality and maintain high recommendation quality across various data scenarios. Our results indicate that achieving stance neutrality without direct content information is not only feasible but can also help in developing more balanced and informative recommendation systems without substantially degrading user engagement.
