Constrained Machine Learning: The Bagel Framework

Paper and Code

Dec 02, 2021

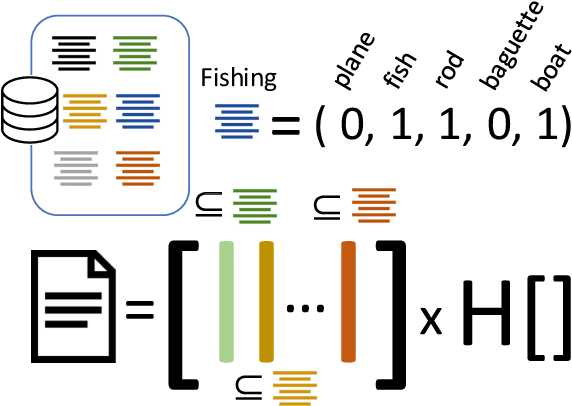

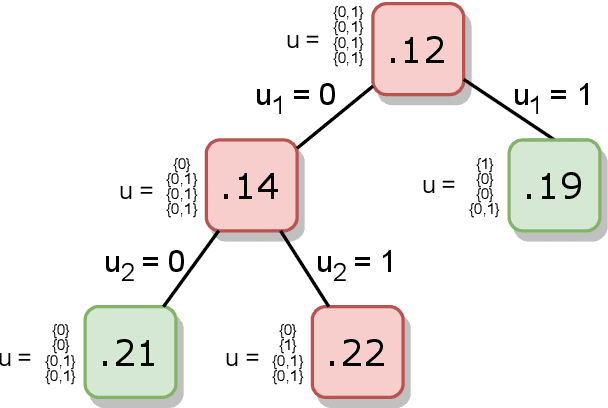

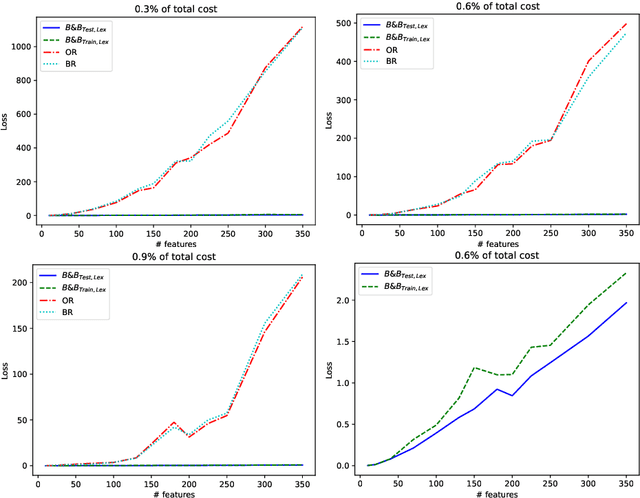

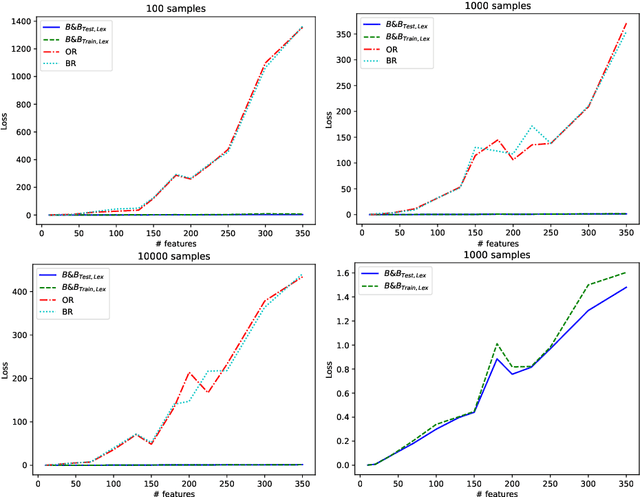

Machine learning models are widely used for real-world applications, such as document analysis and vision. Constrained machine learning problems are problems where learned models have to both be accurate and respect constraints. For continuous convex constraints, many works have been proposed, but learning under combinatorial constraints is still a hard problem. The goal of this paper is to broaden the modeling capacity of constrained machine learning problems by incorporating existing work from combinatorial optimization. We propose first a general framework called BaGeL (Branch, Generate and Learn) which applies Branch and Bound to constrained learning problems where a learning problem is generated and trained at each node until only valid models are obtained. Because machine learning has specific requirements, we also propose an extended table constraint to split the space of hypotheses. We validate the approach on two examples: a linear regression under configuration constraints and a non-negative matrix factorization with prior knowledge for latent semantics analysis.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge