Consistency Regularization with Generative Adversarial Networks for Semi-Supervised Image Classification


Jul 08, 2020

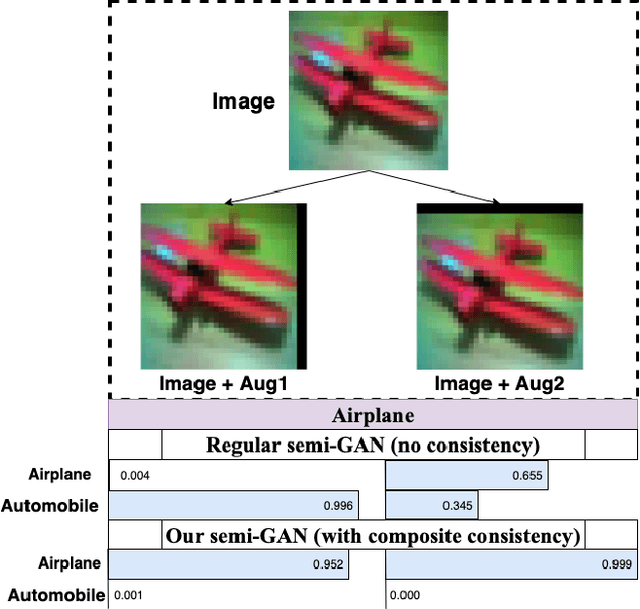

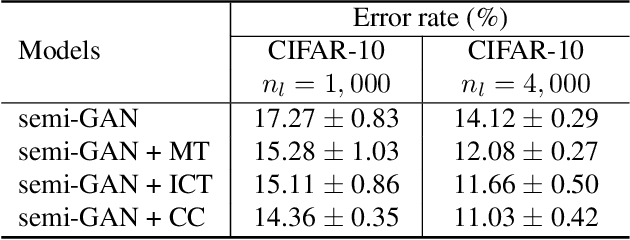

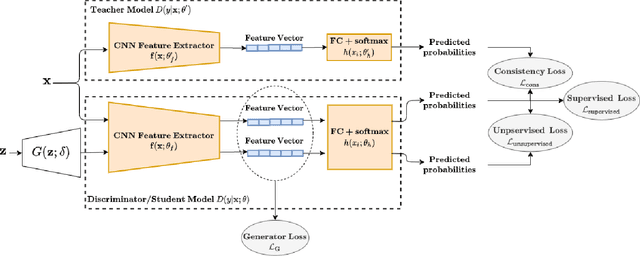

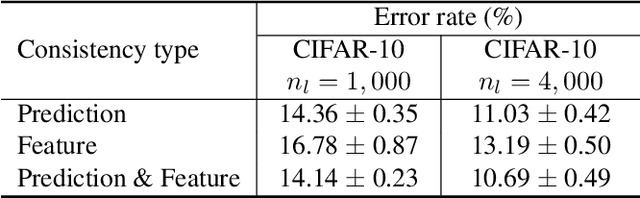

Generative adversarial network (GAN)-based semi-supervised learning (SSL) approaches have been shown to improve classification performance by using a large number of unlabeled samples together with a limited number of labeled samples. However, their performance still lags behind that of state-of-the-art non-GAN-based SSL approaches. One main reason we identify is a lack of consistency in class probability predictions on the same image under local perturbations. This problem has been addressed before in a generic setting through label consistency regularization, which enforces that the class probability predictions for an input image remain unchanged under various semantic-preserving perturbations. In this work, we incorporate consistency regularization into the vanilla semi-GAN to address this critical limitation. In particular, we present a new composite consistency regularization method which, in spirit, combines two well-known consistency-based techniques: Mean Teacher and Interpolation Consistency Training. We demonstrate the efficacy of our approach on two SSL image classification benchmark datasets, SVHN and CIFAR-10. Our experiments show that the semi-GAN with this composite consistency regularization significantly improves on the vanilla semi-GAN and achieves new state-of-the-art performance among GAN-based SSL approaches.
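To make the two ingredients named in the abstract concrete, here is a minimal NumPy sketch of a Mean Teacher term, an Interpolation Consistency Training (ICT) term, and one possible way to combine them. It is an illustration under stated assumptions, not the paper's formulation: the toy linear softmax classifier, the Gaussian input noise, the mean-squared-error distance, the plain sum in `composite_loss`, and every function and parameter name are hypothetical choices made for this example. The abstract does not specify how the two terms are weighted or how they attach to the semi-GAN discriminator.

```python
import numpy as np


def softmax(z):
    # Row-wise softmax with max subtraction for numerical stability.
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def predict(w, x):
    # Toy linear classifier (an assumption): x is (n, d), w is (d, k).
    return softmax(x @ w)


def ema_update(teacher_w, student_w, decay=0.99):
    # Mean Teacher: teacher weights track an exponential moving
    # average of the student weights.
    return decay * teacher_w + (1.0 - decay) * student_w


def mean_teacher_loss(student_w, teacher_w, x, rng, noise_std=0.1):
    # Student and teacher see independently perturbed copies of x;
    # their class probabilities are pulled together with MSE.
    x_student = x + rng.normal(0.0, noise_std, x.shape)
    x_teacher = x + rng.normal(0.0, noise_std, x.shape)
    diff = predict(student_w, x_student) - predict(teacher_w, x_teacher)
    return float(np.mean(diff ** 2))


def ict_loss(student_w, teacher_w, x1, x2, lam):
    # ICT: the student's prediction at an interpolated input should
    # match the same interpolation of the teacher's predictions.
    x_mix = lam * x1 + (1.0 - lam) * x2
    target = lam * predict(teacher_w, x1) + (1.0 - lam) * predict(teacher_w, x2)
    diff = predict(student_w, x_mix) - target
    return float(np.mean(diff ** 2))


def composite_loss(student_w, teacher_w, x1, x2, rng, lam, noise_std=0.1):
    # Hypothetical combination: an unweighted sum of the two terms.
    mt = mean_teacher_loss(student_w, teacher_w, x1, rng, noise_std)
    ict = ict_loss(student_w, teacher_w, x1, x2, lam)
    return mt + ict
```

In a training loop, a term like `composite_loss` would be evaluated on unlabeled batches and added to the classifier's other losses, with `ema_update` applied to the teacher after each student step. In ICT the mixing coefficient `lam` is typically drawn from a Beta distribution for each batch.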
