Consistencies and inconsistencies between model selection and link prediction in networks


Jun 28, 2018

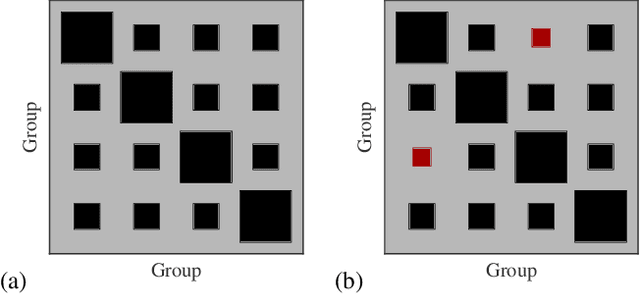

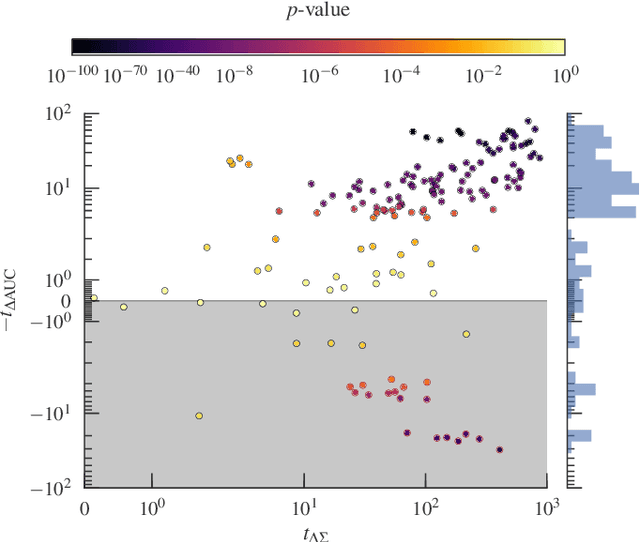

A principled approach to understanding network structures is to formulate generative models. Given a collection of models, however, a key outstanding task is to determine which one provides a more accurate description of the network at hand, discounting statistical fluctuations. This problem can be approached using two principled criteria that at first may seem equivalent: selecting the most plausible model in terms of its posterior probability, or selecting the model with the highest predictive performance in terms of identifying missing links. Here we show that while these two approaches yield consistent results in most cases, there are also notable instances where they do not, that is, where the most plausible model is not the most predictive. We show that in the latter case the improvement in predictive performance can in fact lead to overfitting, in both artificial and empirical settings. Furthermore, we show that, in general, predictive performance is higher when we average over collections of models that are individually less plausible than when we consider only the single most plausible model.
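To make the distinction between the two criteria concrete, the following is a minimal sketch, not the procedure used in the paper. It assumes three hypothetical models, each with a made-up log-evidence value and a made-up probability for one candidate missing link, and a uniform prior over models. It contrasts the prediction of the single most plausible model with the posterior-weighted average over all models. All function names and numbers are illustrative assumptions.

```python
import math

def posterior_weights(log_evidences):
    """Posterior model probabilities under a uniform prior (assumption).

    Subtracting the maximum before exponentiating avoids underflow.
    """
    m = max(log_evidences)
    unnorm = [math.exp(v - m) for v in log_evidences]
    total = sum(unnorm)
    return [u / total for u in unnorm]

def map_prediction(log_evidences, link_probs):
    """Link probability according to the single most plausible model."""
    best = max(range(len(log_evidences)), key=lambda i: log_evidences[i])
    return link_probs[best]

def averaged_prediction(log_evidences, link_probs):
    """Link probability averaged over models, weighted by posterior probability."""
    weights = posterior_weights(log_evidences)
    return sum(w * p for w, p in zip(weights, link_probs))

# Hypothetical toy values, not taken from the paper.
log_evidences = [-100.0, -100.5, -103.0]  # log P(network | model)
link_probs = [0.10, 0.60, 0.90]           # P(candidate link exists | model)

p_map = map_prediction(log_evidences, link_probs)
p_avg = averaged_prediction(log_evidences, link_probs)
print(f"most plausible model: {p_map:.3f}, model average: {p_avg:.3f}")
```

In this toy setting the averaged prediction is pulled away from the most plausible model's value by the less plausible models, which is the mechanism by which averaging can change, and according to the abstract generally improve, link-prediction performance.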
