Confidence Calibration for Domain Generalization under Covariate Shift


Apr 01, 2021

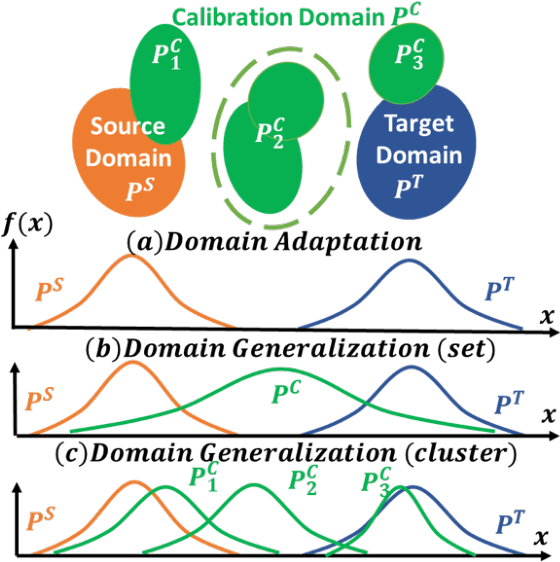

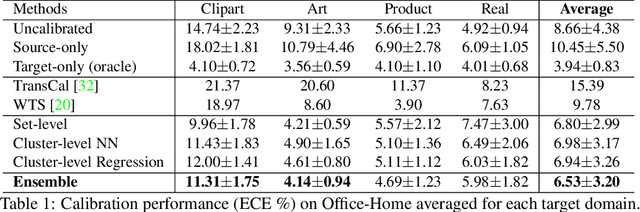

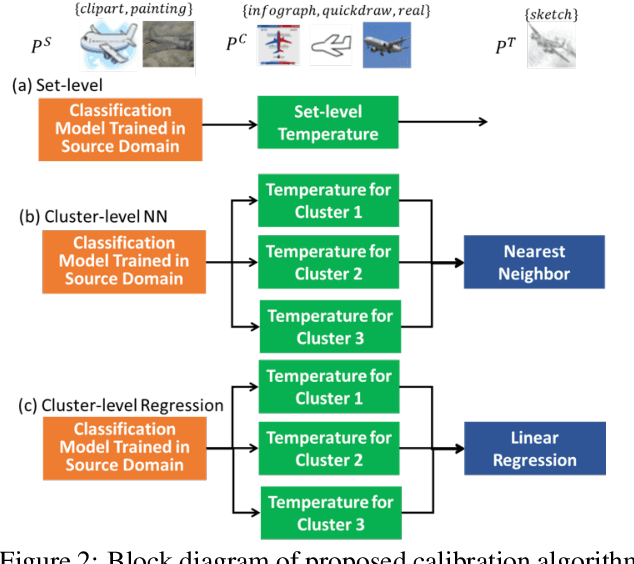

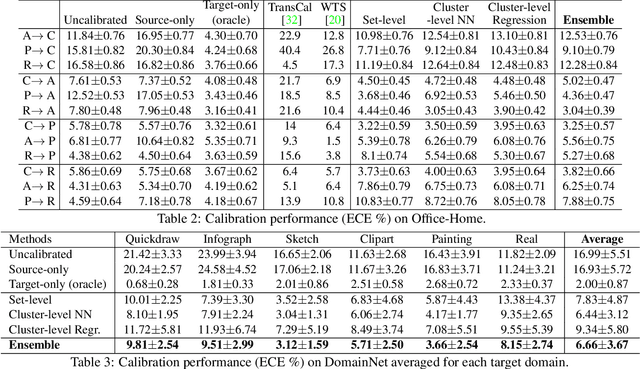

Existing calibration algorithms address covariate shift via unsupervised domain adaptation. However, these methods have two limitations: 1) they require unlabeled data from the target domain, which may not be available at calibration time in real-world applications, and 2) their performance depends heavily on the disparity between the source and target distributions. To address both limitations, we present novel calibration solutions via domain generalization which, to the best of our knowledge, are the first of their kind. Our core idea is to leverage multiple calibration domains to reduce the effective distribution disparity between the target and calibration domains, improving calibration transfer without needing any data from the target domain. We provide theoretical justification and empirical results to demonstrate the effectiveness of the proposed algorithms. Compared against state-of-the-art calibration methods designed for domain adaptation, we observe a decrease of 8.86 percentage points in expected calibration error, or equivalently an increase of 35 percentage points in improvement ratio, for multi-class classification on the Office-Home dataset.
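For readers unfamiliar with the headline metric, the following is a minimal sketch of expected calibration error (ECE) as it is commonly computed: predictions are grouped into equal-width confidence bins, and the gap between accuracy and mean confidence in each bin is averaged, weighted by bin size. The function name `expected_calibration_error`, the default of 10 bins, and the equal-width binning scheme are assumptions made for illustration; the abstract does not state which ECE variant the authors use.

```python
from typing import Sequence


def expected_calibration_error(
    confidences: Sequence[float],
    correct: Sequence[bool],
    n_bins: int = 10,
) -> float:
    """Equal-width-bin ECE: size-weighted mean of |accuracy - confidence| per bin.

    Assumes every confidence lies in [0, 1]. Illustrative only; not the
    authors' implementation.
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    n = len(confidences)
    if n == 0:
        raise ValueError("need at least one prediction")

    # Per-bin running totals: summed confidence, number correct, number of items.
    conf_sum = [0.0] * n_bins
    hit_sum = [0] * n_bins
    count = [0] * n_bins
    for c, ok in zip(confidences, correct):
        # Bin b covers [b/n_bins, (b+1)/n_bins); a confidence of 1.0 goes to the last bin.
        b = min(int(c * n_bins), n_bins - 1)
        conf_sum[b] += c
        hit_sum[b] += int(ok)
        count[b] += 1

    ece = 0.0
    for b in range(n_bins):
        if count[b] == 0:
            continue  # empty bins contribute nothing
        avg_conf = conf_sum[b] / count[b]
        accuracy = hit_sum[b] / count[b]
        ece += (count[b] / n) * abs(accuracy - avg_conf)
    return ece
```

As a usage example, `expected_calibration_error([0.9, 0.9, 0.9, 0.9], [True, True, True, False])` describes a model that claims 90% confidence but is right 75% of the time, so all of the error comes from a single overconfident bin. A reported reduction in ECE in percentage points corresponds to this quantity multiplied by 100.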
