Compatible features for Monotonic Policy Improvement


Oct 30, 2019

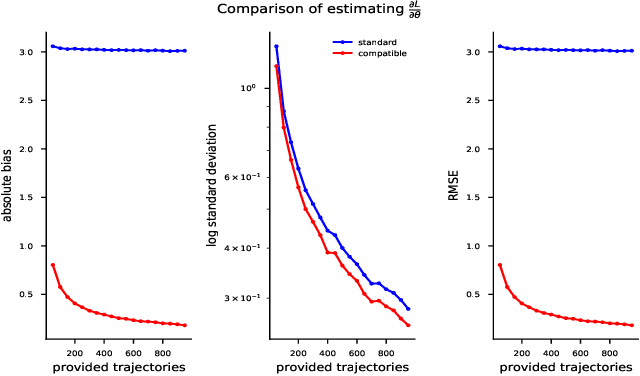

Recent policy optimization approaches have achieved substantial empirical success by constructing surrogate optimization objectives. The Approximate Policy Iteration objective (Schulman et al., 2015a; Kakade and Langford, 2002) has become a standard optimization target for reinforcement learning problems. Using this objective in practice requires an estimator of the advantage function. Policy optimization methods such as those proposed in Schulman et al. (2015b) estimate the advantages using a parametric critic. In this work we establish conditions under which the parametric approximation of the critic does not bias the updates of the surrogate objective. These results hold for a general class of parametric policies, including deep neural networks. The result is analogous to the compatible features derived for the original Policy Gradient Theorem (Sutton et al., 1999). As a consequence, we also identify a previously unknown bias that current state-of-the-art policy optimization algorithms (Schulman et al., 2015a, 2017) introduce by not employing these compatible features.
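For readers unfamiliar with the compatible features of Sutton et al. (1999) that the abstract refers to, the following is a minimal sketch of that classical result only, not of the new conditions this paper derives for the surrogate objective. It assumes a single-state problem (a bandit) with a softmax policy and known action values; the function names, `theta`, and `q` are hypothetical and chosen purely for illustration. The critic is linear in the features `grad log pi(a)` and is fitted by least squares weighted by the policy, and the policy gradient computed with that critic is compared against the exact one.

```python
import numpy as np

def softmax(theta):
    # Numerically stable softmax over action preferences.
    z = np.exp(theta - theta.max())
    return z / z.sum()

def compatible_features(pi):
    # Row a holds grad_theta log pi(a) = e_a - pi for a softmax policy.
    return np.eye(len(pi)) - pi

def policy_gradients(theta, q):
    # Assumption: one state, exact action values q (illustrative setup).
    pi = softmax(theta)
    phi = compatible_features(pi)             # shape (K, K)
    true_grad = phi.T @ (pi * q)              # sum_a pi(a) phi_a q(a)
    fisher = phi.T @ (pi[:, None] * phi)      # sum_a pi(a) phi_a phi_a^T
    # Policy-weighted least-squares fit of the critic f_w(a) = w^T phi_a.
    # The Fisher matrix is singular here, so a pseudo-inverse is used.
    w = np.linalg.pinv(fisher) @ true_grad
    critic = phi @ w
    critic_grad = phi.T @ (pi * critic)       # gradient using the critic
    return true_grad, critic_grad

theta = np.array([0.2, -0.5, 1.0])
q = np.array([1.0, 0.0, 2.0])
true_grad, critic_grad = policy_gradients(theta, q)
print(np.allclose(true_grad, critic_grad))
```

In this toy setting the two gradients agree even though the fitted critic need not equal `q` itself, which is the sense in which a compatible critic introduces no bias. A critic built on other features would not carry this guarantee in general.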
