Compactness Score: A Fast Filter Method for Unsupervised Feature Selection


Jan 31, 2022

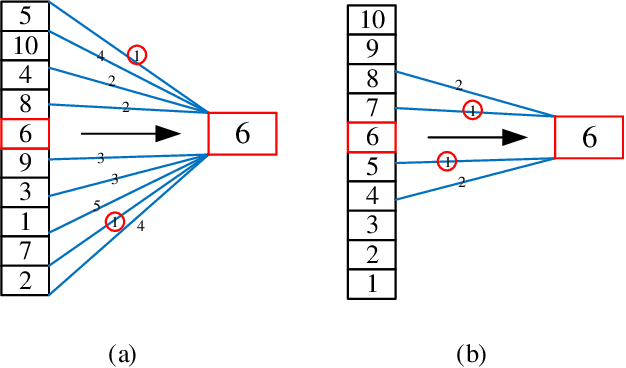

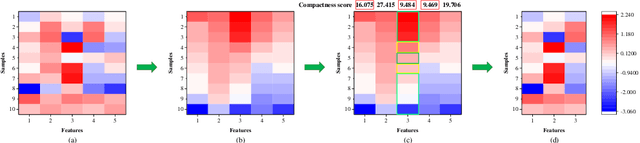

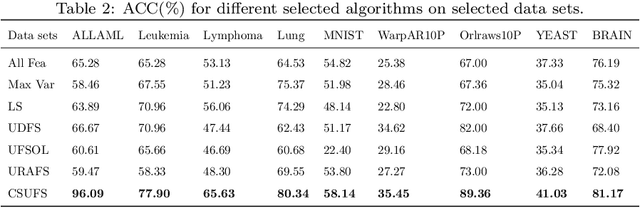

Feature selection is an important research topic in feature engineering, where the goal is to select "excellent" features from a set of candidates. It serves several purposes, such as dimensionality reduction, improved model effectiveness, and improved model performance. With the growth of the information age, huge amounts of high-dimensional data are generated every day, and labeling such data takes great effort and time. Various algorithms have therefore been proposed to handle such data, among which unsupervised feature selection has attracted tremendous interest. In many classification tasks, researchers have found that samples from the same class are usually close to each other; thus, local compactness is of great importance when evaluating a feature. In this manuscript, we propose a fast unsupervised feature selection method, named Compactness Score (CSUFS), to select desired features. To demonstrate its efficiency and accuracy, we perform extensive experiments on several data sets. The effectiveness and superiority of our method are then shown on clustering tasks, where performance is measured by several well-known evaluation metrics and efficiency by the corresponding running time. The simulation results indicate that our proposed algorithm is more accurate and efficient than existing algorithms.
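The abstract does not give the scoring formula, so the following is only a minimal sketch of what a filter based on local compactness could look like, not the CSUFS definition from the paper. It assumes (our assumption) that a feature is "compact" when each sample's k nearest neighbours, measured along that feature alone, lie close to it. The function names `local_compactness_scores` and `select_features`, the parameter `k`, and the min-max scaling step are all hypothetical choices made for illustration.

```python
import numpy as np


def local_compactness_scores(X, k=5):
    """Hypothetical per-feature score (NOT the paper's formula).

    For each feature, average the distance from every sample to its k
    nearest neighbours measured along that feature only. Lower scores
    mean the feature is locally more compact.
    """
    X = np.asarray(X, dtype=float)
    n, d = X.shape
    if not 1 <= k < n:
        raise ValueError("k must satisfy 1 <= k < number of samples")

    # Min-max scale each feature so scores are comparable across features.
    lo = X.min(axis=0)
    span = X.max(axis=0) - lo
    constant = span == 0
    span[constant] = 1.0  # avoid division by zero for constant features
    Z = (X - lo) / span

    scores = np.empty(d)
    for j in range(d):
        col = Z[:, j]
        # Pairwise 1-D distances; O(n^2) memory, fine for a small sketch.
        dist = np.abs(col[:, None] - col[None, :])
        dist.sort(axis=1)  # column 0 is each sample's distance to itself
        scores[j] = dist[:, 1:k + 1].mean()

    # A constant feature is trivially "compact" but carries no information,
    # so this sketch ranks it last.
    scores[constant] = np.inf
    return scores


def select_features(X, n_features, k=5):
    """Return the indices of the n_features lowest-scoring features."""
    scores = local_compactness_scores(X, k=k)
    return np.argsort(scores)[:n_features]
```

As a filter method, this kind of scoring ranks each feature independently and needs no labels or model training. The pairwise-distance loop here is quadratic in the number of samples and says nothing about the running time reported for CSUFS.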
