Combinations of Adaptive Filters


Dec 22, 2021

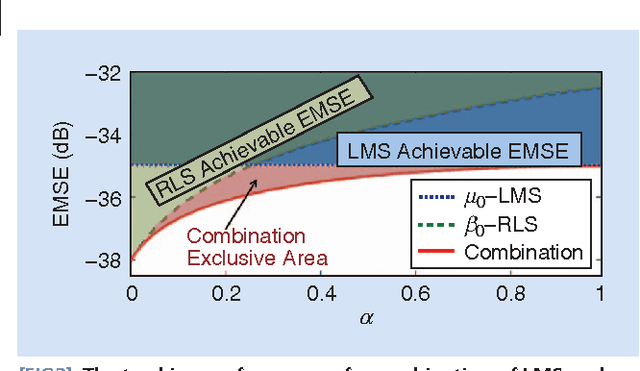

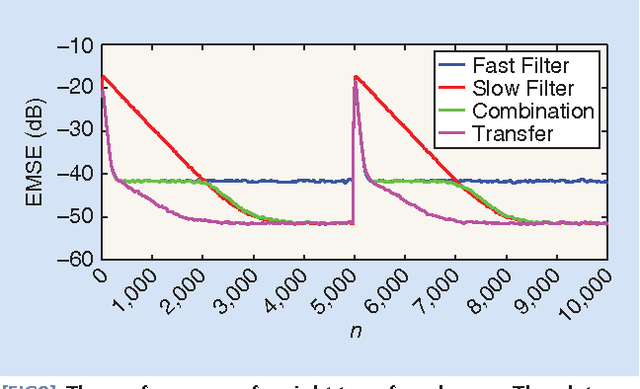

Adaptive filters are at the core of many signal processing applications, ranging from acoustic noise suppression, echo cancellation, array beamforming, and channel equalization to more recent sensor network applications in surveillance, target localization, and tracking. A trending approach in this direction is to resort to in-network distributed processing, in which individual nodes implement adaptation rules and diffuse their estimates across the network. When the a priori knowledge about the filtering scenario is limited or imprecise, selecting the most adequate filter structure and adjusting its parameters becomes a challenging task, and erroneous choices can lead to inadequate performance. To address this difficulty, one useful approach is to rely on combinations of adaptive structures. The combination of adaptive filters exploits, to some extent, the same divide-and-conquer principle that has been successfully exploited by the machine-learning community (e.g., in bagging or boosting). In particular, the problem of combining the outputs of several learning algorithms (mixture of experts) has been studied in the computational learning field from a different perspective: rather than studying the expected performance of the mixture, deterministic bounds are derived that apply to individual sequences and therefore reflect worst-case scenarios. These bounds require assumptions different from those typically used in adaptive filtering, which is the focus of this overview article. We review the key ideas and principles behind these combination schemes, with emphasis on design rules. We also illustrate their performance with a variety of examples.
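
The abstract does not commit to a particular combination rule, so the following is only an illustrative sketch of one commonly used scheme: a convex combination of two LMS filters, one with a large step size (fast tracking) and one with a small step size (low steady-state error). The mixing weight is a sigmoid of an auxiliary parameter `a`, adapted by stochastic gradient descent on the squared error of the combined output. The system-identification setup, the function names (`lms_step`, `run_convex_combination`), the unknown system `w_true`, and all step sizes are assumptions chosen for illustration, not values taken from the article.

```python
import math
import random


def lms_step(w, x, d, mu):
    """One LMS update. Returns the a priori output and the updated weights."""
    y = sum(wi * xi for wi, xi in zip(w, x))
    e = d - y
    w_new = [wi + mu * e * xi for wi, xi in zip(w, x)]
    return y, w_new


def run_convex_combination(n_samples=5000, mu_fast=0.05, mu_slow=0.005,
                           mu_a=10.0, a_max=4.0, noise_std=0.1, seed=0):
    """Identify a hypothetical FIR system with two LMS filters and their
    convex combination. All parameter values are illustrative assumptions."""
    rng = random.Random(seed)
    w_true = [0.8, -0.5, 0.3, 0.1]   # hypothetical unknown system
    m = len(w_true)
    w_fast = [0.0] * m               # large step size: fast convergence
    w_slow = [0.0] * m               # small step size: low steady-state error
    a = 0.0                          # auxiliary parameter; lam = sigmoid(a)
    lam = 0.5
    x = [0.0] * m                    # tapped delay line (regressor)
    sq_err = {"fast": [], "slow": [], "combined": []}

    for _ in range(n_samples):
        # Shift a new white Gaussian input sample into the delay line.
        x = [rng.gauss(0.0, 1.0)] + x[:-1]
        d = sum(wi * xi for wi, xi in zip(w_true, x)) + rng.gauss(0.0, noise_std)

        # Each component filter adapts independently on its own error.
        y_fast, w_fast_new = lms_step(w_fast, x, d, mu_fast)
        y_slow, w_slow_new = lms_step(w_slow, x, d, mu_slow)

        # Convex combination of the two component outputs.
        lam = 1.0 / (1.0 + math.exp(-a))
        y = lam * y_fast + (1.0 - lam) * y_slow
        e = d - y

        # Gradient step on e^2 with respect to a, then clip a so that
        # lam never saturates and adaptation never stalls.
        a += mu_a * e * (y_fast - y_slow) * lam * (1.0 - lam)
        a = max(-a_max, min(a_max, a))

        w_fast, w_slow = w_fast_new, w_slow_new
        sq_err["fast"].append((d - y_fast) ** 2)
        sq_err["slow"].append((d - y_slow) ** 2)
        sq_err["combined"].append(e ** 2)

    return {"sq_err": sq_err, "lam": lam, "w_fast": w_fast, "w_slow": w_slow}


if __name__ == "__main__":
    result = run_convex_combination()
    tail = 1000
    for name, errs in result["sq_err"].items():
        print(name, sum(errs[-tail:]) / tail)
    print("final mixing weight:", result["lam"])
```

Parameterizing the mixing weight through a sigmoid keeps it inside (0, 1) without an explicit constraint, and clipping `a` is a simple guard against the weight saturating at either end. Other combination rules, such as affine combinations or combinations of more than two filters, would need a different update.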
