Collision Avoidance in Tightly-Constrained Environments without Coordination: a Hierarchical Control Approach


Nov 01, 2020

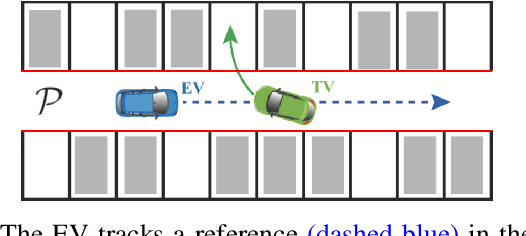

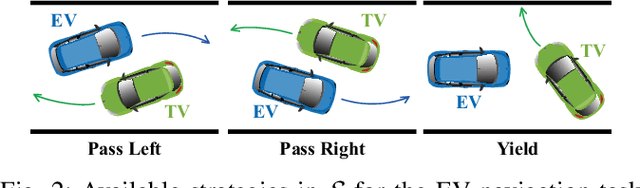

We present a hierarchical control approach for maneuvering an autonomous vehicle (AV) in a tightly-constrained environment where other moving AVs and/or human-driven vehicles are present. A two-level hierarchy is proposed: a high-level data-driven strategy predictor and a lower-level model-based feedback controller. The strategy predictor maps a high-dimensional environment encoding into a set of high-level strategies. Our approach uses data collected on an offline simulator to train a neural network model as the strategy predictor. Depending on the strategy selected online, a set of time-varying hyperplanes in the AV's motion space is generated and included in the lower-level controller. The latter is a Strategy-Guided Optimization-Based Collision Avoidance (SG-OBCA) algorithm, in which the strategy-dependent hyperplane constraints are used to drive a model-based receding horizon controller towards a predicted feasible area. The strategy also informs switching from the SG-OBCA control policy to a safety or emergency control policy. We demonstrate the effectiveness of the proposed data-driven hierarchical control framework in simulations and in experiments on a 1/10-scale autonomous car platform, where the strategy-guided approach outperforms a model predictive control baseline in both cases.
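To make the idea of strategy-dependent, time-varying hyperplanes concrete, the following is a minimal sketch and not the SG-OBCA algorithm itself. It assumes a hypothetical strategy set (`pass_left`, `pass_right`, `yield`), fixed normal vectors per strategy, and a given predicted obstacle path; none of these names or choices come from the paper. Each horizon step gets one half-space constraint on the ego position, and a candidate trajectory is checked against them. In the actual approach such constraints would enter a receding horizon optimization rather than a feasibility check.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class HalfSpace:
    """Constraint a . p >= b on the ego position p = (x, y)."""
    a: Point
    b: float

    def satisfied(self, p: Point) -> bool:
        return self.a[0] * p[0] + self.a[1] * p[1] >= self.b


# Hypothetical strategy set: each strategy fixes the side of the obstacle
# on which the ego vehicle should stay (assumption for illustration only).
STRATEGY_NORMALS = {
    "pass_left": (0.0, 1.0),    # stay above the obstacle in y
    "pass_right": (0.0, -1.0),  # stay below the obstacle in y
    "yield": (-1.0, 0.0),       # stay behind the obstacle in x
}


def strategy_hyperplanes(strategy: str,
                         obstacle_path: List[Point],
                         margin: float) -> List[HalfSpace]:
    """Build one half-space per horizon step from the predicted obstacle path."""
    a = STRATEGY_NORMALS[strategy]
    planes = []
    for ox, oy in obstacle_path:
        # Shift the hyperplane `margin` away from the obstacle along the normal.
        b = a[0] * ox + a[1] * oy + margin
        planes.append(HalfSpace(a, b))
    return planes


def trajectory_feasible(trajectory: List[Point],
                        planes: List[HalfSpace]) -> bool:
    """Check a candidate ego trajectory against the per-step constraints."""
    return all(h.satisfied(p) for h, p in zip(planes, trajectory))


# Obstacle predicted to move along the x-axis over a 3-step horizon.
obstacle_path = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
planes = strategy_hyperplanes("pass_left", obstacle_path, margin=0.5)
left_ok = trajectory_feasible([(0.0, 1.0), (1.0, 1.0), (2.0, 1.0)], planes)
collide = trajectory_feasible(obstacle_path, planes)
```

Because the hyperplanes are rebuilt from the obstacle prediction at every step, they move with the obstacle over the horizon, which is the sense in which they are time-varying in this sketch.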
