Code-switching Language Modeling With Bilingual Word Embeddings: A Case Study for Egyptian Arabic-English

Paper and Code

Sep 24, 2019

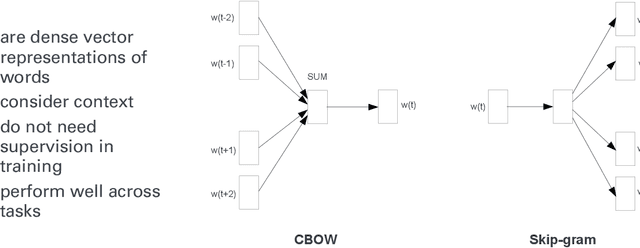

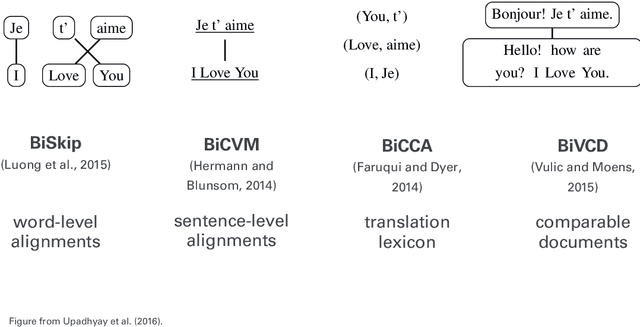

Code-switching (CS) is a widespread phenomenon among bilingual and multilingual societies. The lack of CS resources hinders the performance of many NLP tasks. In this work, we explore the potential use of bilingual word embeddings for code-switching (CS) language modeling (LM) in the low resource Egyptian Arabic-English language. We evaluate different state-of-the-art bilingual word embeddings approaches that require cross-lingual resources at different levels and propose an innovative but simple approach that jointly learns bilingual word representations without the use of any parallel data, relying only on monolingual and a small amount of CS data. While all representations improve CS LM, ours performs the best and improves perplexity 33.5% relative over the baseline.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge