Code Integrity Attestation for PLCs using Black Box Neural Network Predictions

Paper and Code

Jun 15, 2021

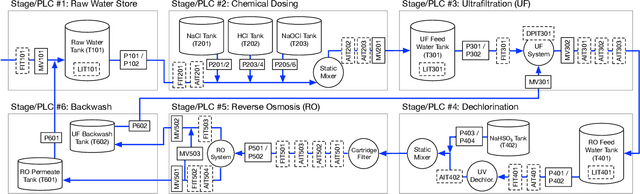

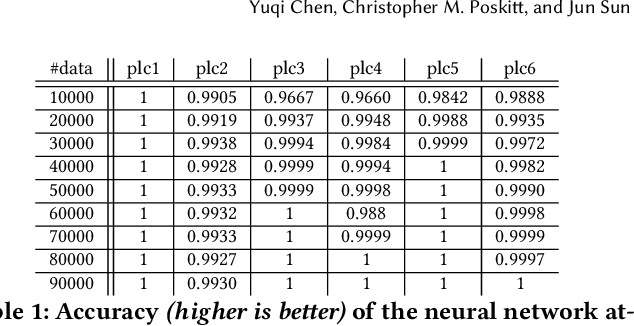

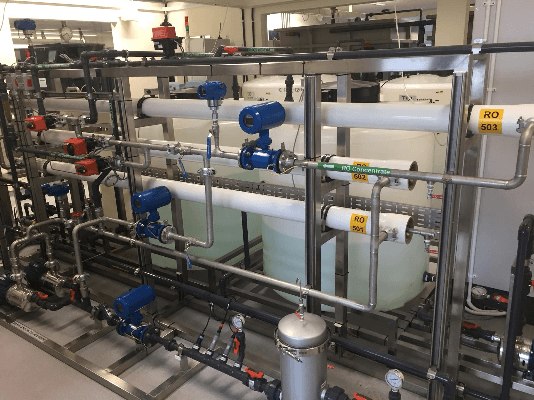

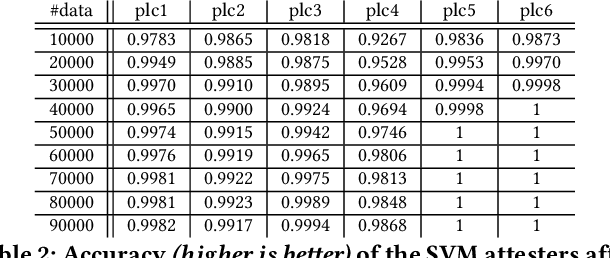

Cyber-physical systems (CPSs) are widespread in critical domains, and significant damage can be caused if an attacker is able to modify the code of their programmable logic controllers (PLCs). Unfortunately, traditional techniques for attesting code integrity (i.e. verifying that it has not been modified) rely on firmware access or roots-of-trust, neither of which proprietary or legacy PLCs are likely to provide. In this paper, we propose a practical code integrity checking solution based on privacy-preserving black box models that instead attest the input/output behaviour of PLC programs. Using faithful offline copies of the PLC programs, we identify their most important inputs through an information flow analysis, execute them on multiple combinations to collect data, then train neural networks able to predict PLC outputs (i.e. actuator commands) from their inputs. By exploiting the black box nature of the model, our solution maintains the privacy of the original PLC code and does not assume that attackers are unaware of its presence. The trust instead comes from the fact that it is extremely hard to attack the PLC code and neural networks at the same time and with consistent outcomes. We evaluated our approach on a modern six-stage water treatment plant testbed, finding that it could predict actuator states from PLC inputs with near-100% accuracy, and thus could detect all 120 effective code mutations that we subjected the PLCs to. Finally, we found that it is not practically possible to simultaneously modify the PLC code and apply discreet adversarial noise to our attesters in a way that leads to consistent (mis-)predictions.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge