Closed-Loop Neural Interfaces with Embedded Machine Learning


Oct 21, 2020

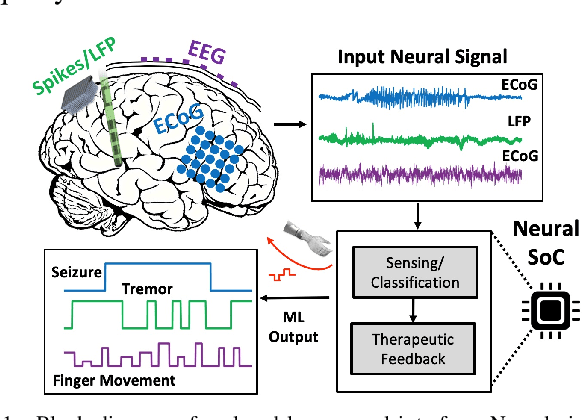

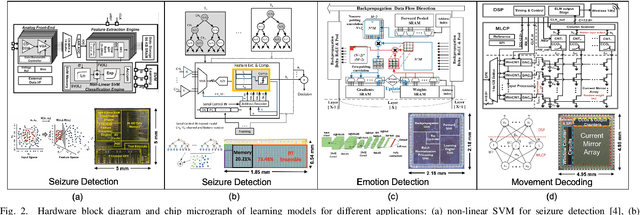

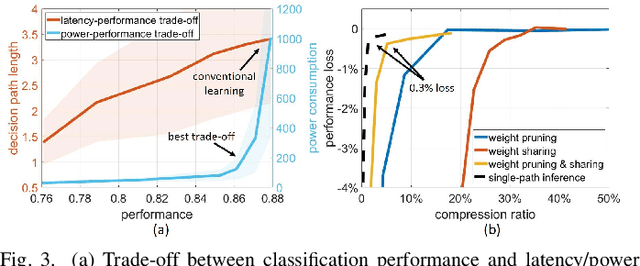

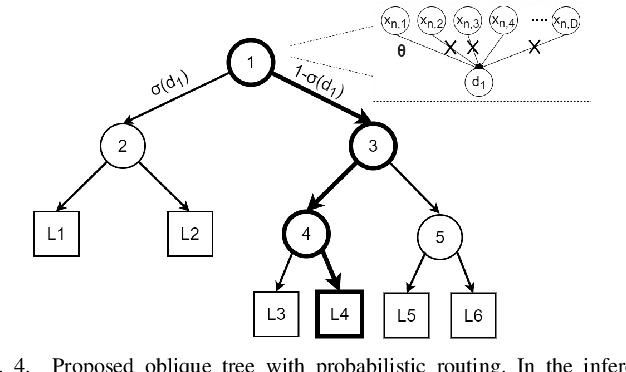

Neural interfaces capable of multi-site electrical recording, on-site signal classification, and closed-loop therapy are critical for the diagnosis and treatment of neurological disorders. However, deploying machine learning algorithms on low-power neural devices is challenging because these devices operate under tight constraints on computational and memory resources. In this paper, we review recent developments in embedding machine learning in neural interfaces, with a focus on design trade-offs and hardware efficiency. We also present our optimized tree-based model for low-power and memory-efficient classification of neural signals in brain implants. Using energy-aware learning and model compression, we show that the proposed oblique trees can outperform conventional machine learning models in applications such as seizure or tremor detection and motor decoding.
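To make the term "oblique tree" concrete, here is a minimal sketch of inference in such a tree, where each internal node thresholds a weighted sum of several features rather than a single feature. This is not the authors' model. The `ObliqueNode` class, the `predict` function, the example weights, and the multiply-accumulate counter are all hypothetical, chosen only to illustrate why sparse node weights can reduce the work done per classification.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass
class ObliqueNode:
    """One node of an oblique decision tree (hypothetical structure)."""
    weights: Sequence[float] = ()       # one weight per input feature
    threshold: float = 0.0              # go left if weighted sum <= threshold
    left: Optional["ObliqueNode"] = None
    right: Optional["ObliqueNode"] = None
    label: Optional[int] = None         # set only on leaf nodes


def predict(node: ObliqueNode, features: Sequence[float]) -> Tuple[int, int]:
    """Walk the tree; return (class label, multiply-accumulates performed)."""
    macs = 0
    while node.label is None:
        score = 0.0
        for w, x in zip(node.weights, features):
            if w != 0.0:                # zero weights cost nothing
                score += w * x
                macs += 1
        node = node.left if score <= node.threshold else node.right
    return node.label, macs


# Illustrative tree over three made-up features (assumed values, not from the paper).
tree = ObliqueNode(
    weights=[0.8, 0.0, -0.5],
    threshold=0.2,
    left=ObliqueNode(label=0),
    right=ObliqueNode(
        weights=[0.0, 1.0, 0.0],
        threshold=1.5,
        left=ObliqueNode(label=0),
        right=ObliqueNode(label=1),
    ),
)

label, macs = predict(tree, [1.0, 2.0, 0.5])
print(label, macs)
```

Only the nodes along one root-to-leaf path are evaluated, so the cost of a prediction depends on tree depth and on how many nonzero weights those nodes carry, not on the total size of the model.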
