Classification Uncertainty of Deep Neural Networks Based on Gradient Information


Jul 26, 2018

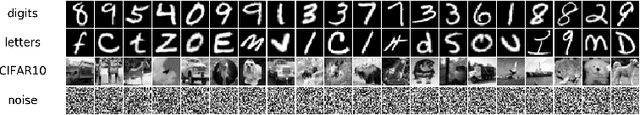

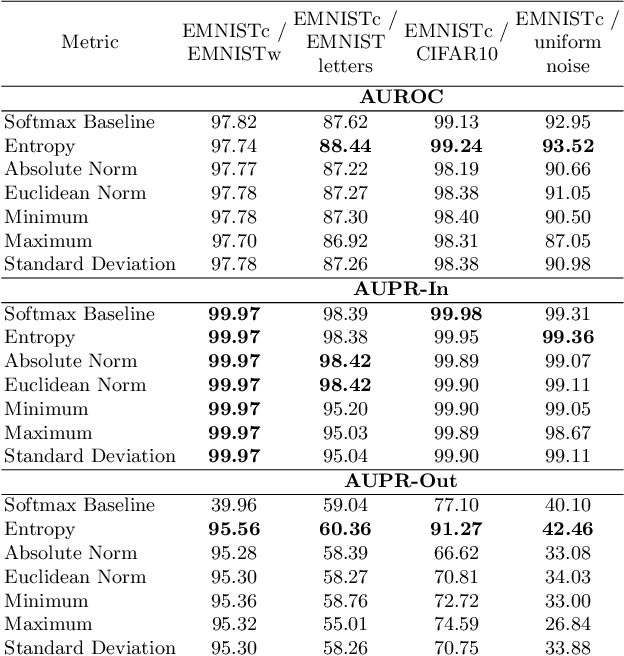

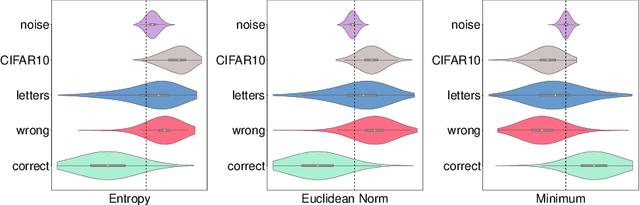

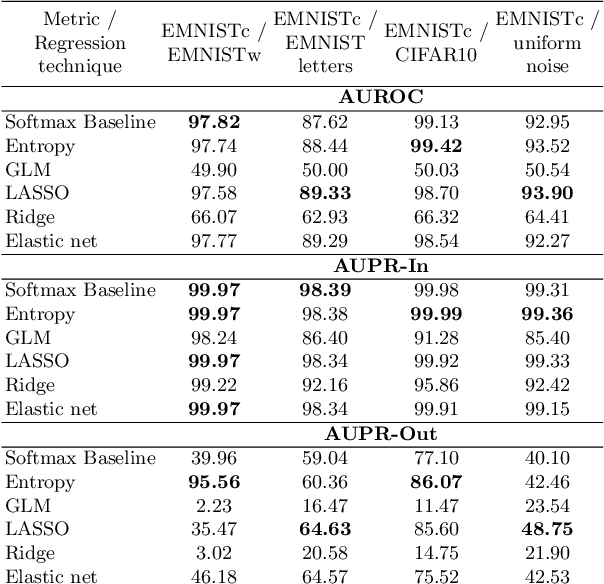

We study uncertainty quantification for Convolutional Neural Networks (CNNs) based on gradient metrics. Unlike the classical softmax entropy, such metrics gather information from all layers of the CNN. We consider meta classification, i.e. the task of classifying predictions as correct or incorrect without knowing the actual label. On the EMNIST digits data set, several gradient metrics achieve the same meta classification accuracy as entropy thresholding. We also apply meta classification to unknown concepts (out-of-distribution samples), namely EMNIST/Omniglot letters, CIFAR10 and noise, and demonstrate that meta classification rates for unknown concepts can be increased when entropy is used together with several gradient-based metrics as input quantities for a meta classifier. Meta classifiers trained only on the uncertainty metrics of known concepts, i.e. EMNIST digits, usually do not perform equally well for all unknown concepts. If, however, we allow the meta classifier to be trained on uncertainty metrics for some out-of-distribution samples, meta classification for concepts remote from EMNIST digits (then termed known unknowns) can be improved considerably.
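To make the two kinds of metric concrete, the following is a minimal sketch and not the authors' implementation. It assumes that a gradient metric is the norm of the gradient of the negative log-likelihood, with the predicted class used in place of the unknown label, and it uses a tiny two-layer ReLU network in NumPy as a stand-in for a CNN. The function name `uncertainty_metrics` and the returned keys are hypothetical.

```python
import numpy as np


def softmax(z):
    # Subtract the maximum for numerical stability.
    e = np.exp(z - z.max())
    return e / e.sum()


def uncertainty_metrics(x, W1, b1, W2, b2):
    """Softmax entropy and per-layer gradient norms for a single input x.

    Assumption: the loss is -log p[y_hat], where y_hat is the predicted
    class, so no ground-truth label is needed.
    """
    # Forward pass through a two-layer ReLU network (stand-in for a CNN).
    h_pre = W1 @ x + b1
    h = np.maximum(h_pre, 0.0)
    p = softmax(W2 @ h + b2)
    y_hat = int(np.argmax(p))

    # Classical baseline: entropy of the softmax output.
    entropy = float(-np.sum(p * np.log(p + 1e-12)))

    # Backward pass for loss = -log p[y_hat].
    d_logits = p.copy()
    d_logits[y_hat] -= 1.0                 # dL/dlogits = p - onehot(y_hat)
    grad_W2 = np.outer(d_logits, h)        # gradient w.r.t. output layer weights
    d_h = (W2.T @ d_logits) * (h_pre > 0)  # backpropagate through the ReLU
    grad_W1 = np.outer(d_h, x)             # gradient w.r.t. first layer weights

    return {
        "predicted_class": y_hat,
        "entropy": entropy,
        "grad_norm_layer1": float(np.linalg.norm(grad_W1)),
        "grad_norm_layer2": float(np.linalg.norm(grad_W2)),
    }


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.normal(size=4)
    W1, b1 = rng.normal(size=(8, 4)), np.zeros(8)
    W2, b2 = rng.normal(size=(3, 8)), np.zeros(3)
    print(uncertainty_metrics(x, W1, b1, W2, b2))
```

Under these assumptions, a confident prediction yields small entropy and small gradient norms, while an uncertain one yields larger values. A meta classifier could then take the entropy alone (thresholding) or the full vector of metrics as its input.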
