Classification by Re-generation: Towards Classification Based on Variational Inference


Sep 10, 2018

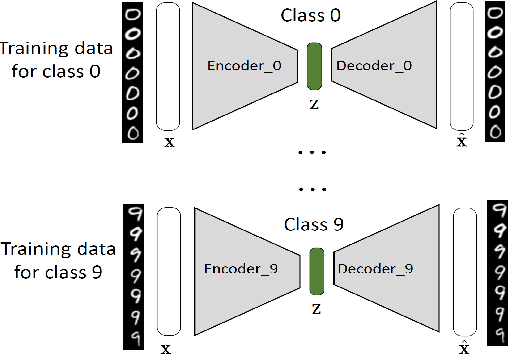

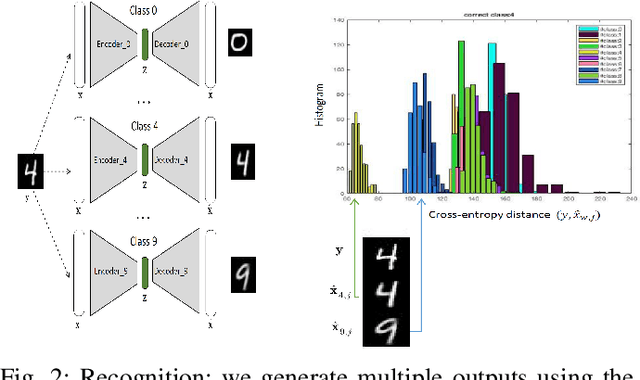

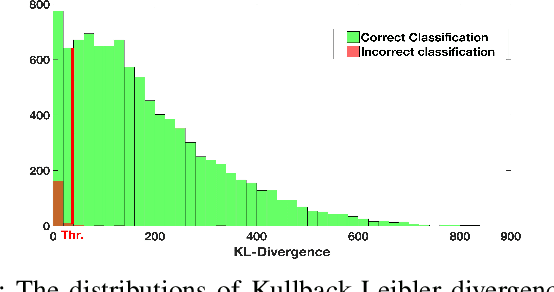

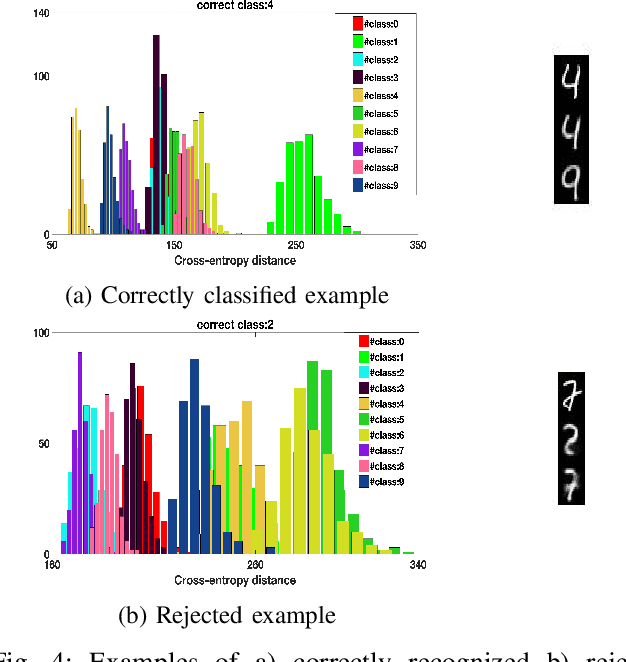

As Deep Neural Networks (DNNs) are considered the state of the art in many classification tasks, the question of their semantic generalization has been raised. To address the semantic interpretability of learned features, we introduce a novel idea of classification by re-generation based on the variational autoencoder (VAE), in which a separate encoder-decoder pair is trained for each class. Moreover, the proposed architecture overcomes the scalability issue of current DNNs: adding a new class does not require re-training the whole network, because a model for that class can be trained separately. We also introduce a criterion based on the Kullback-Leibler divergence to reject doubtful examples. This rejection criterion should improve trust in the obtained results and can be further exploited to reject adversarial examples.
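The decision procedure described in the abstract can be illustrated with a minimal sketch. The abstract does not spell out the exact decision rule, so the following is an assumption: the predicted class is the one whose encoder-decoder pair reconstructs the input with the lowest squared error, and the example is rejected when the KL divergence between that class's approximate posterior and a standard normal prior exceeds a threshold. The names `ClassVAE`, `toy_vae`, `classify_by_regeneration`, and `kl_threshold` are hypothetical, and `toy_vae` is a hand-built stand-in for a trained VAE, not a real model.

```python
import math
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Sequence, Tuple

Vector = Sequence[float]


def kl_to_standard_normal(mu: Vector, log_var: Vector) -> float:
    """Closed-form KL( N(mu, diag(exp(log_var))) || N(0, I) )."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, log_var))


@dataclass
class ClassVAE:
    """One encoder-decoder pair per class (hypothetical container)."""
    encode: Callable[[Vector], Tuple[List[float], List[float]]]  # x -> (mu, log_var)
    decode: Callable[[Vector], List[float]]                      # z -> reconstruction


def toy_vae(center: Vector) -> ClassVAE:
    """Stand-in for a trained per-class VAE (assumption, not a real model).

    Inputs near `center` get a small latent mean, hence a low KL term and a
    low reconstruction error; inputs far away get large values for both.
    """
    def encode(x: Vector) -> Tuple[List[float], List[float]]:
        mu = [xi - ci for xi, ci in zip(x, center)]
        return mu, [0.0] * len(center)  # unit variance in every latent dimension

    def decode(z: Vector) -> List[float]:
        return [ci + 0.5 * zi for ci, zi in zip(center, z)]

    return ClassVAE(encode, decode)


def classify_by_regeneration(
    x: Vector, models: Dict[str, ClassVAE], kl_threshold: float
) -> Tuple[Optional[str], Dict[str, Tuple[float, float]]]:
    """Return (label or None if rejected, per-class (recon_error, kl) scores)."""
    scores: Dict[str, Tuple[float, float]] = {}
    for label, vae in models.items():
        mu, log_var = vae.encode(x)
        x_hat = vae.decode(mu)  # decode the posterior mean for a deterministic score
        recon_error = sum((a - b) ** 2 for a, b in zip(x, x_hat))
        scores[label] = (recon_error, kl_to_standard_normal(mu, log_var))

    best = min(scores, key=lambda k: scores[k][0])  # lowest reconstruction error
    if scores[best][1] > kl_threshold:              # doubtful example: reject
        return None, scores
    return best, scores


# Adding a class only adds one entry; existing models are left untouched.
models = {"a": toy_vae([0.0, 0.0]), "b": toy_vae([4.0, 4.0])}
label_near_a, _ = classify_by_regeneration([0.2, -0.1], models, kl_threshold=2.0)
label_far, _ = classify_by_regeneration([10.0, 10.0], models, kl_threshold=2.0)
```

In this toy setup the first input lies close to class `a` and is accepted, while the second lies far from both class centers and is rejected by the KL threshold. With real VAEs the threshold would have to be calibrated on held-out data, which this sketch does not attempt.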
