Class prior estimation for positive-unlabeled learning when label shift occurs

Paper and Code

Feb 28, 2025

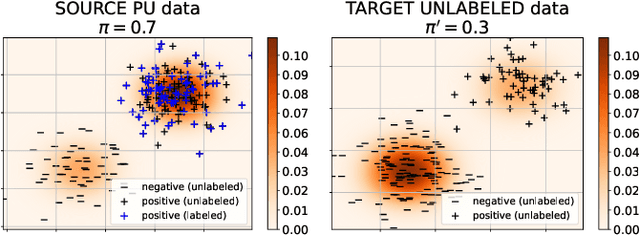

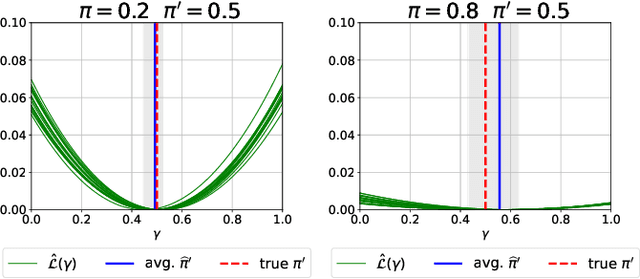

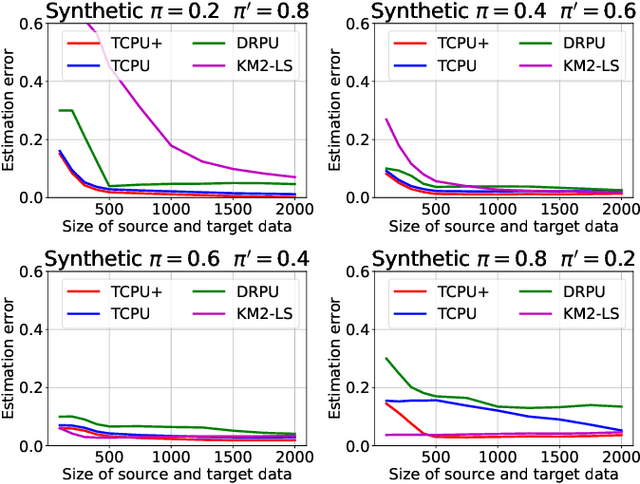

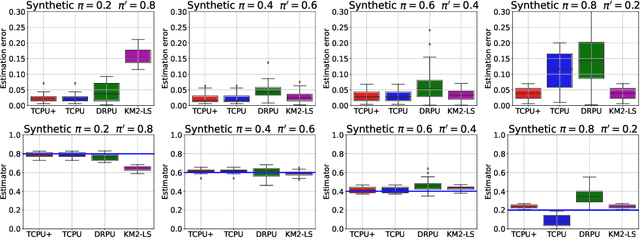

We study estimation of class prior for unlabeled target samples which is possibly different from that of source population. It is assumed that for the source data only samples from positive class and from the whole population are available (PU learning scenario). We introduce a novel direct estimator of class prior which avoids estimation of posterior probabilities and has a simple geometric interpretation. It is based on a distribution matching technique together with kernel embedding and is obtained as an explicit solution to an optimisation task. We establish its asymptotic consistency as well as a non-asymptotic bound on its deviation from the unknown prior, which is calculable in practice. We study finite sample behaviour for synthetic and real data and show that the proposal, together with a suitably modified version for large values of source prior, works on par or better than its competitors.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge