CIDER: Exploiting Hyperspherical Embeddings for Out-of-Distribution Detection

Paper and Code

Mar 08, 2022

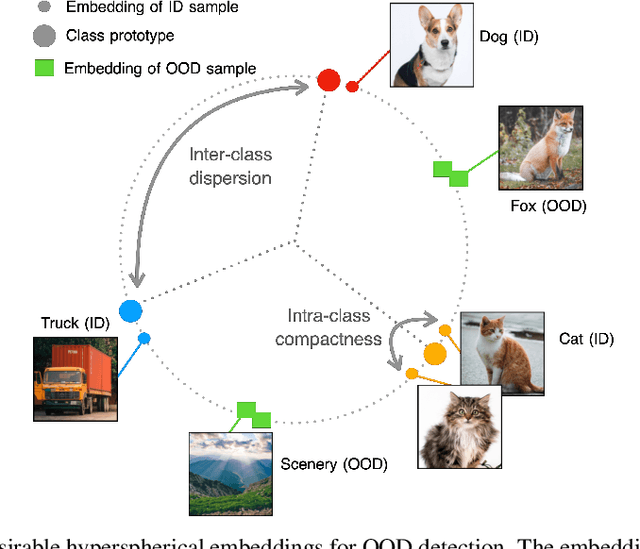

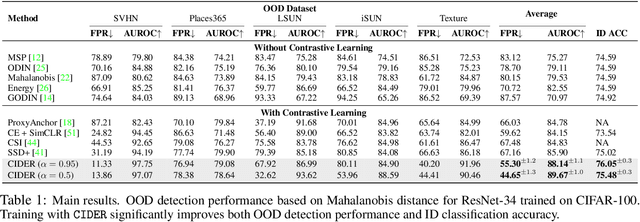

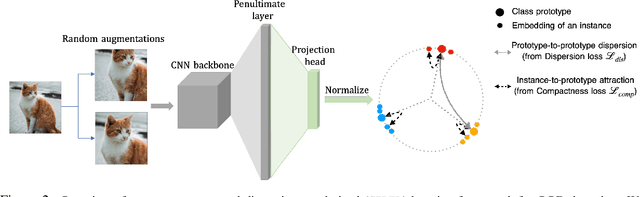

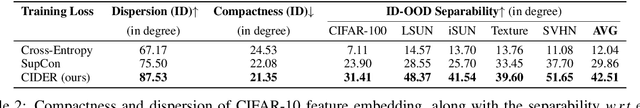

Out-of-distribution (OOD) detection is a critical task for reliable machine learning. Recent advances in representation learning have given rise to distance-based OOD detection, where test samples are flagged as OOD if they lie relatively far from the centroids or prototypes of the in-distribution (ID) classes. However, prior methods directly adopt off-the-shelf loss functions that suffice for classifying ID samples but are not designed with OOD detection in mind. In this paper, we propose CIDER, a simple and effective representation learning framework that exploits hyperspherical embeddings for OOD detection. CIDER jointly optimizes two losses to promote strong ID-OOD separability: (1) a dispersion loss that promotes large angular distances among different class prototypes, and (2) a compactness loss that encourages samples to be close to their class prototypes. We show that CIDER is effective under various settings and establishes state-of-the-art performance. On the hard OOD detection task of CIFAR-100 vs. CIFAR-10, our method substantially improves the AUROC by 14.20% compared to the embeddings learned by the cross-entropy loss.
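The two losses and the distance-based test described above can be illustrated with a minimal NumPy sketch. The abstract does not give the formulas, so the softmax-style forms below, the temperature `tau`, the function names, and the nearest-prototype angular-distance score are all assumptions chosen for illustration rather than the authors' implementation.

```python
import numpy as np

def l2_normalize(x, axis=-1, eps=1e-12):
    """Project vectors onto the unit hypersphere."""
    norm = np.linalg.norm(x, axis=axis, keepdims=True)
    return x / np.maximum(norm, eps)

def compactness_loss(z, labels, prototypes, tau=0.1):
    """Assumed form: cross-entropy over cosine similarities to the prototypes.

    Low when each unit-norm embedding z[i] is close to prototypes[labels[i]].
    """
    logits = z @ prototypes.T / tau                      # shape (N, C)
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -log_prob[np.arange(len(labels)), labels].mean()

def dispersion_loss(prototypes, tau=0.1):
    """Assumed form: log of the mean exponentiated similarity between
    each prototype and all *other* prototypes.

    Low when prototypes are far apart in angle.
    """
    num_classes = prototypes.shape[0]
    sim = prototypes @ prototypes.T / tau
    off_diagonal = ~np.eye(num_classes, dtype=bool)
    pairwise = np.exp(sim[off_diagonal]).reshape(num_classes, num_classes - 1)
    return np.log(pairwise.mean(axis=1)).mean()

def ood_score(z, prototypes):
    """Illustrative score: angle (radians) to the nearest class prototype.

    Larger values mean the sample is farther from every ID class.
    """
    cos = np.clip(z @ prototypes.T, -1.0, 1.0)
    return np.arccos(cos).min(axis=1)

# Toy example: three mutually orthogonal prototypes on the unit sphere.
prototypes = l2_normalize(np.eye(3))
z_id = prototypes.copy()                        # embeddings sitting on prototypes
labels = np.array([0, 1, 2])
z_far = l2_normalize(np.array([[1.0, 1.0, 1.0]]))  # equidistant from all classes

total = compactness_loss(z_id, labels, prototypes) + dispersion_loss(prototypes)
print("joint loss:", total)
print("ID scores:", ood_score(z_id, prototypes))
print("far-sample score:", ood_score(z_far, prototypes))
```

In this toy setup the sample that is equidistant from all prototypes receives a larger score than the samples sitting on a prototype, which is the behaviour a distance-based detector thresholds on. How the two losses are weighted against each other, and how prototypes are maintained during training, are left out here because the abstract does not specify them.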
