Characterizing Datasets for Social Visual Question Answering, and the New TinySocial Dataset

Paper and Code

Oct 08, 2020

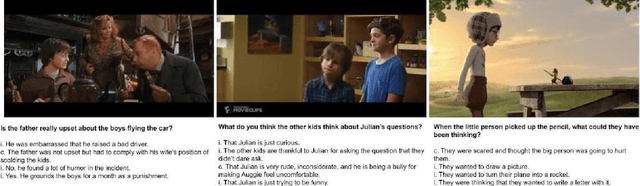

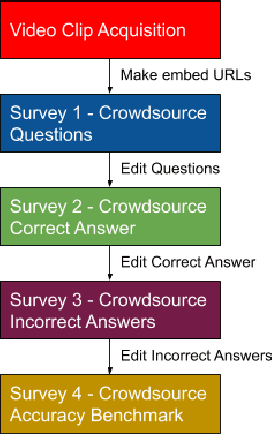

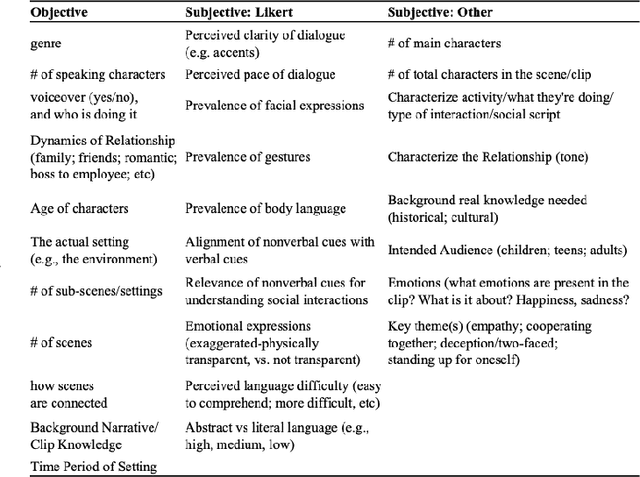

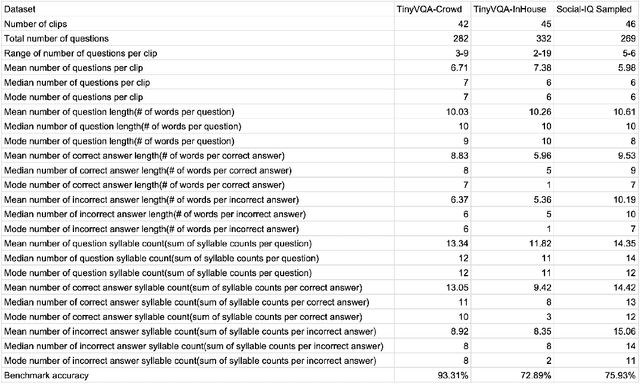

Modern social intelligence includes the ability to watch videos and answer questions about social and theory-of-mind-related content, e.g., for a scene in Harry Potter, "Is the father really upset about the boys flying the car?" Social visual question answering (social VQA) is emerging as a valuable methodology for studying social reasoning in both humans (e.g., children with autism) and AI agents. However, this problem space spans enormous variations in both videos and questions. We discuss methods for creating and characterizing social VQA datasets, including 1) crowdsourcing versus in-house authoring, including sample comparisons of two new datasets that we created (TinySocial-Crowd and TinySocial-InHouse) and the previously existing Social-IQ dataset; 2) a new rubric for characterizing the difficulty and content of a given video; and 3) a new rubric for characterizing question types. We close by describing how having well-characterized social VQA datasets will enhance the explainability of AI agents and can also inform assessments and educational interventions for people.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge