Characterizing and Taming Resolution in Convolutional Neural Networks

Paper and Code

Oct 28, 2021

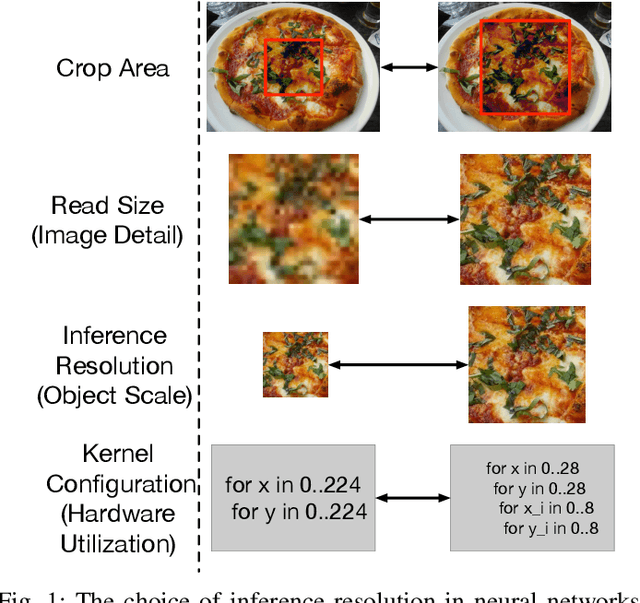

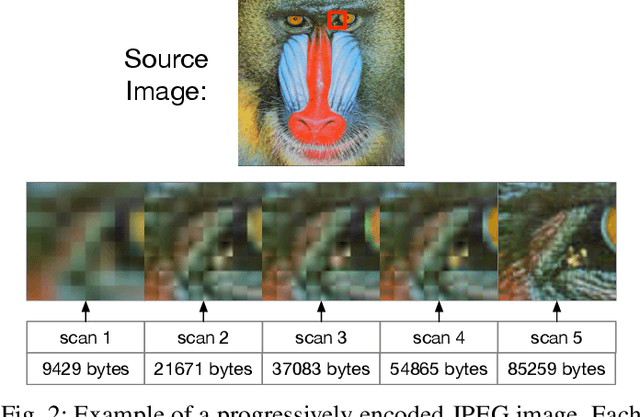

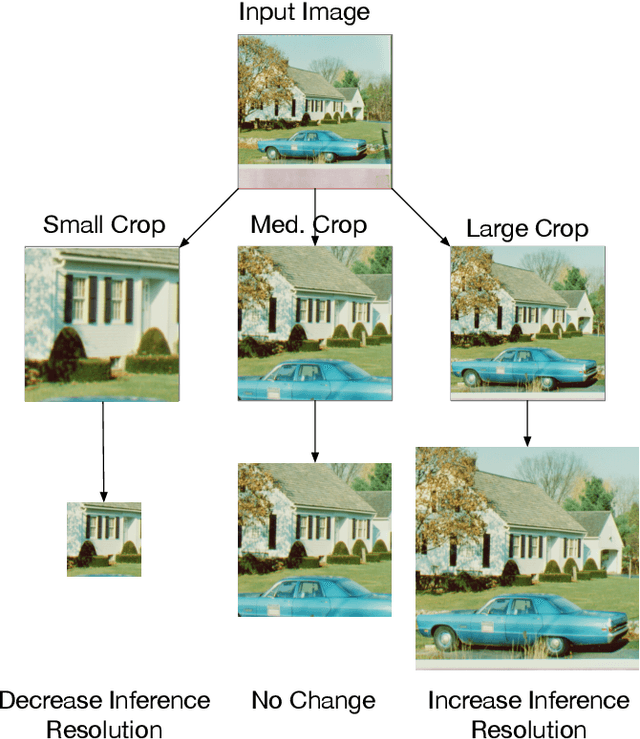

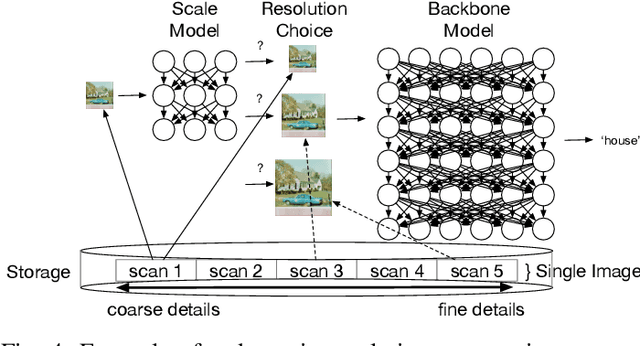

Image resolution has a significant effect on the accuracy and computational, storage, and bandwidth costs of computer vision model inference. These costs are exacerbated when scaling out models to large inference serving systems and make image resolution an attractive target for optimization. However, the choice of resolution inherently introduces additional tightly coupled choices, such as image crop size, image detail, and compute kernel implementation that impact computational, storage, and bandwidth costs. Further complicating this setting, the optimal choices from the perspective of these metrics are highly dependent on the dataset and problem scenario. We characterize this tradeoff space, quantitatively studying the accuracy and efficiency tradeoff via systematic and automated tuning of image resolution, image quality and convolutional neural network operators. With the insights from this study, we propose a dynamic resolution mechanism that removes the need to statically choose a resolution ahead of time.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge