CF2-Net: Coarse-to-Fine Fusion Convolutional Network for Breast Ultrasound Image Segmentation

Mar 23, 2020

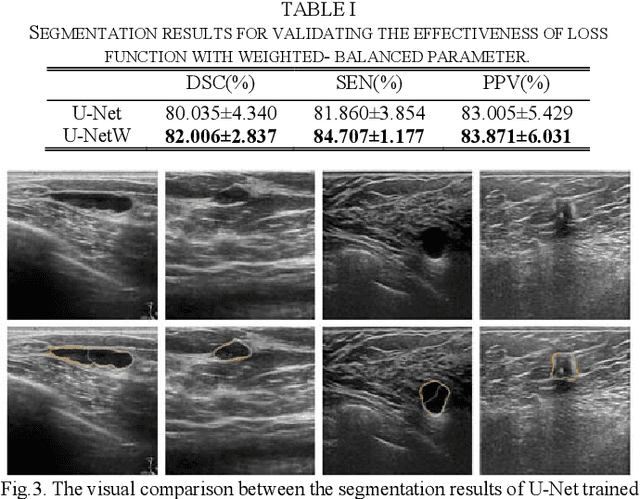

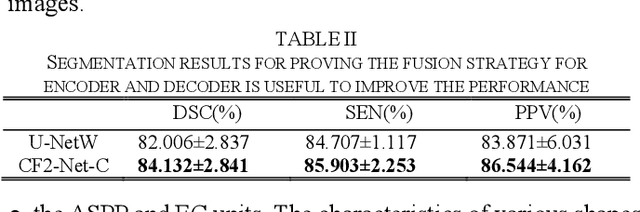

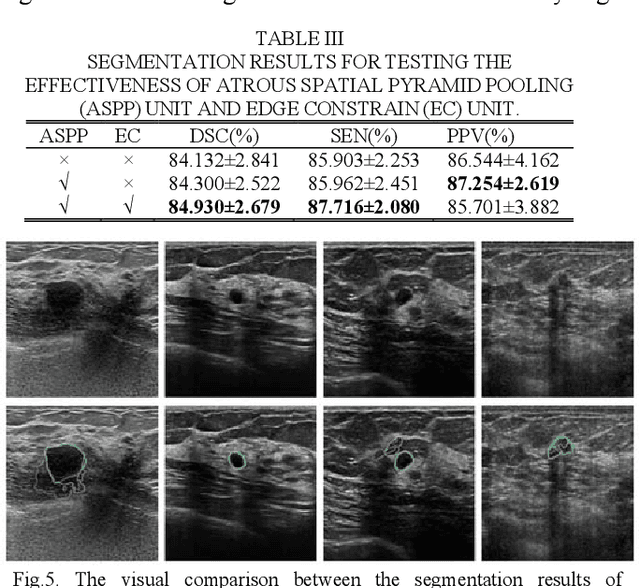

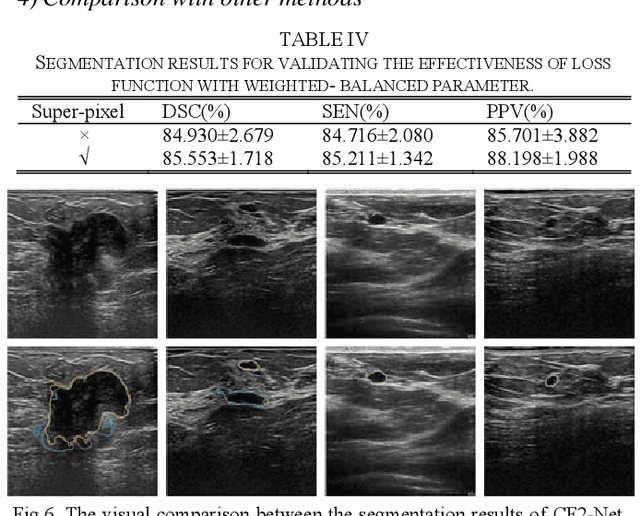

Breast ultrasound (BUS) image segmentation plays a crucial role in computer-aided diagnosis systems, which are regarded as a useful tool for increasing the accuracy of breast cancer diagnosis. Recently, many deep learning methods have been developed for BUS image segmentation and have shown advantages over conventional region-based, model-based, and traditional learning-based methods. However, previous deep learning methods typically use skip connections to concatenate encoder and decoder features, which may not fully fuse the coarse-to-fine features from the encoder and decoder. Because lesion structures and edges in BUS images are commonly blurred, it is difficult to learn discriminative structural and edge information, which reduces segmentation performance. To this end, we propose and evaluate a coarse-to-fine fusion convolutional network (CF2-Net) based on a novel feature integration strategy (forming an 'E'-like type) for BUS image segmentation. To enhance contours and provide structural information, we concatenate a super-pixel image and the original image as the input of CF2-Net. Meanwhile, to highlight the differences between lesion regions of variable sizes and relieve the class imbalance issue, we further design a weighted-balanced loss function to train CF2-Net effectively. The proposed CF2-Net was evaluated on an open dataset using four-fold cross-validation. The experimental results demonstrate that CF2-Net obtains state-of-the-art performance when compared with other deep learning-based methods.
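The abstract names three concrete ingredients: a two-channel input (original image plus super-pixel image), a weighted loss that counters class imbalance, and four-fold cross-validation. The following is a minimal NumPy sketch of those ideas, not the authors' implementation. The abstract does not specify the super-pixel algorithm or the loss formula, so `block_mean_image` is a crude tile-averaging stand-in for a real super-pixel method, `balanced_bce` is one assumed form of class-balanced binary cross-entropy, and all function names are hypothetical.

```python
import numpy as np


def block_mean_image(image, block=4):
    """Crude stand-in for a super-pixel image (assumption): replace each
    block x block tile with its mean intensity. A real pipeline would use
    a proper super-pixel algorithm; the abstract does not say which."""
    h, w = image.shape
    out = np.empty((h, w), dtype=np.float64)
    for r in range(0, h, block):
        for c in range(0, w, block):
            out[r:r + block, c:c + block] = image[r:r + block, c:c + block].mean()
    return out


def build_input(image, block=4):
    """Stack the original image and the super-pixel-like image as channels,
    giving an array of shape (2, H, W)."""
    smoothed = block_mean_image(image, block)
    return np.stack([image.astype(np.float64), smoothed], axis=0)


def balanced_bce(prob, mask, eps=1e-7):
    """Class-balanced binary cross-entropy (assumed form, not the paper's).
    Each class is weighted by the frequency of the other class, so rare
    lesion pixels contribute more per pixel than abundant background."""
    prob = np.clip(prob, eps, 1.0 - eps)
    pos_frac = mask.mean()
    w_pos = 1.0 - pos_frac  # weight for lesion pixels
    w_neg = pos_frac        # weight for background pixels
    loss = -(w_pos * mask * np.log(prob)
             + w_neg * (1.0 - mask) * np.log(1.0 - prob))
    return loss.mean()


def four_fold_indices(n, seed=0):
    """Return four (train_idx, val_idx) pairs covering n samples."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n), 4)
    return [
        (np.concatenate([folds[j] for j in range(4) if j != i]), folds[i])
        for i in range(4)
    ]
```

Weighting each class by the other class's frequency is only one common way to balance a loss. The paper's weighted-balanced loss is also meant to account for lesion size and may differ substantially from this form.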
