CCSI: Continual Class-Specific Impression for Data-free Class Incremental Learning


Jun 09, 2024

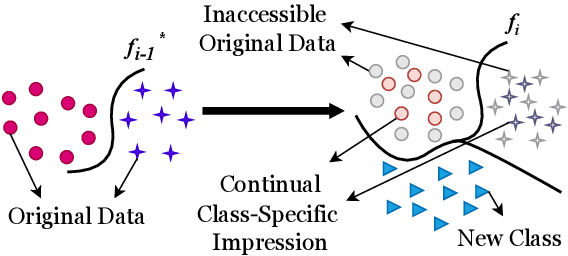

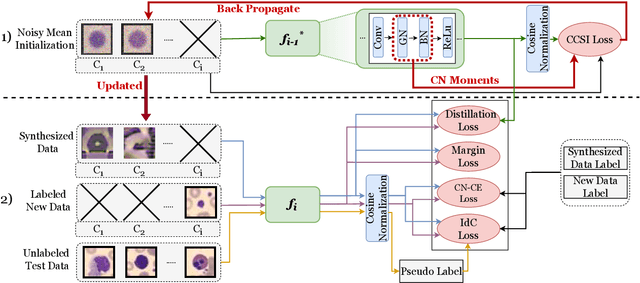

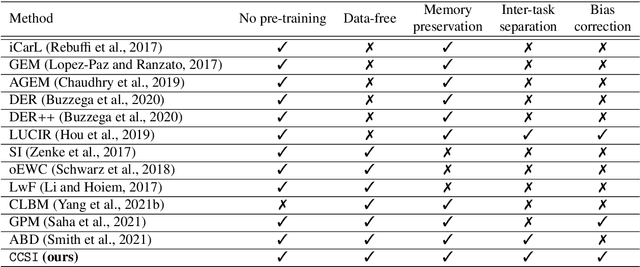

In real-world clinical settings, traditional deep learning-based classification methods struggle to diagnose newly introduced disease types because they require samples from all disease classes for offline training. Class incremental learning offers a promising solution by adapting a deep network trained on specific disease classes to handle new diseases. However, adapting the model to new data causes catastrophic forgetting, which degrades performance on earlier classes. Previously proposed methods for overcoming this require perpetual storage of previous samples, which raises practical concerns about privacy and storage regulations in healthcare. To this end, we propose a novel data-free class incremental learning framework that synthesizes data for learned classes instead of storing data from previous classes. Our key contributions are (i) acquiring synthetic data, known as Continual Class-Specific Impression (CCSI), for previously trained classes whose data is no longer accessible, and (ii) presenting a methodology to effectively use this data to update networks when new classes are introduced. We obtain CCSI by applying data inversion over the gradients of the classification model trained on previous classes. The inversion starts from the mean image of each class, a choice inspired by the common landmarks shared among medical images, and uses the statistics of continual normalization layers as a regularizer in this pixel-wise optimization process. Subsequently, we update the network by combining the synthesized data with new class data and incorporating several losses: an intra-domain contrastive loss to generalize the deep network trained on the synthesized data to real data, a margin loss to increase separation between previous classes and new ones, and a cosine-normalized cross-entropy loss to alleviate the adverse effects of imbalanced distributions in training data.
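To make the last of these losses concrete, the following is a minimal sketch of a cosine-normalized cross-entropy, written in plain Python for readability. It is not the authors' implementation: the function names, the fixed `scale` value of 10.0, and the toy vectors are assumptions chosen for illustration. The idea it shows is that logits are computed from the cosine similarity between a feature vector and each class weight vector, so a class whose weights have grown large in magnitude (as can happen for classes with more training data) does not dominate the prediction.

```python
import math


def cosine_logits(feature, class_weights, scale=10.0):
    """Return scaled cosine similarities between a feature and each class weight.

    `scale` is a hypothetical fixed temperature; in practice it may be learned.
    """
    f_norm = math.sqrt(sum(x * x for x in feature)) or 1.0
    logits = []
    for w in class_weights:
        w_norm = math.sqrt(sum(x * x for x in w)) or 1.0
        cos = sum(a * b for a, b in zip(feature, w)) / (f_norm * w_norm)
        logits.append(scale * cos)
    return logits


def cross_entropy(logits, target):
    """Numerically stable softmax cross-entropy for a single sample."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def cosine_normalized_ce(feature, class_weights, target, scale=10.0):
    """Cross-entropy computed on cosine-normalized logits."""
    return cross_entropy(cosine_logits(feature, class_weights, scale), target)


# Toy example (assumed values): class 1 has a much larger weight norm than class 0.
feature = [1.0, 0.2]
weights = [[1.0, 0.0], [0.0, 50.0]]

# Plain dot-product logits favor class 1 purely because of its weight magnitude.
dot_logits = [sum(a * b for a, b in zip(feature, w)) for w in weights]

# Cosine logits depend only on direction, so class 0 scores higher here.
cos_logits = cosine_logits(feature, weights)
loss = cosine_normalized_ce(feature, weights, target=0)
```

In this toy case the dot-product logits rank class 1 first, while the cosine logits rank class 0 first, which is the direction the feature actually points in. Rescaling any class weight vector leaves the cosine logits unchanged.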
