Categorical Keypoint Positional Embedding for Robust Animal Re-Identification

Paper and Code

Dec 01, 2024

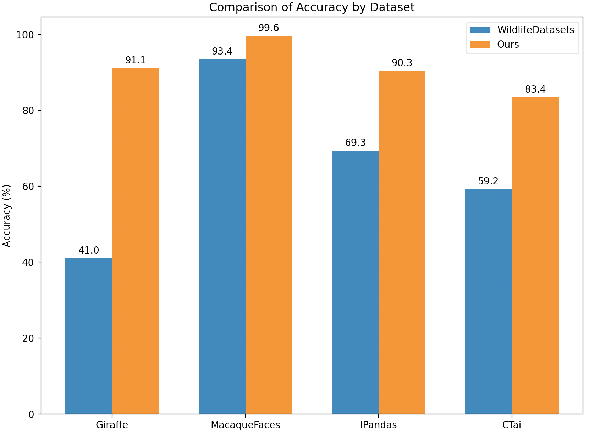

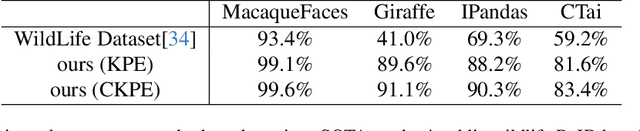

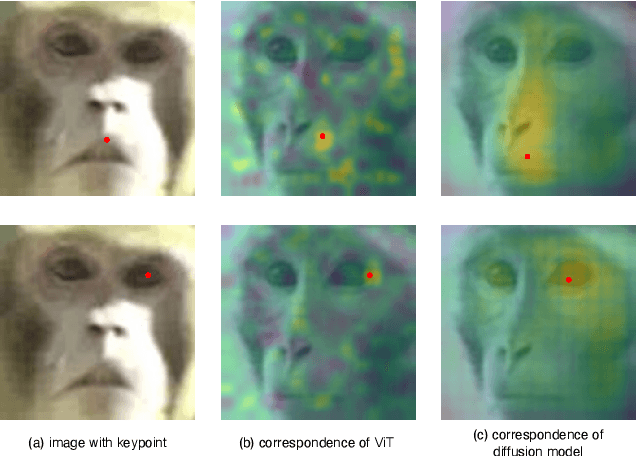

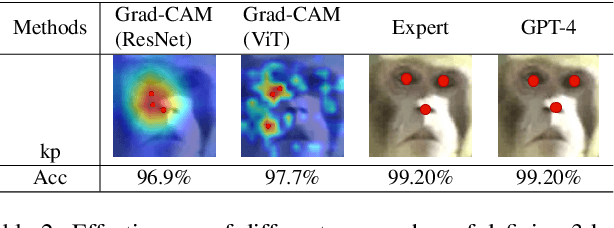

Animal re-identification (ReID) has become an indispensable tool in ecological research, playing a critical role in tracking population dynamics, analyzing behavioral patterns, and assessing ecological impacts, all of which are vital for informed conservation strategies. Unlike human ReID, animal ReID faces significant challenges due to the high variability in animal poses, diverse environmental conditions, and the inability to directly apply pre-trained models to animal data, making the identification process across species more complex. This work introduces an innovative keypoint propagation mechanism, which utilizes a single annotated image and a pre-trained diffusion model to propagate keypoints across an entire dataset, significantly reducing the cost of manual annotation. Additionally, we enhance the Vision Transformer (ViT) by implementing Keypoint Positional Encoding (KPE) and Categorical Keypoint Positional Embedding (CKPE), enabling the ViT to learn more robust and semantically-aware representations. This provides more comprehensive and detailed keypoint representations, leading to more accurate and efficient re-identification. Our extensive experimental evaluations demonstrate that this approach significantly outperforms existing state-of-the-art methods across four wildlife datasets. The code will be publicly released.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge