Can uncertainty boost the reliability of AI-based diagnostic methods in digital pathology?


Dec 17, 2021

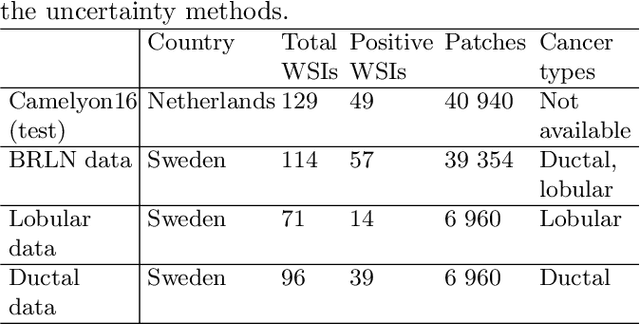

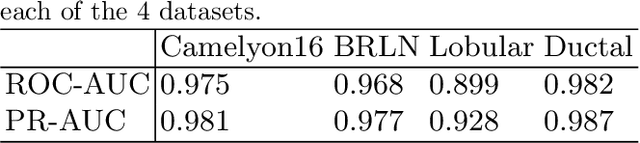

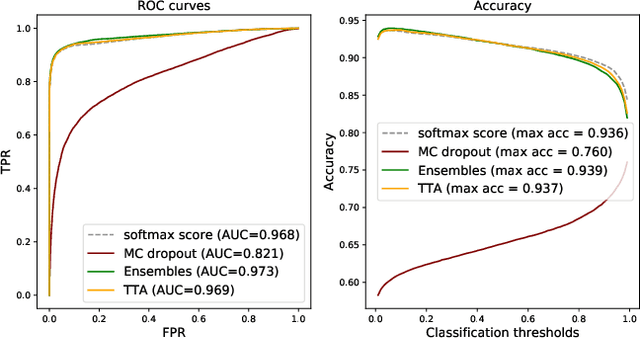

Deep learning (DL) has shown great potential in digital pathology applications. The robustness of a diagnostic DL-based solution is essential for safe clinical deployment. In this work, we evaluate whether adding uncertainty estimates to DL predictions in digital pathology can increase their value in clinical applications, either by boosting general predictive performance or by detecting mispredictions. We compare the effectiveness of model-integrated methods (MC dropout and deep ensembles) with a model-agnostic approach (test-time augmentation, TTA). In addition, we compare four uncertainty metrics. Our experiments focus on two domain shift scenarios: a shift to a different medical center and a shift to an underrepresented subtype of cancer. Our results show that uncertainty estimates can add some reliability and reduce sensitivity to the choice of classification threshold. Although advanced metrics and deep ensembles perform best in our comparison, their added value over simpler metrics and TTA is small. Importantly, the benefit of all evaluated uncertainty estimation methods is diminished by domain shift.
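
The three methods named in the abstract (MC dropout, deep ensembles, TTA) all produce several predictions for the same input, from which an uncertainty score can be derived. The sketch below illustrates that general idea for a single patch with a binary tumor probability. It is an assumption-laden illustration, not the authors' implementation: the function names, the two metrics shown (entropy of the mean prediction and variance across predictions), the example probabilities, and the `max_entropy` cutoff are all hypothetical, and the abstract does not state which four metrics the paper compares.

```python
import math
from statistics import mean, pvariance


def binary_entropy(p: float) -> float:
    """Entropy (in nats) of a Bernoulli distribution with parameter p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log(p) + (1.0 - p) * math.log(1.0 - p))


def summarize(probs: list[float]) -> dict[str, float]:
    """Summarize repeated predictions for ONE input.

    `probs` holds one tumor probability per stochastic forward pass
    (MC dropout), per ensemble member, or per augmented copy (TTA).
    The two metrics here are common choices, assumed for illustration.
    """
    p_mean = mean(probs)
    return {
        "mean": p_mean,                     # averaged prediction
        "entropy": binary_entropy(p_mean),  # uncertainty of the averaged prediction
        "variance": pvariance(probs),       # disagreement between predictions
    }


def flag_uncertain(summary: dict[str, float], max_entropy: float = 0.5) -> bool:
    """Flag a prediction for review when its entropy exceeds a hypothetical cutoff."""
    return summary["entropy"] > max_entropy


# Hypothetical probabilities, made up for this example.
confident = summarize([0.97, 0.95, 0.98, 0.96])
ambiguous = summarize([0.20, 0.75, 0.55, 0.40])

print(flag_uncertain(confident), flag_uncertain(ambiguous))
```

In a setup like this, flagged predictions could be routed to a pathologist rather than reported automatically, which is one way uncertainty estimates might help detect mispredictions.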
