Can I Still Trust You?: Understanding the Impact of Distribution Shifts on Algorithmic Recourses

Paper and Code

Dec 22, 2020

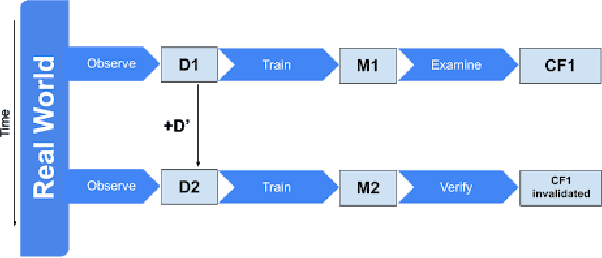

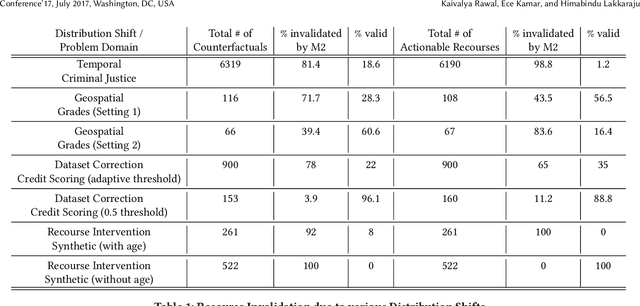

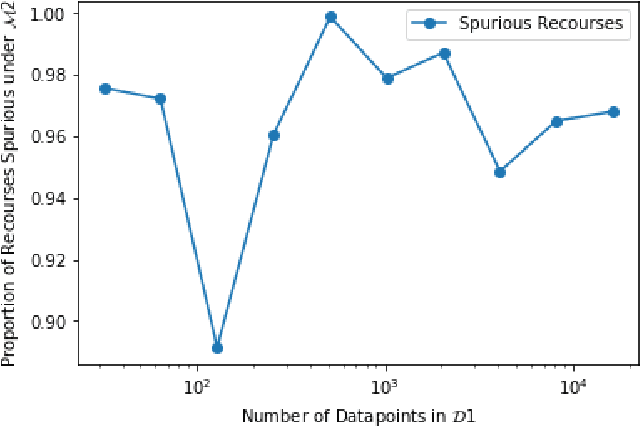

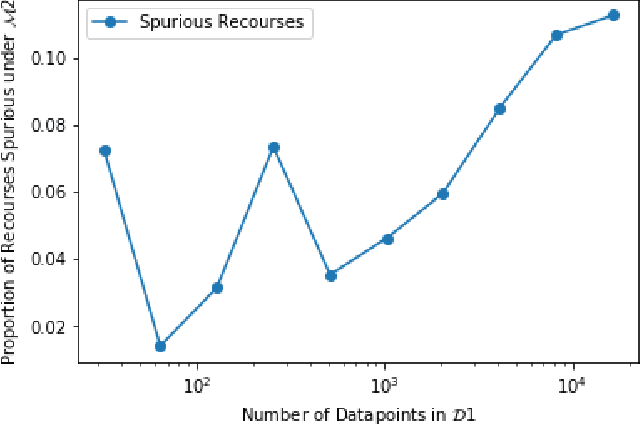

As predictive models are increasingly deployed to make consequential decisions, ranging from hiring to loan approvals, there is growing emphasis on designing algorithms that can provide reliable recourses to affected individuals. To this end, several recourse generation algorithms have been proposed in recent literature. However, there is little to no work on systematically assessing whether these algorithms actually generate reliable recourses. In this work, we assess the reliability of algorithmic recourses through the lens of distribution shifts; that is, we empirically and theoretically study whether, and which kinds of, recourses generated by state-of-the-art algorithms are robust to distribution shifts. To the best of our knowledge, this work makes the first attempt at addressing this critical question. We experiment with multiple synthetic and real-world datasets capturing different kinds of distribution shifts, including temporal shifts, geospatial shifts, and shifts due to data corrections. Our results demonstrate that all of these distribution shifts can invalidate the recourses generated by state-of-the-art algorithms. In addition, we find that recourse interventions themselves may cause distribution shifts, which in turn invalidate previously prescribed recourses. Our theoretical results establish that recourses (counterfactuals) that are close to the model decision boundary are more likely to be invalidated when the model is updated. However, state-of-the-art algorithms tend to prefer exactly these recourses because their cost functions penalize recourses (counterfactuals) that require large modifications to the original instance. Our findings not only expose fundamental flaws in recourse finding strategies but also pave the way for rethinking the design and development of recourse generation algorithms.
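The claim that recourses near the decision boundary are the most fragile can be illustrated with a toy example. The sketch below is not the paper's experimental setup: the two linear models, the grid of denied instances, and the helper names (`score`, `min_cost_recourse`, `invalidation_rate`) are all hypothetical, chosen only to show how a minimum-cost counterfactual that barely crosses the original boundary can fail once the model is updated.

```python
# Illustrative sketch only: the models, numbers, and helper names are
# hypothetical and are not taken from the paper.

def score(w, b, x):
    """Linear score w.x + b; a non-negative score is the favorable outcome."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def min_cost_recourse(w, b, x, margin):
    """Minimum-L2 counterfactual for a linear model.

    Moves x along w until its score under (w, b) equals `margin`, so
    `margin` controls how far past the decision boundary the recourse lands.
    """
    step = (margin - score(w, b, x)) / sum(wi * wi for wi in w)
    return [xi + step * wi for xi, wi in zip(x, w)]

# Original model and an assumed updated model (e.g. retrained after a shift).
W_OLD, B_OLD = [1.0, 1.0], 0.0
W_NEW, B_NEW = [1.0, 0.8], -0.3

# A small grid of instances that the original model denies (score < 0).
DENIED = [[-0.5 * i, -0.5 * j] for i in range(4) for j in range(4) if i + j > 0]

def invalidation_rate(margin):
    """Fraction of recourses that the updated model no longer accepts."""
    invalid = 0
    for x in DENIED:
        cf = min_cost_recourse(W_OLD, B_OLD, x, margin)
        if score(W_NEW, B_NEW, cf) < 0:
            invalid += 1
    return invalid / len(DENIED)

for margin in (0.05, 0.3, 1.0):
    print(f"margin={margin:.2f}  invalidated={invalidation_rate(margin):.2f}")
```

In this toy setup, every recourse placed just past the original boundary (margin 0.05) is rejected by the updated model, while none of those placed well past it (margin 1.0) is. A cost function that only penalizes distance from the original instance would always select the smallest margin, which is the tension the abstract describes.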
