Can collaborative learning be private, robust and scalable?


May 05, 2022

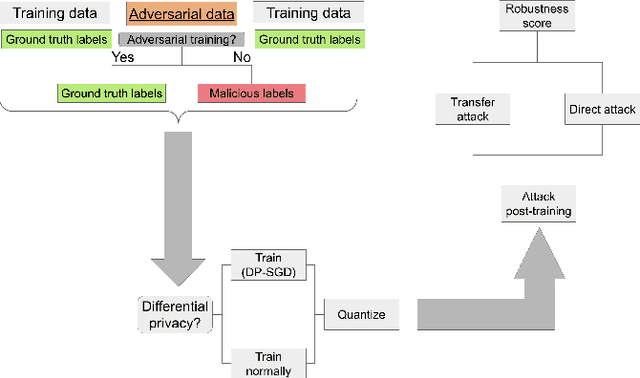

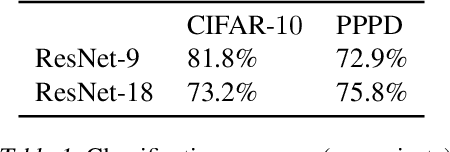

We investigate the effectiveness of combining differential privacy, model compression and adversarial training to improve the robustness of models against adversarial samples in training- and inference-time attacks. We explore the applications of these techniques, individually and in combination, to determine which method performs best without a significant utility trade-off. Our investigation provides a practical overview of methods that achieve competitive model performance, a significant reduction in model size and improved empirical adversarial robustness without severe performance degradation.

* Submitted to TPDP 2022
