CAFA: Class-Aware Feature Alignment for Test-Time Adaptation


Jun 01, 2022

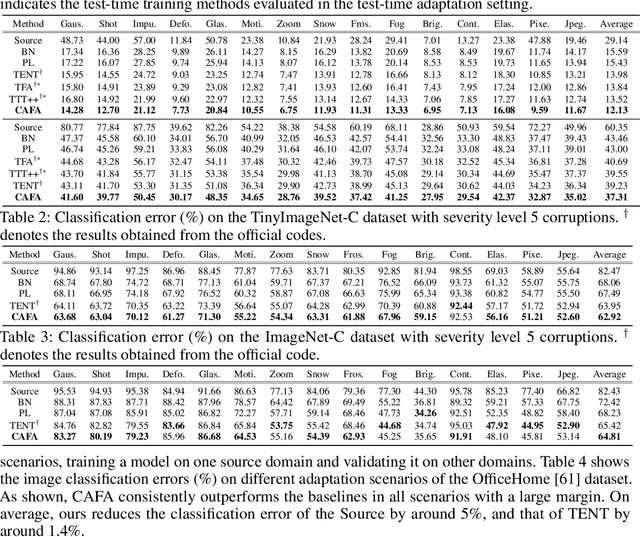

Despite recent advances in deep learning, deep networks still suffer from performance degradation when they encounter data that differs from their training distribution. To address this problem, test-time adaptation (TTA) aims to adapt a model to unlabeled test data at test time while simultaneously making predictions. TTA applies to pretrained networks without modifying their training procedures, which makes it possible to use the already well-formed source distribution for adaptation. One possible approach is to align the representation space of test samples to the source distribution (i.e., feature alignment). However, performing feature alignment in TTA is especially challenging because access to labeled source data is restricted during adaptation. That is, a model has no chance to learn test data in a class-discriminative manner, which was feasible in other adaptation tasks (e.g., unsupervised domain adaptation) via a supervised loss on the source data. Based on this observation, this paper proposes a simple yet effective feature alignment loss, termed Class-Aware Feature Alignment (CAFA), which simultaneously 1) encourages a model to learn target representations in a class-discriminative manner and 2) effectively mitigates distribution shifts at test time. Unlike previous approaches, our method requires no hyper-parameters or additional losses. We conduct extensive experiments and show that our proposed method consistently outperforms existing baselines.
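To make the idea of a class-aware alignment loss concrete, here is a minimal NumPy sketch. It is an illustration only, not the paper's exact formulation, which the abstract does not spell out. It assumes that per-class source feature means and a shared inverse covariance were computed before adaptation, and that pseudo-labels come from the model's own predictions on the test batch. The function name `class_aware_alignment_loss` and all argument names are hypothetical.

```python
import numpy as np

def class_aware_alignment_loss(features, class_means, shared_cov_inv, pseudo_labels):
    """Illustrative class-aware alignment loss (assumed form, not the paper's).

    features:       (N, D) test-time features
    class_means:    (C, D) source feature means per class (assumed precomputed)
    shared_cov_inv: (D, D) inverse of a shared source covariance (assumed precomputed)
    pseudo_labels:  (N,)   integer class index predicted for each test sample
    """
    # Difference between every feature and every class mean: (N, C, D)
    diffs = features[:, None, :] - class_means[None, :, :]
    # Squared Mahalanobis distance of each sample to each class: (N, C)
    dists = np.einsum("ncd,de,nce->nc", diffs, shared_cov_inv, diffs)
    # Treat negative distances as logits; use a numerically stable log-softmax
    logits = -dists
    logits = logits - logits.max(axis=1, keepdims=True)
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Pull each sample toward its pseudo-labeled class and away from the others
    return -log_probs[np.arange(len(features)), pseudo_labels].mean()

# Toy usage: two well-separated classes in 2-D with identity covariance
means = np.array([[0.0, 0.0], [10.0, 0.0]])
feats = means.copy()
loss_matched = class_aware_alignment_loss(feats, means, np.eye(2), np.array([0, 1]))
loss_swapped = class_aware_alignment_loss(feats, means, np.eye(2), np.array([1, 0]))
```

In this sketch the loss is small when a feature sits close to the source statistics of its assigned class relative to the other classes, and large otherwise. A single term of this kind covers both goals named in the abstract, alignment to the source distribution and class discrimination.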
