Building Rule Hierarchies for Efficient Logical Rule Learning from Knowledge Graphs

Paper and Code

Jun 30, 2020

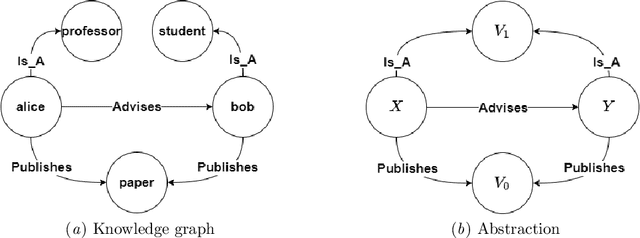

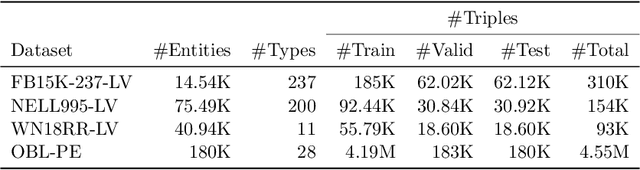

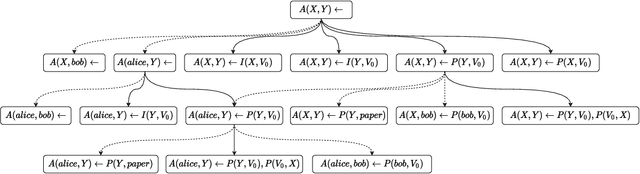

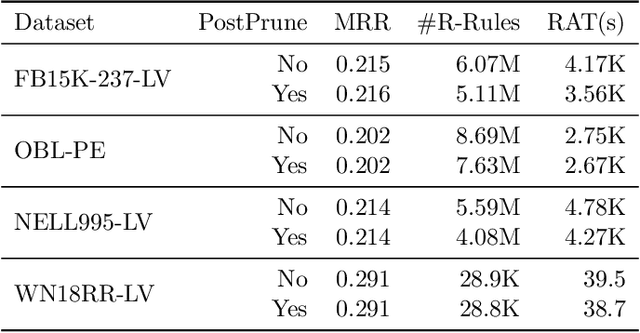

Many systems have been developed in recent years to mine logical rules from large-scale Knowledge Graphs (KGs), on the grounds that representing regularities as rules enables both the interpretable inference of new facts and the explanation of known facts. Among these systems, walk-based methods, which generate instantiated rules containing constants by abstracting sampled paths in KGs, demonstrate strong predictive performance and expressivity. However, because the space of possible rules is so large, these systems do not scale well: computational resources are often wasted on generating and evaluating unpromising rules. In this work, we address these scalability issues by proposing new methods for pruning unpromising rules using rule hierarchies. The approach consists of two phases. First, since rule hierarchies are not readily available in walk-based methods, we build a Rule Hierarchy Framework (RHF), which leverages a collection of subsumption frameworks to build a proper rule hierarchy from a set of learned rules. Second, we adapt RHF to an existing rule learner, for which we design and implement two Hierarchical Pruning Methods (HPMs) that use the generated hierarchies to remove irrelevant and redundant rules. Through experiments on four public benchmark datasets, we show that applying HPMs is effective in removing unpromising rules, which leads to significant reductions in both runtime and the number of learned rules without compromising predictive performance.
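To make the idea of hierarchy-based pruning concrete, the following is a minimal sketch and not the RHF or HPM implementation from the paper. It assumes a deliberately simplified notion of subsumption (a rule is more general than another if it has the same head and a proper subset of its body atoms) and a hypothetical redundancy criterion (a rule is dropped when a direct parent is at least as confident). The names `Rule`, `subsumes`, `build_hierarchy`, and `prune_redundant`, as well as the example atoms and confidence values, are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    head: str            # head atom, e.g. "speaks(X,english)"
    body: frozenset      # body atoms as strings (assumed representation)
    confidence: float    # assumed quality score of the rule


def subsumes(general: Rule, specific: Rule) -> bool:
    """Simplified subsumption: same head and a proper subset of body atoms."""
    return general.head == specific.head and general.body < specific.body


def build_hierarchy(rules):
    """Map each rule to its direct parents (closest strictly more general rules)."""
    parents = {}
    for rule in rules:
        ancestors = [g for g in rules if subsumes(g, rule)]
        # A direct parent is an ancestor that does not subsume another ancestor.
        parents[rule] = [
            a for a in ancestors if not any(subsumes(a, b) for b in ancestors)
        ]
    return parents


def prune_redundant(rules, parents):
    """Hypothetical criterion: drop a rule if a direct parent is at least as confident."""
    return [
        r for r in rules
        if not any(p.confidence >= r.confidence for p in parents[r])
    ]


head = "speaks(X,english)"
general = Rule(head, frozenset({"born_in(X,Y)"}), 0.6)
good = Rule(head, frozenset({"born_in(X,Y)", "located_in(Y,uk)"}), 0.9)
bad = Rule(head, frozenset({"born_in(X,Y)", "located_in(Y,europe)"}), 0.5)
deep = Rule(
    head,
    frozenset({"born_in(X,Y)", "located_in(Y,uk)", "located_in(Y,europe)"}),
    0.9,
)

rules = [general, good, bad, deep]
parents = build_hierarchy(rules)
kept = prune_redundant(rules, parents)
```

In this toy example, `bad` and `deep` are pruned because a more general parent already reaches the same or higher confidence, so the extra body atoms add nothing. Subsumption between real first-order rules additionally requires handling variable substitutions and constants, which this sketch omits.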
