Building a Chaotic Proved Neural Network


Jan 23, 2011

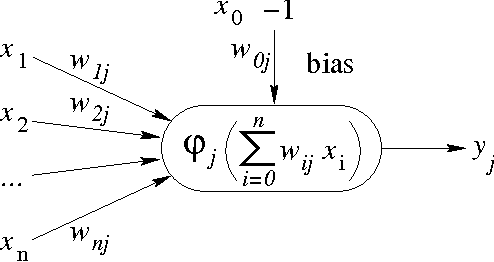

Chaotic neural networks have received a great deal of attention in recent years. In this paper, we establish a precise correspondence between so-called chaotic iterations and a particular class of artificial neural networks: global recurrent multilayer perceptrons. We show formally that these iterations can be made to behave chaotically, in the sense of Devaney, and thus obtain the first neural networks proven to be chaotic. Several neural networks with different architectures are trained to exhibit chaotic behavior.

* 6 pages, submitted to ICCANS 2011
