Bridging the gap between regret minimization and best arm identification, with application to A/B tests


Oct 09, 2018

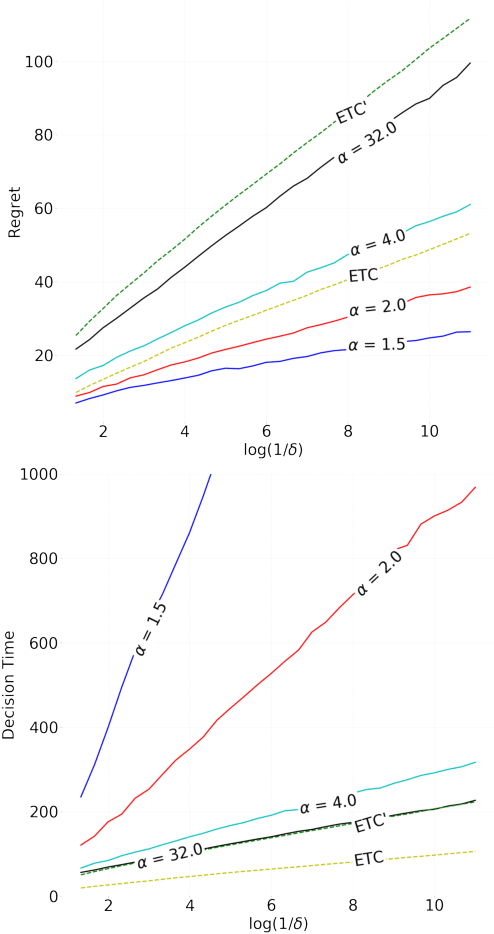

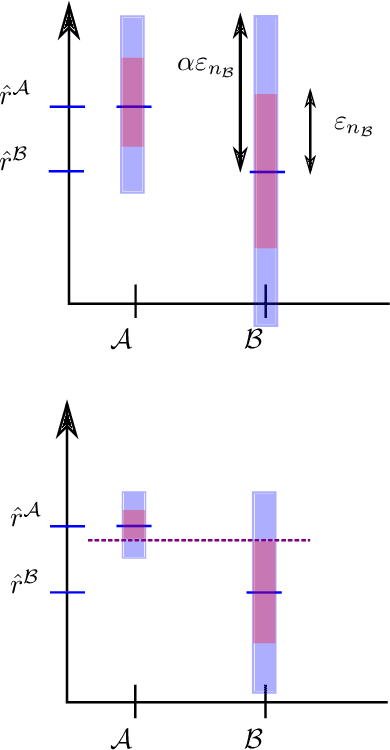

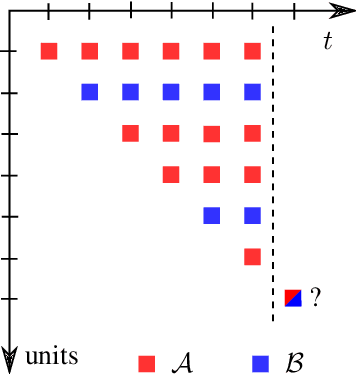

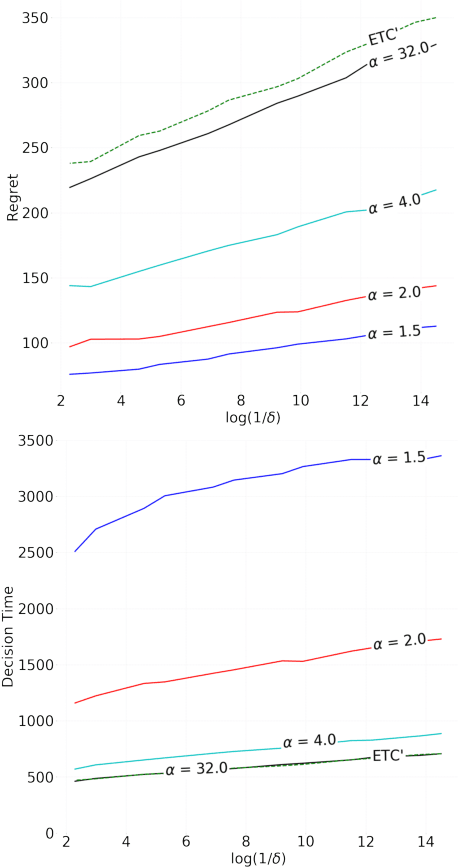

State-of-the-art online learning procedures focus either on selecting the best alternative ("best arm identification") or on minimizing the cost (the "regret"). We merge these two objectives by providing a theoretical analysis of cost-minimizing algorithms that are also delta-PAC (with a proven bound on the decision time), thereby achieving regret minimization and best arm identification at the same time. This analysis sheds light on the common observation that ill-calibrated UCB algorithms minimize regret while still quickly identifying the best arm. We also extend these results to the non-iid case faced by many practitioners. This provides a technique for trading off cost against decision time in adaptive tests, with applications ranging from website A/B testing to clinical trials.
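To make the cost versus decision time trade-off concrete, here is a minimal Python sketch of a UCB-style sampler paired with a confidence-interval stopping rule on Bernoulli arms. It is an illustration under stated assumptions, not the algorithm analyzed in the paper: the function name `ucb_with_stopping`, the exploration multiplier `alpha`, and the Hoeffding-style radius with its constants are all hypothetical choices made for this example.

```python
import math
import random


def ucb_with_stopping(means, delta=0.05, alpha=2.0, horizon=100_000, seed=0):
    """Sample Bernoulli arms with a UCB index and stop once one arm's lower
    confidence bound exceeds every other arm's upper confidence bound.

    Returns (chosen_arm or None, rounds_played, cumulative_pseudo_regret).
    """
    rng = random.Random(seed)
    k = len(means)
    counts = [0] * k
    sums = [0.0] * k
    best_mean = max(means)
    regret = 0.0

    def radius(n, t):
        # Hoeffding-style confidence radius; the constants are an assumed,
        # conservative choice and are not taken from the paper.
        return math.sqrt(math.log(4 * k * t * t / delta) / (2 * n))

    for t in range(1, horizon + 1):
        if t <= k:
            arm = t - 1  # initialization: pull each arm once
        else:
            # Sampling index: empirical mean plus an inflated radius.
            # alpha is a hypothetical knob: larger values explore more.
            arm = max(
                range(k),
                key=lambda a: sums[a] / counts[a] + alpha * radius(counts[a], t),
            )
        reward = 1.0 if rng.random() < means[arm] else 0.0
        counts[arm] += 1
        sums[arm] += reward
        regret += best_mean - means[arm]

        if t >= k:
            # Stopping rule uses the non-inflated radius.
            lcb = [sums[a] / counts[a] - radius(counts[a], t) for a in range(k)]
            ucb = [sums[a] / counts[a] + radius(counts[a], t) for a in range(k)]
            leader = max(range(k), key=lambda a: lcb[a])
            if all(lcb[leader] > ucb[a] for a in range(k) if a != leader):
                return leader, t, regret
    return None, horizon, regret


if __name__ == "__main__":
    arm, stop_time, regret = ucb_with_stopping([0.2, 0.8])
    print(f"chosen arm={arm}, decision time={stop_time}, regret={regret:.1f}")
```

In this sketch, raising `alpha` pulls the weaker arms more often, which tends to shorten the decision time at the price of higher regret; lowering it toward 1 does the opposite and may delay the decision considerably.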
