Bridging Dimensions: Confident Reachability for High-Dimensional Controllers

Paper and Code

Nov 08, 2023

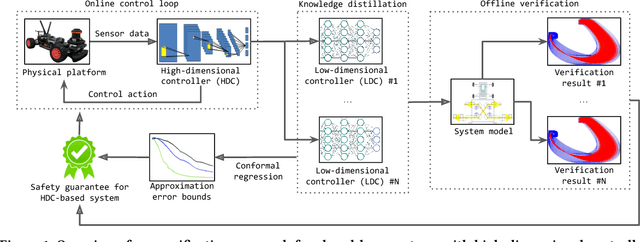

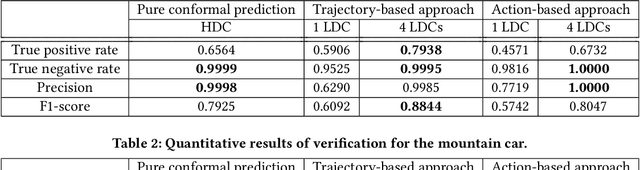

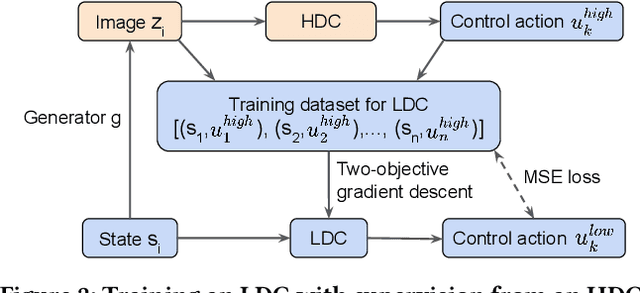

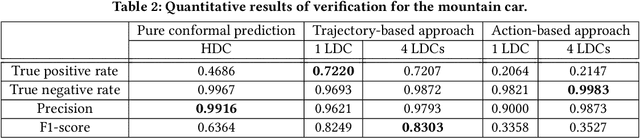

Autonomous systems are increasingly implemented using end-end-end trained controllers. Such controllers make decisions that are executed on the real system with images as one of the primary sensing modalities. Deep neural networks form a fundamental building block of such controllers. Unfortunately, the existing neural-network verification tools do not scale to inputs with thousands of dimensions. Especially when the individual inputs (such as pixels) are devoid of clear physical meaning. This paper takes a step towards connecting exhaustive closed-loop verification with high-dimensional controllers. Our key insight is that the behavior of a high-dimensional controller can be approximated with several low-dimensional controllers in different regions of the state space. To balance approximation and verifiability, we leverage the latest verification-aware knowledge distillation. Then, if low-dimensional reachability results are inflated with statistical approximation errors, they yield a high-confidence reachability guarantee for the high-dimensional controller. We investigate two inflation techniques -- based on trajectories and actions -- both of which show convincing performance in two OpenAI gym benchmarks.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge