BriarPatches: Pixel-Space Interventions for Inducing Demographic Parity


Dec 17, 2018

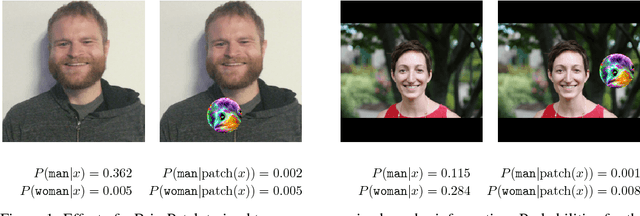

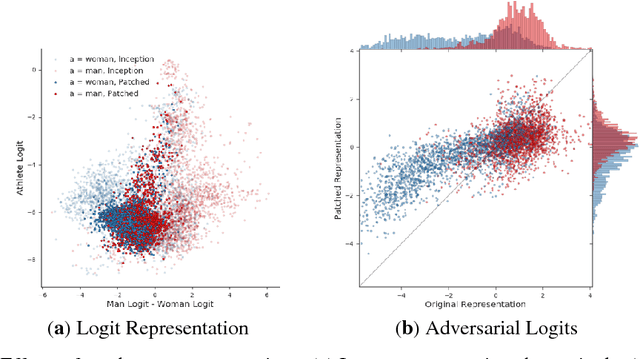

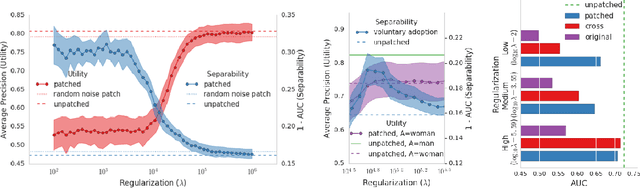

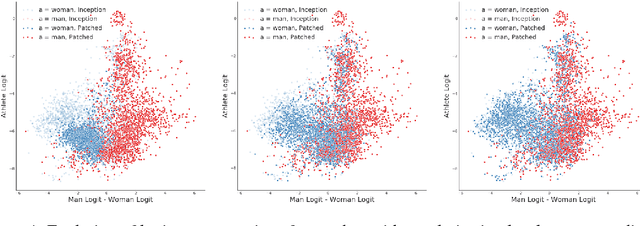

We introduce the BriarPatch, a pixel-space intervention that obscures sensitive attributes from the representations encoded in pre-trained classifiers. The patches encourage internal model representations not to encode sensitive information, which pushes downstream predictors towards demographic parity with respect to that sensitive information. As a result, BriarPatches provide an intervention mechanism available at the user level and complement prior research on fair representations, which could previously be applied only by model developers and ML experts.
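Demographic parity requires a predictor's positive-prediction rate to be the same across the groups defined by the sensitive attribute, i.e. P(Ŷ = 1 | S = 0) = P(Ŷ = 1 | S = 1). The following is a minimal sketch that assumes a binary downstream predictor and a binary sensitive attribute. It computes the parity gap, which could be used to compare a predictor's outputs on unpatched and patched images. The function name and the toy prediction lists are hypothetical illustrations, not the paper's evaluation code or results.

```python
from typing import Sequence


def demographic_parity_gap(predictions: Sequence[int], groups: Sequence[int]) -> float:
    """Absolute difference in positive-prediction rates between groups 0 and 1.

    Hypothetical helper: a gap of 0.0 means the binary predictor satisfies
    demographic parity, P(Y_hat = 1 | S = 0) == P(Y_hat = 1 | S = 1).
    """
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    rates = []
    for g in (0, 1):
        # Collect the predictions for every sample whose sensitive attribute is g.
        group_preds = [p for p, s in zip(predictions, groups) if s == g]
        if not group_preds:
            raise ValueError(f"no samples for group {g}")
        rates.append(sum(group_preds) / len(group_preds))
    return abs(rates[0] - rates[1])


# Hypothetical toy predictions for illustration only, not results from the paper.
groups = [0, 0, 0, 0, 1, 1, 1, 1]
preds_unpatched = [1, 1, 1, 0, 0, 0, 1, 0]  # positive rates: 0.75 vs 0.25
preds_patched = [1, 0, 1, 0, 1, 0, 1, 0]    # positive rates: 0.5 vs 0.5

print(demographic_parity_gap(preds_unpatched, groups))  # 0.5
print(demographic_parity_gap(preds_patched, groups))    # 0.0
```

The abstract claims that BriarPatches push downstream predictors towards demographic parity. Under this metric, that claim corresponds to the gap moving towards zero.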

* 6 pages, 5 figures, NeurIPS Workshop on Ethical, Social and Governance Issues in AI
