Bounding probabilities of causation through the causal marginal problem

Apr 04, 2023

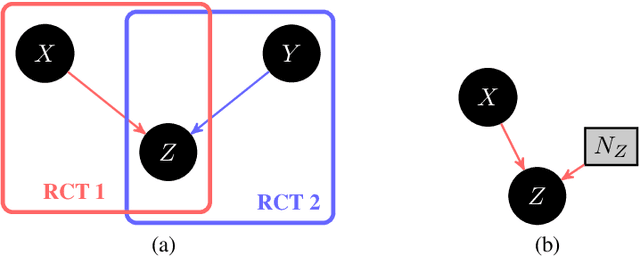

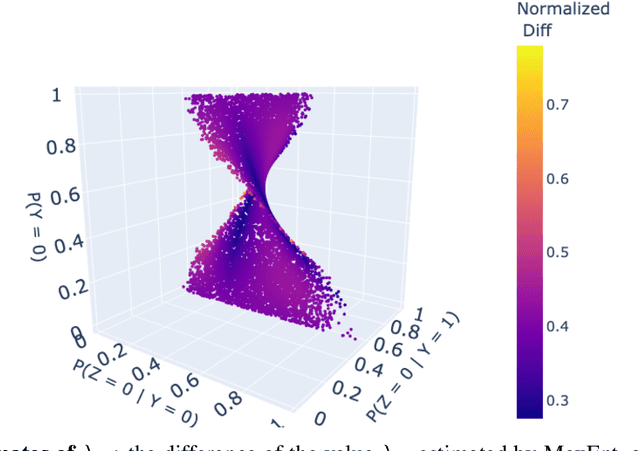

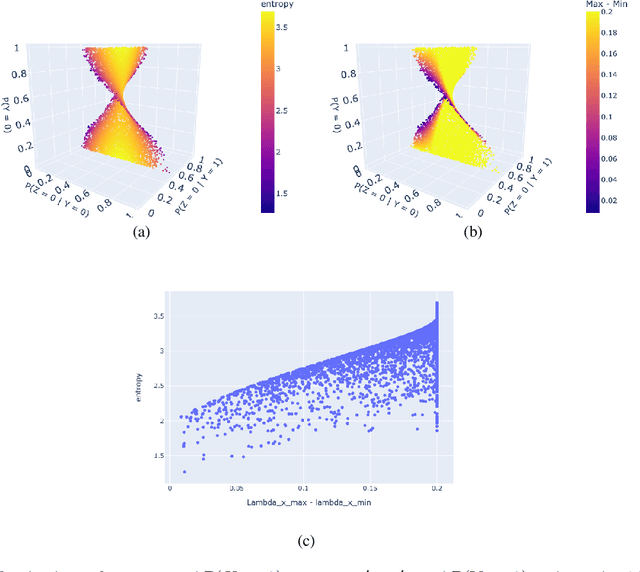

Probabilities of causation play a fundamental role in decision making in law, health care and public policy. Nevertheless, their point identification is challenging and requires strong assumptions such as monotonicity. In the absence of such assumptions, existing work needs multiple datasets that contain the same treatment and outcome variables in order to establish bounds on these probabilities. However, in many clinical trials and public policy evaluations, there exist independent datasets that each examine the effect of a different treatment on the same outcome variable. Here, we outline how to significantly tighten existing bounds on the probabilities of causation by imposing counterfactual consistency between SCMs constructed from such independent datasets (the 'causal marginal problem'). Next, we describe a new information-theoretic approach to falsifying counterfactual probabilities, using conditional mutual information to quantify counterfactual influence. This approach generalises to arbitrary discrete variables and any number of treatments, and renders the causal marginal problem more interpretable. Since the question of what counts as 'tight enough' is left to the user, we provide an additional method of inference for when the bounds are unsatisfactory: a maximum-entropy-based method that defines a metric for the space of plausible SCMs and proposes the entropy-maximising SCM for inferring counterfactuals in the absence of more information.
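To make "bounds on the probabilities of causation" concrete, the sketch below computes the classical Tian-Pearl bounds on the probability of necessity and sufficiency (PNS) for a binary treatment and outcome, using interventional probabilities from a single experiment. It illustrates the kind of baseline bound that is typically tightened, not the method of this paper; the function name `pns_bounds` and its parameter names are assumptions made for illustration.

```python
from typing import Tuple


def pns_bounds(p_y_do_x: float, p_y_do_not_x: float) -> Tuple[float, float]:
    """Classical Tian-Pearl bounds on PNS from experimental data alone.

    p_y_do_x     : P(Y=1 | do(X=1)), outcome rate under treatment
    p_y_do_not_x : P(Y=1 | do(X=0)), outcome rate under control

    Hypothetical helper for illustration; not taken from the paper's code.
    """
    for p in (p_y_do_x, p_y_do_not_x):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie in [0, 1]")

    # Lower bound: the average treatment effect, floored at zero.
    lower = max(0.0, p_y_do_x - p_y_do_not_x)
    # Upper bound: PNS cannot exceed P(y | do(x)) or P(not y | do(not x)).
    upper = min(p_y_do_x, 1.0 - p_y_do_not_x)
    return lower, upper


if __name__ == "__main__":
    lo, hi = pns_bounds(0.75, 0.25)
    print(f"PNS lies in [{lo:.2f}, {hi:.2f}]")
```

Under monotonicity the PNS is point identified and equals the lower bound, which is why dropping that assumption leaves an interval rather than a single value.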
