BiSTF: Bilateral-Branch Self-Training Framework for Semi-Supervised Large-scale Fine-Grained Recognition


Jul 14, 2021

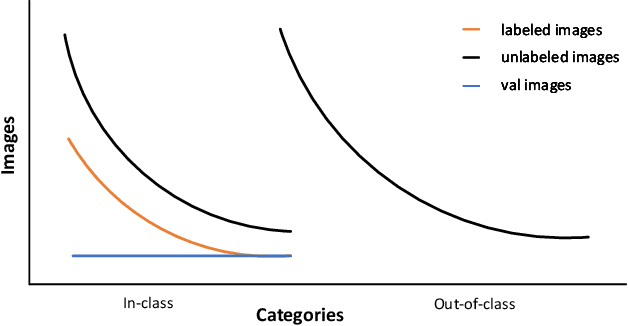

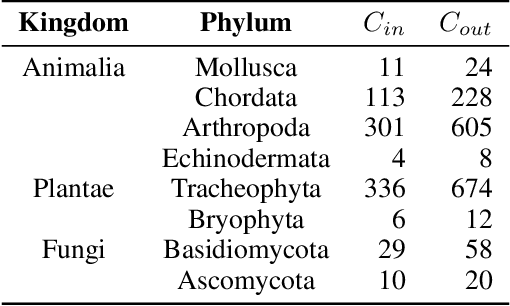

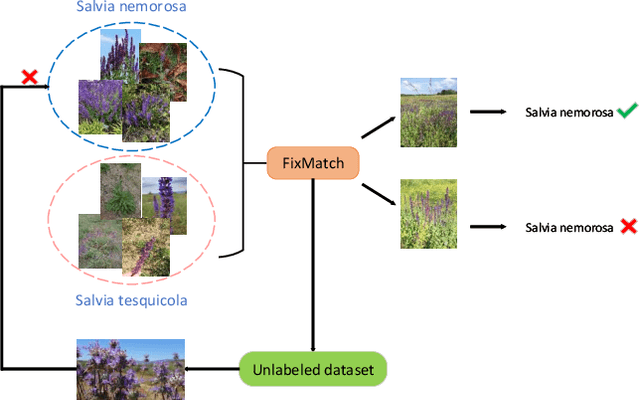

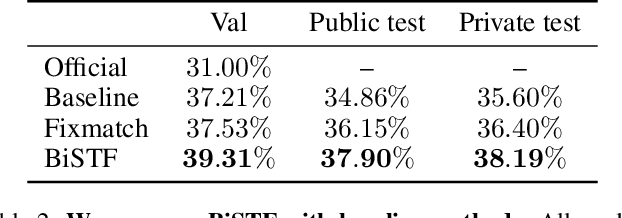

Semi-supervised fine-grained recognition is a challenging task because of data imbalance, high inter-class similarity, and domain mismatch. In recent years, the field has made great progress and many methods have achieved strong performance. However, these methods hardly generalize to large-scale datasets such as Semi-iNat, because they are prone to noise in the unlabeled data and learn features poorly from imbalanced fine-grained data. In this work, we propose the Bilateral-Branch Self-Training Framework (BiSTF), a simple yet effective framework that improves existing semi-supervised learning (SSL) methods on class-imbalanced and domain-shifted fine-grained data. By adjusting the update frequency through stochastic epoch update, BiSTF iteratively retrains a baseline SSL model with a labeled set that is expanded by selectively adding pseudo-labeled samples from an unlabeled set, such that the distribution of the pseudo-labeled samples is the same as that of the labeled data. We show that BiSTF outperforms the existing state-of-the-art SSL algorithm on the Semi-iNat dataset.
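The abstract does not spell out how pseudo-labeled samples are selected, so the following is only a minimal sketch of one way to make the selected samples follow the labeled class distribution. The function name `select_pseudo_labeled`, the `budget` parameter, and the rule of keeping the most confident predictions per class are all assumptions made for illustration, not the authors' method. Stochastic epoch update is not sketched here.

```python
def select_pseudo_labeled(probs, labeled_counts, budget):
    """Pick pseudo-labeled samples whose class mix mirrors the labeled set.

    probs          : list of per-sample class-probability lists (model output)
    labeled_counts : number of labeled samples per class
    budget         : hypothetical cap on how many samples to add in total
    Returns a sorted list of (sample_index, pseudo_label) pairs.
    """
    total = sum(labeled_counts)
    # Per-class quota proportional to the labeled class frequency (rounded down).
    quotas = [budget * count // total for count in labeled_counts]

    # Group unlabeled samples by their predicted (argmax) class.
    by_class = {}
    for index, row in enumerate(probs):
        confidence = max(row)
        label = row.index(confidence)
        by_class.setdefault(label, []).append((confidence, index))

    # Within each class, keep the most confident predictions up to the quota.
    selected = []
    for label, quota in enumerate(quotas):
        ranked = sorted(by_class.get(label, []), key=lambda item: -item[0])
        selected.extend((index, label) for _, index in ranked[:quota])
    return sorted(selected)


if __name__ == "__main__":
    probs = [[0.9, 0.1], [0.8, 0.2], [0.6, 0.4], [0.3, 0.7], [0.45, 0.55]]
    print(select_pseudo_labeled(probs, labeled_counts=[3, 1], budget=4))
```

In a self-training loop, the returned pairs would be appended to the labeled set before the baseline SSL model is retrained. With a quota per class, a class that is rare in the labeled data also stays rare among the added samples, which is the distribution-matching property the abstract describes.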
