Best Arm Identification for Contaminated Bandits


Oct 19, 2018

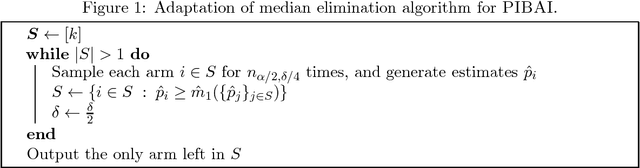

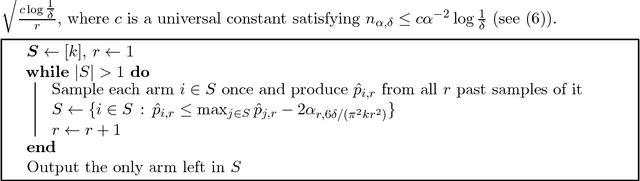

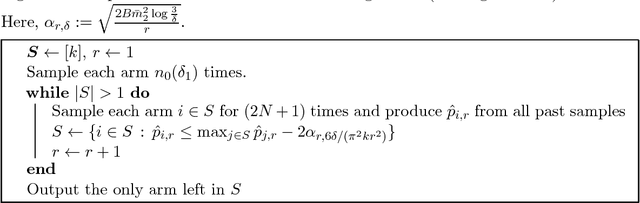

This paper studies active learning in the context of robust statistics. Specifically, we propose a variant of the Best Arm Identification problem for *contaminated bandits*, where each arm pull has probability $\varepsilon$ of generating a sample from an arbitrary contamination distribution instead of the true underlying distribution. The goal is to identify the best (or approximately best) true distribution with high probability, with a secondary goal of providing guarantees on the quality of this distribution. The primary challenge of the contaminated bandit setting is that the true distributions are only partially identifiable, even with infinite samples. To address this, we first develop tight, non-asymptotic sample complexity bounds for high-probability estimation of the first two robust moments (median and median absolute deviation) from contaminated samples, which may be of independent interest. Using these results, we adapt several classical Best Arm Identification algorithms to the contaminated bandit setting and derive sample complexity upper bounds for our problem. Finally, we provide matching information-theoretic lower bounds on the sample complexity (up to a small logarithmic factor). Our results suggest an inherent robustness of classical Best Arm Identification algorithms.
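To make the setting concrete, the following is a minimal sketch of the contamination model and the two robust moments the abstract mentions (median and median absolute deviation). It is not the paper's algorithm: the arm selection shown is plain uniform sampling followed by picking the largest empirical median, and the Gaussian arms, the fixed contamination value of 100, and the helper names `pull`, `median_and_mad`, and `pick_best_arm` are all assumptions made for illustration.

```python
import random
import statistics


def pull(rng, true_mean, eps, contamination_value=100.0):
    """One arm pull: with probability eps return a contaminated sample,
    otherwise a sample from the (assumed) Gaussian true distribution."""
    if rng.random() < eps:
        return contamination_value  # hypothetical adversarial outlier
    return rng.gauss(true_mean, 1.0)


def median_and_mad(samples):
    """Empirical median and median absolute deviation (MAD)."""
    med = statistics.median(samples)
    mad = statistics.median([abs(x - med) for x in samples])
    return med, mad


def pick_best_arm(true_means, eps, pulls_per_arm, seed=0):
    """Uniform sampling baseline: pull every arm equally often and
    return the index of the arm with the largest empirical median."""
    rng = random.Random(seed)
    medians = []
    for mu in true_means:
        samples = [pull(rng, mu, eps) for _ in range(pulls_per_arm)]
        med, _ = median_and_mad(samples)
        medians.append(med)
    best = max(range(len(true_means)), key=lambda i: medians[i])
    return best, medians


if __name__ == "__main__":
    best, medians = pick_best_arm([0.0, 0.5, 2.0], eps=0.1, pulls_per_arm=2000)
    print("selected arm:", best)
    print("empirical medians:", medians)
```

In this toy setup the contaminated samples would drag an empirical mean far from the true value, whereas the empirical median moves only by a bounded amount that depends on $\varepsilon$. That residual shift does not vanish with more samples, which is one way to see why the true distributions are only partially identifiable.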
