Beneficial Perturbation Network for designing general adaptive artificial intelligence systems

Sep 27, 2020

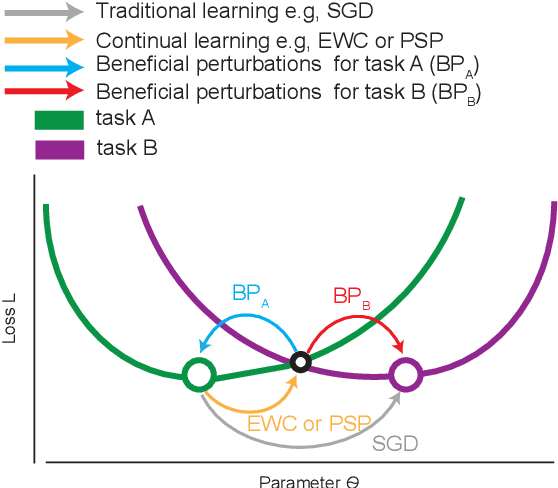

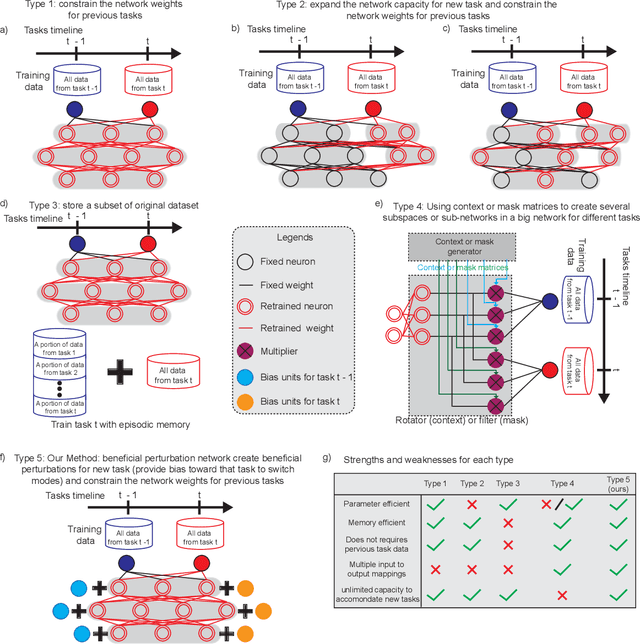

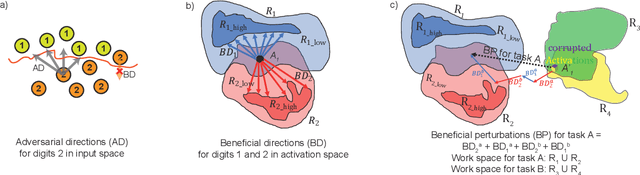

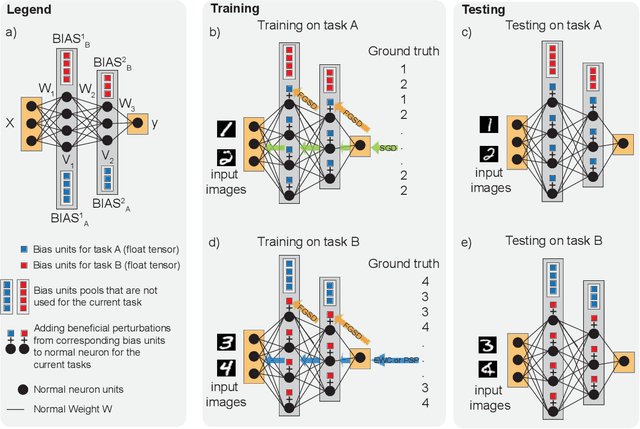

The human brain is the gold standard of adaptive learning. It can not only learn and benefit from experience but also adapt to new situations. In contrast, deep neural networks learn only one sophisticated but fixed mapping from inputs to outputs. This limits their applicability to more dynamic situations, where the input-to-output mapping may change with context. A salient example is continual learning: learning new, independent tasks sequentially without forgetting previous tasks. Continual learning of multiple tasks in artificial neural networks using gradient descent leads to catastrophic forgetting, whereby the previously learned mapping for an old task is erased when the network learns new mappings for new tasks. Here, we propose a new, biologically plausible type of deep neural network with extra, out-of-network, task-dependent biasing units to accommodate these dynamic situations. This allows, for the first time, a single network to learn potentially unlimited parallel input-to-output mappings and to switch between them on the fly at runtime. Biasing units are programmed by leveraging beneficial perturbations (the opposite of well-known adversarial perturbations) for each task. Beneficial perturbations for a given task bias the network toward that task, essentially switching the network into a different mode to process that task. This largely eliminates catastrophic interference between tasks. Our approach is memory-efficient and parameter-efficient, can accommodate many tasks, and achieves state-of-the-art performance across different tasks and domains.
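The idea of task-dependent biasing units can be illustrated with a deliberately tiny sketch. This is not the architecture from the paper: it assumes a single frozen weight shared by all tasks, one scalar bias unit per task, and two toy threshold tasks. All names (`W`, `make_task`, `train_bias`, and so on) are hypothetical. The bias for each task is updated in the direction that lowers that task's loss, which is the opposite of an adversarial update that raises it, and the shared weight is never changed.

```python
import math

W = 4.0  # frozen shared weight (hypothetical value); never updated below


def predict(x, bias):
    """Probability of class 1 from the frozen weight plus a task bias unit."""
    return 1.0 / (1.0 + math.exp(-(W * x + bias)))


def make_task(threshold):
    """Toy task (an assumption): label is 1 when x exceeds the threshold."""
    xs = [i / 4.0 for i in range(-12, 13)]  # -3.0 .. 3.0 in steps of 0.25
    return [(x, 1.0 if x > threshold else 0.0) for x in xs]


def loss_grad_wrt_bias(data, bias):
    """Gradient of the mean cross-entropy loss with respect to the bias unit."""
    return sum(predict(x, bias) - y for x, y in data) / len(data)


def train_bias(data, steps=2000, lr=1.0):
    """Fit only the task's bias unit; the shared weight W stays fixed."""
    bias = 0.0
    for _ in range(steps):
        # Beneficial direction: step against the gradient to reduce the loss.
        # An adversarial perturbation would step along the gradient instead.
        bias -= lr * loss_grad_wrt_bias(data, bias)
    return bias


def accuracy(data, bias):
    """Fraction of examples classified correctly under the given bias unit."""
    hits = sum((predict(x, bias) > 0.5) == (y == 1.0) for x, y in data)
    return hits / len(data)


tasks = {"A": make_task(1.0), "B": make_task(-1.0)}
biases = {name: train_bias(data) for name, data in tasks.items()}

for name, data in tasks.items():
    for bias_name, bias in biases.items():
        print(f"task {name} with bias {bias_name}: {accuracy(data, bias):.2f}")
```

In this toy setting, selecting a task's own bias unit switches the frozen model into the mode for that task, while the other task's bias gives a worse fit. Because training task B never touches `W` or task A's bias, it cannot overwrite what was stored for task A.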
