Benchmarking Pedestrian Odometry: The Brown Pedestrian Odometry Dataset (BPOD)


Dec 24, 2021

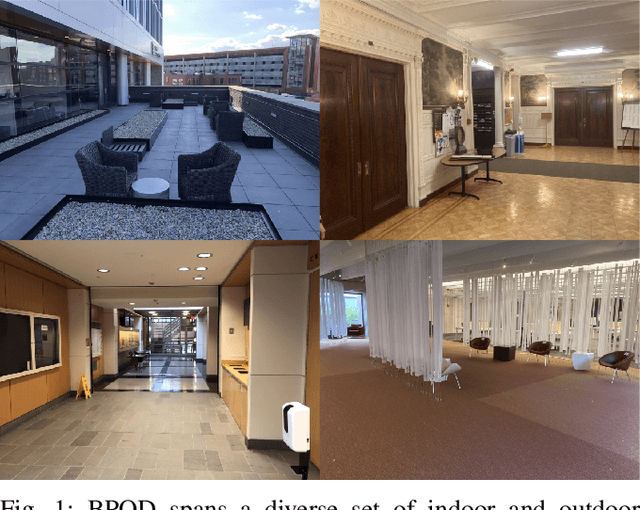

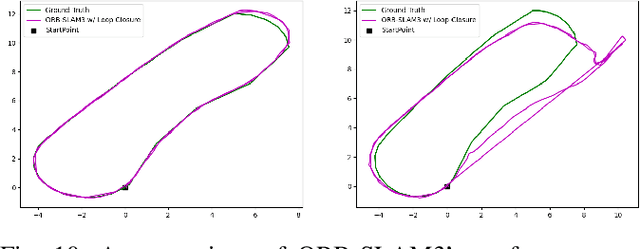

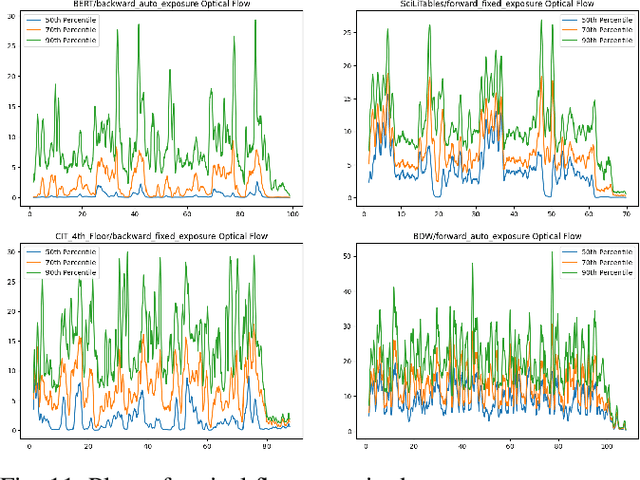

We present the Brown Pedestrian Odometry Dataset (BPOD) for benchmarking visual odometry (VO) algorithms in head-mounted pedestrian settings. The dataset was captured using synchronized global and rolling shutter stereo cameras in 12 diverse indoor and outdoor locations on Brown University's campus. Compared to existing datasets, BPOD contains more image blur and self-rotation, which are common in pedestrian odometry but rare elsewhere. Ground-truth trajectories are generated from stick-on markers placed along the pedestrian's path, and the pedestrian's position is documented using a third-person video. We evaluate the performance of representative direct, feature-based, and learning-based VO methods on BPOD. Our results show that significant development is needed to successfully capture pedestrian trajectories. The dataset is available at https://doi.org/10.26300/c1n7-7p93
