Behaviour-conditioned policies for cooperative reinforcement learning tasks

Paper and Code

Oct 04, 2021

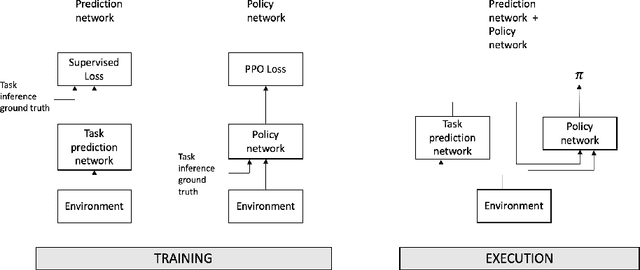

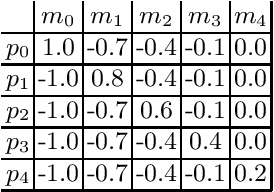

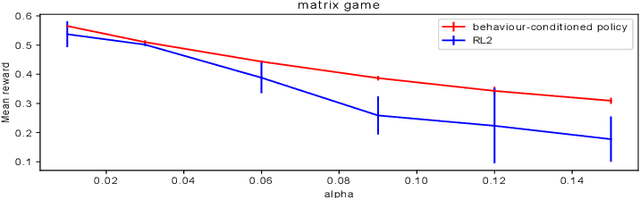

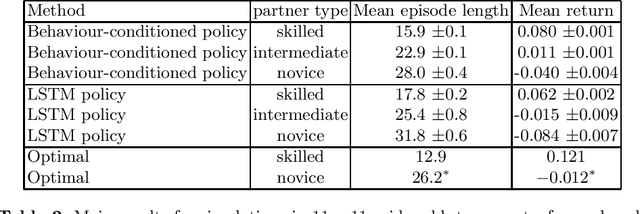

The cooperation among AI systems, and between AI systems and humans is becoming increasingly important. In various real-world tasks, an agent needs to cooperate with unknown partner agent types. This requires the agent to assess the behaviour of the partner agent during a cooperative task and to adjust its own policy to support the cooperation. Deep reinforcement learning models can be trained to deliver the required functionality but are known to suffer from sample inefficiency and slow learning. However, adapting to a partner agent behaviour during the ongoing task requires ability to assess the partner agent type quickly. We suggest a method, where we synthetically produce populations of agents with different behavioural patterns together with ground truth data of their behaviour, and use this data for training a meta-learner. We additionally suggest an agent architecture, which can efficiently use the generated data and gain the meta-learning capability. When an agent is equipped with such a meta-learner, it is capable of quickly adapting to cooperation with unknown partner agent types in new situations. This method can be used to automatically form a task distribution for meta-training from emerging behaviours that arise, for example, through self-play.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge