Bag of Tricks for Optimizing Transformer Efficiency

Paper and Code

Sep 09, 2021

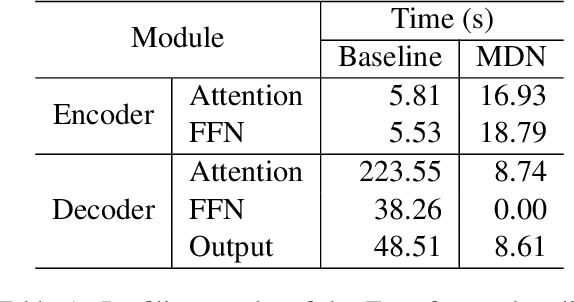

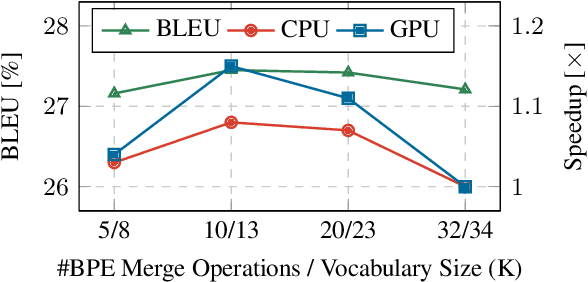

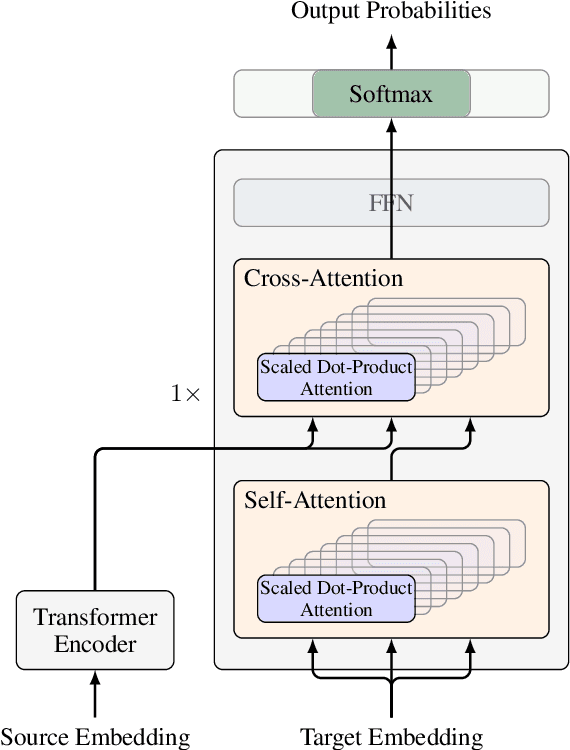

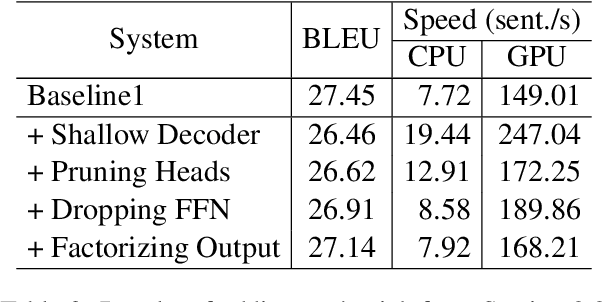

Improving Transformer efficiency has attracted growing attention. A wide range of methods has been proposed, e.g., pruning, quantization, and new architectures. But these methods are either sophisticated to implement or dependent on hardware. In this paper, we show that the efficiency of the Transformer can be improved by combining some simple and hardware-agnostic methods, including hyper-parameter tuning, better design choices, and training strategies. On the WMT news translation tasks, we improve the inference efficiency of a strong Transformer system by 3.80X on CPU and 2.52X on GPU. The code is publicly available at https://github.com/Lollipop321/mini-decoder-network.

* accepted by EMNLP (Findings) 2021
