Ax-BxP: Approximate Blocked Computation for Precision-Reconfigurable Deep Neural Network Acceleration

Nov 25, 2020

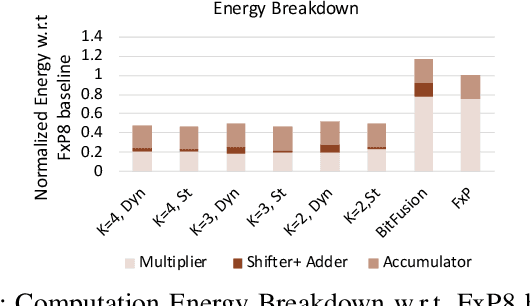

Precision scaling has emerged as a popular technique to optimize the compute and storage requirements of Deep Neural Networks (DNNs). Efforts toward creating ultra-low-precision (sub-8-bit) DNNs suggest that the minimum precision required to achieve a given network-level accuracy varies considerably across networks, and even across layers within a network, so DNN hardware must support variable precision. Previous proposals such as bit-serial hardware incur high overheads, significantly diminishing the benefits of lower precision. To efficiently support precision reconfigurability in DNN accelerators, we introduce an approximate computing method wherein DNN computations are performed block-wise (a block is a group of bits) and reconfigurability is supported at the granularity of blocks. Results of block-wise computations are composed in an approximate manner to enable efficient reconfigurability. We design a DNN accelerator that embodies approximate blocked computation and propose a method to determine a suitable approximation configuration for a given DNN. By varying the approximation configurations across DNNs, we achieve 1.11x-1.34x and 1.29x-1.6x improvements in system energy and performance, respectively, over an 8-bit fixed-point (FxP8) baseline, with negligible loss in classification accuracy. Further, by varying the approximation configurations across layers and data structures within DNNs, we achieve 1.14x-1.67x and 1.31x-1.93x improvements in system energy and performance, respectively, with negligible accuracy loss.
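To make the idea of blocked computation concrete, here is a minimal Python sketch. It is not the accelerator design or the exact approximation scheme from the paper, which the abstract does not specify. It assumes unsigned 8-bit operands split into four 2-bit blocks, and it approximates a multiplication by summing only the partial products of the most significant blocks. The names `split_blocks`, `blocked_mul`, `keep_a`, and `keep_b` are hypothetical and chosen only for illustration.

```python
def split_blocks(value, block_bits, num_blocks):
    """Split an unsigned integer into blocks of bits, least significant first."""
    mask = (1 << block_bits) - 1
    return [(value >> (i * block_bits)) & mask for i in range(num_blocks)]


def blocked_mul(a, b, block_bits=2, num_blocks=4, keep_a=None, keep_b=None):
    """Multiply two unsigned integers block-wise.

    keep_a / keep_b (hypothetical knobs) select how many of the most
    significant blocks of each operand contribute partial products.
    Keeping every block gives the exact product; keeping fewer drops
    the low-order partial products and yields an approximation.
    """
    keep_a = num_blocks if keep_a is None else keep_a
    keep_b = num_blocks if keep_b is None else keep_b
    a_blocks = split_blocks(a, block_bits, num_blocks)
    b_blocks = split_blocks(b, block_bits, num_blocks)

    result = 0
    for i in range(num_blocks - keep_a, num_blocks):
        for j in range(num_blocks - keep_b, num_blocks):
            # Each block-level product is shifted to its place value.
            result += (a_blocks[i] * b_blocks[j]) << (block_bits * (i + j))
    return result


exact = blocked_mul(200, 100)                       # all 16 partial products
approx = blocked_mul(200, 100, keep_a=2, keep_b=2)  # only 4 partial products
relative_error = (exact - approx) / exact
```

In this sketch, keeping two of four blocks per operand cuts the number of block-level multiplications from 16 to 4 at the cost of some error in the result. Choosing how many blocks to keep per network, layer, or data structure is the kind of approximation configuration the abstract refers to, although the actual selection method is described in the paper rather than here.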
