Average Localised Proximity: a new data descriptor with good default one-class classification performance

Paper and Code

Jan 26, 2021

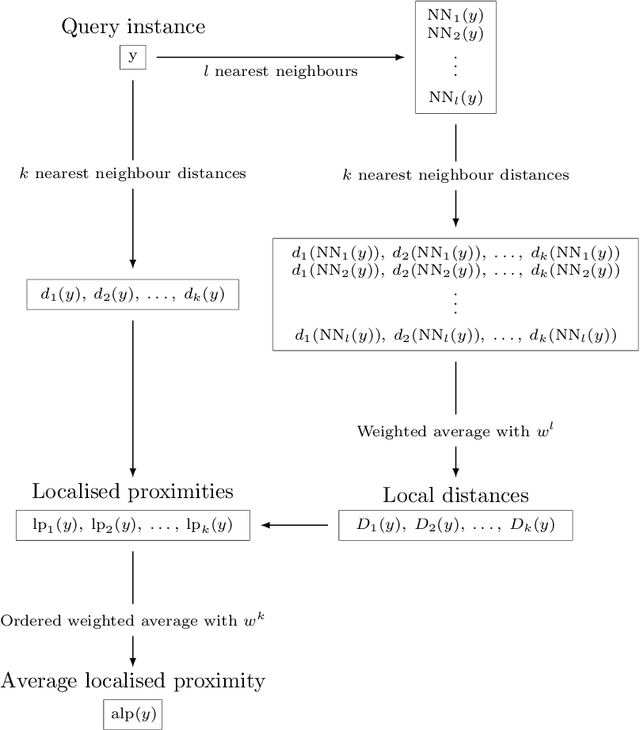

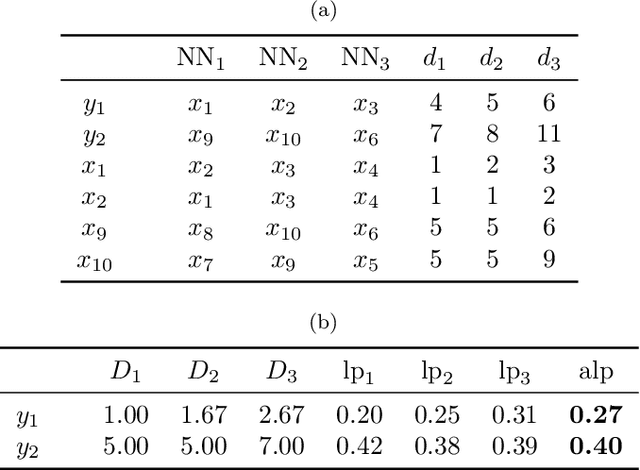

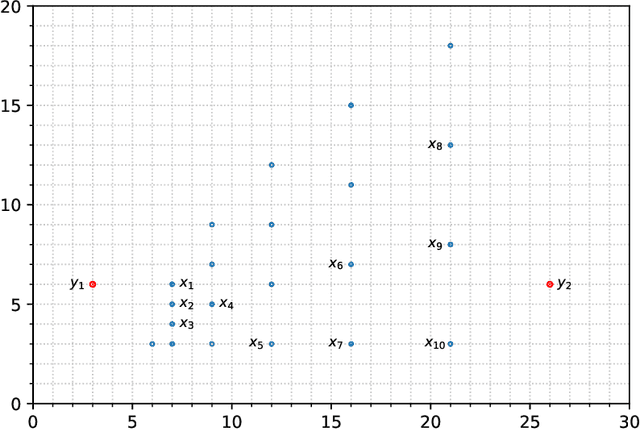

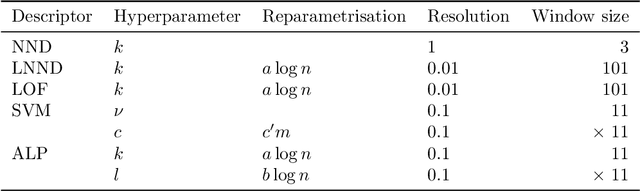

One-class classification is a challenging subfield of machine learning in which so-called data descriptors are used to predict membership of a class based solely on positive examples of that class, without any counter-examples. A number of data descriptors that have been shown to perform well in previous studies of one-class classification, such as the Support Vector Machine (SVM), require setting one or more hyperparameters. There has been no systematic attempt to date to determine optimal default values for these hyperparameters, which limits their ease of use, especially in comparison with hyperparameter-free proposals like the Isolation Forest (IF). We address this issue by determining optimal default hyperparameter values across a collection of 246 one-class classification problems derived from 50 different real-world datasets. In addition, we propose a new data descriptor, Average Localised Proximity (ALP), to address certain issues with existing approaches based on nearest neighbour distances. Finally, we evaluate classification performance using a leave-one-dataset-out procedure, and find strong evidence that ALP outperforms IF and a number of other data descriptors, as well as weak evidence that it outperforms SVM, making ALP a good default choice.
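The leave-one-dataset-out idea mentioned in the abstract can be illustrated with a minimal sketch. This is not the authors' code: the function name `leave_one_dataset_out`, the single score per dataset, and the example numbers are all hypothetical, and the paper itself works with multiple one-class problems per dataset. The sketch only shows the general pattern: for each held-out dataset, pick the hyperparameter value that scores best on average across the remaining datasets, then report how that "default" performs on the held-out one.

```python
from statistics import mean


def leave_one_dataset_out(scores):
    """Evaluate a data-driven default hyperparameter value.

    `scores` maps dataset name -> {hyperparameter value: score}, where a
    higher score is better (for example an AUROC). Every dataset is assumed
    to have been scored on the same candidate values, and at least two
    datasets are required.
    """
    results = {}
    for held_out in scores:
        # All datasets except the held-out one are used to choose the default.
        training = [name for name in scores if name != held_out]
        candidates = scores[held_out].keys()
        # The default is the candidate with the best mean score on the rest.
        best = max(
            candidates,
            key=lambda value: mean(scores[name][value] for name in training),
        )
        # Record the chosen default and its score on the held-out dataset.
        results[held_out] = (best, scores[held_out][best])
    return results


# Hypothetical scores for three datasets and two candidate values.
example_scores = {
    "A": {0.1: 0.80, 1.0: 0.90},
    "B": {0.1: 0.85, 1.0: 0.70},
    "C": {0.1: 0.60, 1.0: 0.95},
}
example_results = leave_one_dataset_out(example_scores)
```

Because the default is never chosen using the dataset it is evaluated on, the held-out scores give a fairer picture of how a fixed default would behave on an unseen problem.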
