Autonomous, Monocular, Vision-Based Snake Robot Navigation and Traversal of Cluttered Environments using Rectilinear Gait Motion


Aug 19, 2019

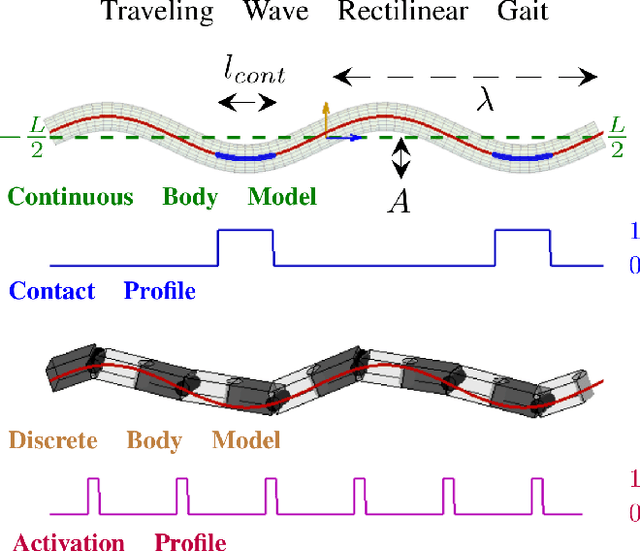

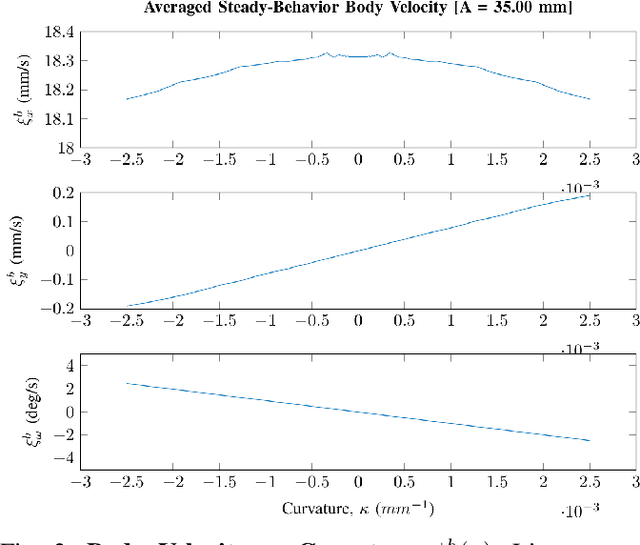

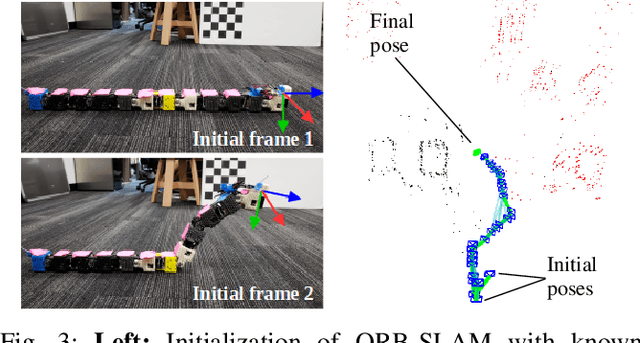

Rectilinear forms of snake-like robotic locomotion are expected to be advantageous in the obstacle-strewn scenarios that characterize urban disaster zones, subterranean collapses, and other natural environments. The elongated, laterally narrow footprint associated with these motion strategies is well suited to traversing confined spaces and narrow pathways. Navigation and path planning in the absence of global sensing, however, remain a pivotal challenge to be addressed before these robotic mechanisms can be deployed in practice. Several challenges related to visual processing and localization need to be resolved to enable navigation. As a first pass in this direction, we mount a wireless, monocular color camera on the head of a robotic snake. Visual odometry and mapping from ORB-SLAM permit self-localization in planar, obstacle-strewn environments. Ground-plane traversability segmentation, in conjunction with perception-space collision detection, permits path planning for navigation. A previously presented dynamical reduction of rectilinear snake locomotion to a non-holonomic kinematic vehicle informs both SLAM and planning. The simplified motion model is then applied to track planned trajectories through an obstacle configuration. This navigational framework enables a snake-like robotic platform to autonomously navigate and traverse unknown scenarios using only monocular vision.
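To illustrate what a non-holonomic kinematic vehicle model looks like in practice, the sketch below uses the standard planar unicycle model with a simple proportional steering law. This is an assumption made for illustration only: the abstract does not give the specific reduced model or the tracking controller, and the function names, gains, speeds, and tolerances here are hypothetical.

```python
import math


def unicycle_step(x, y, theta, v, omega, dt):
    """Advance a planar unicycle pose by one forward-Euler step.

    The vehicle can only translate along its heading (non-holonomic
    constraint): there is no sideways velocity term.
    """
    x_new = x + v * math.cos(theta) * dt
    y_new = y + v * math.sin(theta) * dt
    theta_new = theta + omega * dt
    return x_new, y_new, theta_new


def steer_to_waypoint(x, y, theta, wx, wy, v_max=0.05, k_omega=1.0):
    """Hypothetical controller: constant speed, turn rate proportional
    to the heading error toward the waypoint (wx, wy)."""
    bearing = math.atan2(wy - y, wx - x)
    # Wrap the heading error into (-pi, pi].
    err = math.atan2(math.sin(bearing - theta), math.cos(bearing - theta))
    return v_max, k_omega * err


def track(waypoints, pose=(0.0, 0.0, 0.0), dt=0.1, tol=0.02, max_steps=10000):
    """Drive the unicycle through a list of (x, y) waypoints in order.

    Returns the list of visited poses. Each waypoint is abandoned after
    max_steps so the loop always terminates.
    """
    x, y, theta = pose
    path = [(x, y, theta)]
    for wx, wy in waypoints:
        for _ in range(max_steps):
            if math.hypot(wx - x, wy - y) < tol:
                break
            v, omega = steer_to_waypoint(x, y, theta, wx, wy)
            x, y, theta = unicycle_step(x, y, theta, v, omega, dt)
            path.append((x, y, theta))
    return path


if __name__ == "__main__":
    final_pose = track([(0.3, 0.3), (0.6, 0.0)])[-1]
    print(final_pose)
```

In a full pipeline the waypoints would come from the planner and the pose from the SLAM estimate; here both are simulated so the example stays self-contained.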
