Autonomous Drone Swarm Navigation and Multi-target Tracking in 3D Environments with Dynamic Obstacles


Feb 13, 2022

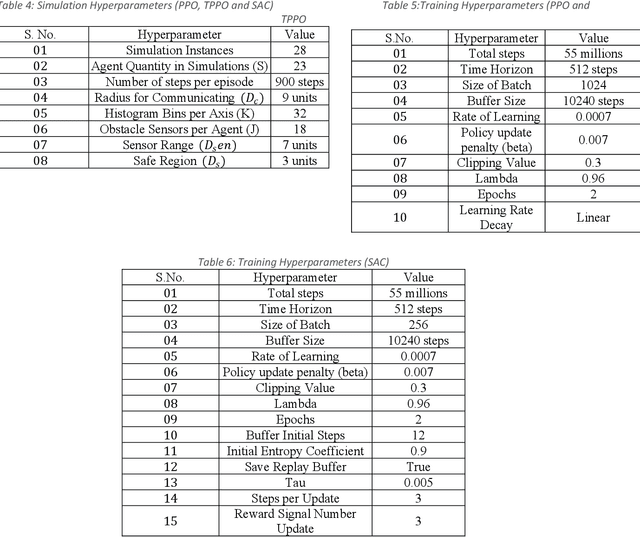

Autonomous modeling of artificial swarms is necessary because manual creation is time-intensive and complicated, which makes it impractical. This study presents an autonomous approach to swarm navigation based on deep reinforcement learning. In this approach, complex 3D environments with static and dynamic obstacles and resistive forces (such as linear drag, angular drag, and gravity) are modeled, and the swarm tracks multiple dynamic targets within them. Reward functions for robust swarm formation and target tracking are devised so that complex swarm behaviors can be learned. Because the number of agents is not fixed and each agent has only partial observability of the environment, swarm formation and navigation are challenging. To address these challenges, the proposed strategy consists of three main phases: 1) dynamic swarm management, 2) obstacle avoidance and finding the shortest path to the targets, and 3) target tracking and island modeling. The dynamic swarm management phase translates basic sensory input into high-level commands, which enhances swarm navigation and supports a decentralized setup while accommodating fluctuations in swarm size. In island modeling, the swarm can split into individual subswarms according to the number of targets; conversely, these subswarms may rejoin to form a single large swarm. This gives the swarm the ability to track multiple targets. Customized state-of-the-art policy-based deep reinforcement learning algorithms are employed. The results indicate that the proposed strategy enhances swarm navigation and can track multiple static and dynamic targets in complex dynamic environments.
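To make the island-modeling idea concrete, the following is a minimal sketch of how a swarm might be partitioned into subswarms, one per target. It is not the method of the study, which learns this behavior with deep reinforcement learning; a simple nearest-target rule is swapped in here purely for illustration. The function name `split_into_subswarms` and the sample coordinates are hypothetical.

```python
import math
from collections import defaultdict


def split_into_subswarms(agent_positions, target_positions):
    """Group agent indices by the index of their nearest target.

    Illustrative nearest-target heuristic (an assumption), standing in
    for the learned island-modeling behavior described in the abstract.
    """
    subswarms = defaultdict(list)
    for agent_idx, agent in enumerate(agent_positions):
        # Pick the target with the smallest Euclidean distance in 3D.
        nearest = min(
            range(len(target_positions)),
            key=lambda t: math.dist(agent, target_positions[t]),
        )
        subswarms[nearest].append(agent_idx)
    return dict(subswarms)


# Hypothetical 3D positions (x, y, z) for four agents and two targets.
agents = [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (9.0, 9.0, 2.0), (10.0, 8.0, 2.0)]
targets = [(0.5, 0.0, 1.0), (10.0, 10.0, 2.0)]

groups = split_into_subswarms(agents, targets)
merged = split_into_subswarms(agents, targets[:1])
```

With two targets the four agents fall into two subswarms; when only one target remains, the same call places every agent in a single group, which mirrors the split-and-rejoin behavior at a very coarse level.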
