Automatic structured variational inference


Feb 03, 2020

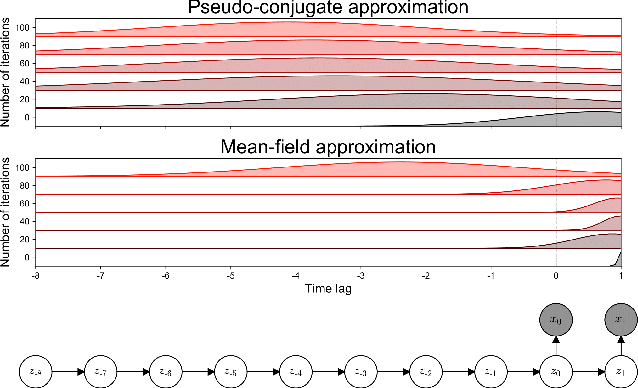

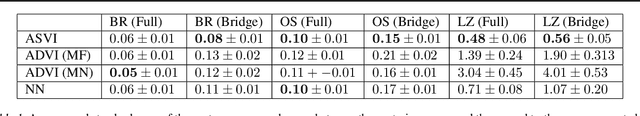

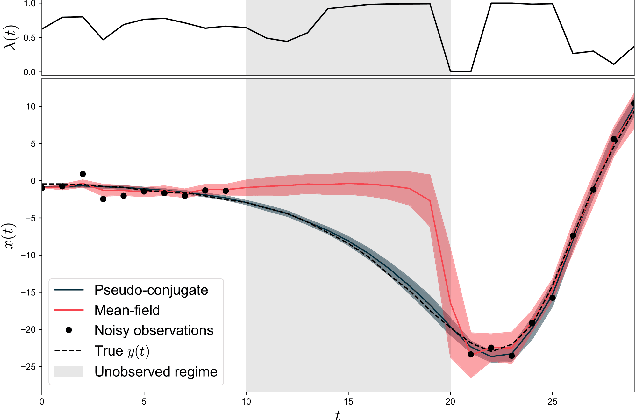

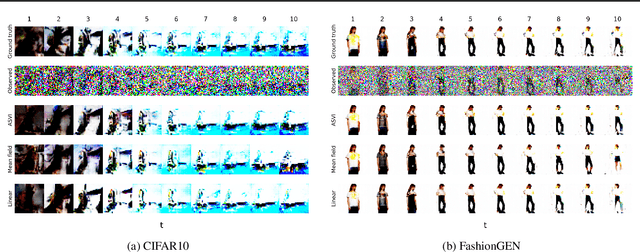

The aim of probabilistic programming is to automate every aspect of probabilistic inference in arbitrary probabilistic models (programs), so that the user can focus her attention on modeling without dealing with ad hoc inference methods. Gradient-based stochastic variational inference with automatic differentiation is an attractive default method for (differentiable) probabilistic programming, as it combines high performance with high computational efficiency. However, the performance of any (parametric) variational approach depends on the choice of an appropriate variational family. Here, we introduce a fully automatic method for constructing structured variational families, inspired by the closed-form update in conjugate models. These pseudo-conjugate families incorporate the forward pass of the input probabilistic program and can capture complex statistical dependencies. Pseudo-conjugate families have the same space and time complexity as the input probabilistic program and are therefore tractable in a very large class of models. We validate our automatic variational method on a wide range of high-dimensional inference problems, including models with deep learning components.
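To make the conjugate-update analogy concrete, here is a minimal Python sketch. Its first function is the standard closed-form posterior for a Gaussian mean with known variance, which is a convex combination of the prior mean and the sample mean. The remaining functions are an illustration under stated assumptions, not the paper's construction: the abstract does not give the parameterization, so `convex_update`, `sample_chain`, the mixing weights `lams`, the free parameters `alphas`, and the toy chain model z_t ~ N(transition(z_{t-1}), 1) are all hypothetical names and choices introduced here.

```python
import random


def conjugate_gaussian_posterior(prior_mean, prior_var, obs, obs_var):
    """Exact posterior over the mean of a Gaussian with known variance.

    Returns the posterior mean, posterior variance, and the weight w that
    the update places on the prior mean.
    """
    n = len(obs)
    prior_prec = 1.0 / prior_var
    data_prec = n / obs_var
    w = prior_prec / (prior_prec + data_prec)
    sample_mean = sum(obs) / n
    post_mean = w * prior_mean + (1.0 - w) * sample_mean
    post_var = 1.0 / (prior_prec + data_prec)
    return post_mean, post_var, w


def convex_update(prior_param, lam, alpha):
    """Assumed pseudo-conjugate form: mix a prior parameter with a free one.

    lam = 1 recovers the prior parameter; lam = 0 ignores the prior.
    """
    return lam * prior_param + (1.0 - lam) * alpha


def sample_chain(lams, alphas, scales, transition, rng):
    """Sample z_1..z_T from the assumed variational family.

    Each step runs the model's forward pass (transition) on the previous
    variational sample, then applies the convex update to the resulting
    prior mean. The cost is one transition call per latent variable, the
    same as sampling from the model itself.
    """
    z_prev = 0.0  # assumed fixed initial state z_0 of the toy model
    samples = []
    for lam, alpha, scale in zip(lams, alphas, scales):
        prior_mean = transition(z_prev)
        mean = convex_update(prior_mean, lam, alpha)
        z_prev = rng.gauss(mean, scale)
        samples.append(z_prev)
    return samples


if __name__ == "__main__":
    print(conjugate_gaussian_posterior(0.0, 1.0, [2.0, 2.0], 1.0))
    rng = random.Random(0)
    print(sample_chain([0.5] * 3, [1.0] * 3, [0.1] * 3, lambda z: 0.9 * z, rng))
```

In an actual variational fit, `lams`, `alphas`, and `scales` would be optimized by gradient ascent on the evidence lower bound; that step is omitted here. Because each variational sample is fed back through `transition`, dependencies between latent variables are inherited from the model rather than specified by hand.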
