Automatic Discovery of Novel Intents & Domains from Text Utterances


May 22, 2020

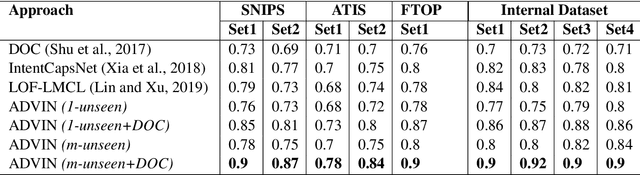

One of the primary tasks in Natural Language Understanding (NLU) is to recognize the intents and domains of users' spoken and written utterances. Most existing research formulates this as a supervised classification problem under a closed-world assumption, i.e., the domains or intents to be identified are predefined or known beforehand. Real-world applications, however, increasingly encounter dynamic, rapidly evolving environments with newly emerging intents and domains, about which no information is available during model training. We propose a novel framework, ADVIN, to automatically discover novel domains and intents from large volumes of unlabeled data. We first employ an open classification model to identify all utterances that potentially contain a novel intent. Next, we build a knowledge transfer component with a pairwise margin loss function. It learns discriminative deep features to group utterances together and discover multiple latent intent categories within them in an unsupervised manner. Finally, we hierarchically link mutually related intents into domains, forming an intent-domain taxonomy. ADVIN significantly outperforms baselines on three benchmark datasets and on real user utterances from a commercial voice-powered agent.
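To make the idea of a pairwise margin loss concrete, the sketch below shows a generic contrastive-style formulation: pairs of utterance embeddings labeled as sharing an intent are pulled together, while pairs from different intents are pushed apart until they are at least `margin` away. This is an illustrative assumption, not the loss defined in the paper; the abstract does not give ADVIN's exact formulation, and the function names, the Euclidean distance, and the default margin are all hypothetical.

```python
import math


def euclidean(u, v):
    """Euclidean distance between two equal-length embedding vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def pairwise_margin_loss(u, v, same_intent, margin=1.0):
    """Generic contrastive-style pairwise margin loss (illustrative only).

    Assumption: this is NOT taken from the ADVIN paper. It is a common
    formulation of a pairwise loss with a margin:
      - same-intent pairs are penalized by their squared distance;
      - different-intent pairs are penalized only while they are
        closer than `margin`.
    """
    d = euclidean(u, v)
    if same_intent:
        return d ** 2
    return max(0.0, margin - d) ** 2


def mean_pairwise_loss(pairs, margin=1.0):
    """Average the loss over (u, v, same_intent) triples."""
    losses = [pairwise_margin_loss(u, v, s, margin) for u, v, s in pairs]
    return sum(losses) / len(losses)
```

Under this formulation, a different-intent pair that is already farther apart than the margin contributes zero loss, so training effort concentrates on pairs that are still confusable. Embeddings trained this way can then be clustered to surface candidate intent groups.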
