Automated Formal Synthesis of Lyapunov Neural Networks

Paper and Code

Mar 19, 2020

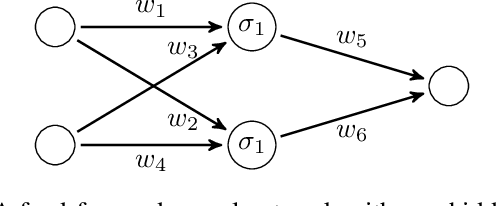

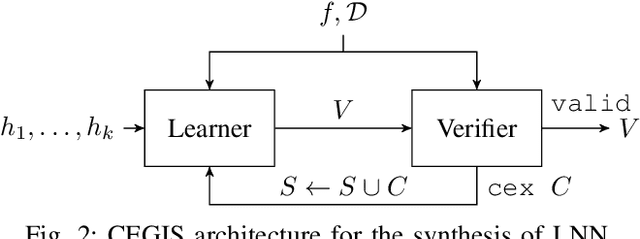

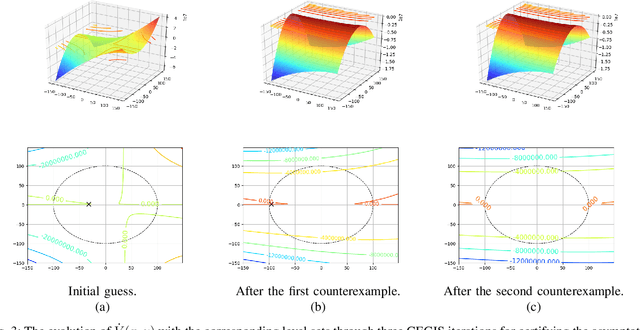

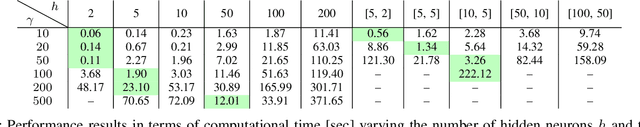

We propose an automated and sound technique to synthesize provably correct Lyapunov functions. We exploit a counterexample-guided approach composed of two parts: a learner provides candidate Lyapunov functions, and a verifier either guarantees the correctness of the candidate or offers counterexamples, which are used incrementally to further guide the synthesis of Lyapunov functions. Whilst the verifier employs a formal SMT solver, thus ensuring the overall soundness of the procedure, a neural network is used to learn and synthesize candidates over a domain of interest. Our approach flexibly supports neural networks of arbitrary size and depth, thus displaying interesting learning capabilities. In particular, we test our methodology over non-linear models that do not admit global polynomial Lyapunov functions, and compare the results against a cognate $\delta$-complete approach, and against an approach based on convex (SOS) optimization. The proposed technique outperforms these alternatives, synthesizing Lyapunov functions faster and over wider spatial domains.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge